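Kubernetes is the container orchestration and scheduling engine that Google open-sourced, drawing on its internal Borg system. As the most important CNCF project, Kubernetes aims to be more than an orchestrator: it provides a specification that lets us describe a cluster's architecture and declare the desired final state of its services, and the system then reaches and maintains that state automatically.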

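A system as large as Kubernetes cannot do without monitoring and alerting. For a Kubernetes cluster, monitoring generally has to cover the following areas:

- Kubernetes nodes: node-level metrics such as CPU, load, disk, and memory;

- Internal components: the detailed running state of kube-scheduler, kube-controller-manager, kube-dns/CoreDNS, and similar components;

- Orchestration-level metrics: the state of resources such as Deployment, Pod, DaemonSet, and StatefulSet, plus resource requests, scheduling, and API latency.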

Kubernetes monitoring options

The main options for monitoring Kubernetes are:

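Heapster: in early versions (before Kubernetes 1.10.x), Heapster handled cluster monitoring and data aggregation. It ran in the cluster as a Pod, and once deployed correctly, the resource data it collected could be viewed in the Kubernetes Dashboard add-on. (This approach is deprecated in newer versions.)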

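kube-state-metrics: kube-state-metrics watches the API Server and generates metrics about the state of resource objects. Note that it only exposes these metrics and does not store them, so a backend database is needed for storage. In addition, the names and labels of the metrics it exposes are not guaranteed to be stable and may change, so the configuration has to be adapted to the actual environment.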

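metrics-server: metrics-server is also a data aggregation tool for Kubernetes monitoring and is the replacement for Heapster, which has been deprecated since the release of Kubernetes 1.11.

metrics-server and kube-state-metrics differ considerably, mainly in the metrics each one focuses on:

- kube-state-metrics focuses on metadata about cluster resources, such as the state of Deployments, Pods, and replicas, and resource limits (comparatively static metrics);

- metrics-server focuses on implementing the resource metrics API, which serves real-time usage figures such as CPU and memory.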

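Prometheus: all of the options above depend on an external database, whereas Prometheus, an excellent monitoring tool, ships with its own built-in storage. Prometheus supports Kubernetes well: Kubernetes fits Prometheus's default pull model, and beyond that the support is native at the code level. Prometheus's Kubernetes service discovery can be used to monitor a cluster automatically.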
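To make the service-discovery point concrete, here is a minimal sketch of a hand-written scrape configuration. It assumes Prometheus runs inside the cluster with a service account that is allowed to list pods. The file name `prometheus-sd-example.yaml`, the job name, and the `prometheus.io/scrape` annotation convention are illustrative assumptions, not part of the installation described in this article; kube-prometheus generates its scrape configuration from ServiceMonitor objects instead.

```shell
# Write an illustrative scrape config to a file (the file name is an assumption).
cat > prometheus-sd-example.yaml <<'EOF'
scrape_configs:
  - job_name: kubernetes-pods        # illustrative job name
    kubernetes_sd_configs:
      - role: pod                    # discover pods through the API server
    relabel_configs:
      # Keep only pods annotated with prometheus.io/scrape: "true"
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: "true"
      # Copy discovery metadata into ordinary labels
      - source_labels: [__meta_kubernetes_namespace]
        target_label: namespace
      - source_labels: [__meta_kubernetes_pod_name]
        target_label: pod
EOF
```

With a configuration of this shape, newly created pods are picked up as scrape targets without editing a static target list.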

In a Kubernetes cluster, both the Horizontal Pod Autoscaler (HPA) and the kubectl top command depend on Heapster or metrics-server. To use either feature, one of the two components must be installed; metrics-server is recommended.
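A quick way to tell whether this dependency is satisfied is to ask the API server whether the resource metrics API is registered and available. The helper below is a sketch; the function name `metrics_api_available` is made up for illustration, and it assumes `kubectl` is already configured for the cluster.

```shell
# Return success when the resource metrics API (metrics.k8s.io) is registered
# and reported as Available by the API aggregation layer.
metrics_api_available() {
  status=$(kubectl get apiservice v1beta1.metrics.k8s.io \
    -o jsonpath='{.status.conditions[?(@.type=="Available")].status}' 2>/dev/null)
  [ "$status" = "True" ]
}

# Usage (requires access to a cluster):
#   metrics_api_available && kubectl top nodes
```

If the check fails, `kubectl top` and HPA have no metrics source to read from.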

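Installing Prometheus

Prometheus can be installed in several ways: from binaries, as a container, with Helm, with the Operator, or with the kube-prometheus stack. This article uses the kube-prometheus stack.

Prometheus stack project repository: kube-prometheus (https://github.com/prometheus-operator/kube-prometheus)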

The stack includes the following components:

- Prometheus Operator

- Highly available Prometheus

- Highly available Alertmanager

- Prometheus node_exporter

- Prometheus Adapter for Kubernetes Metrics APIs

- kube-state-metrics

- Grafana

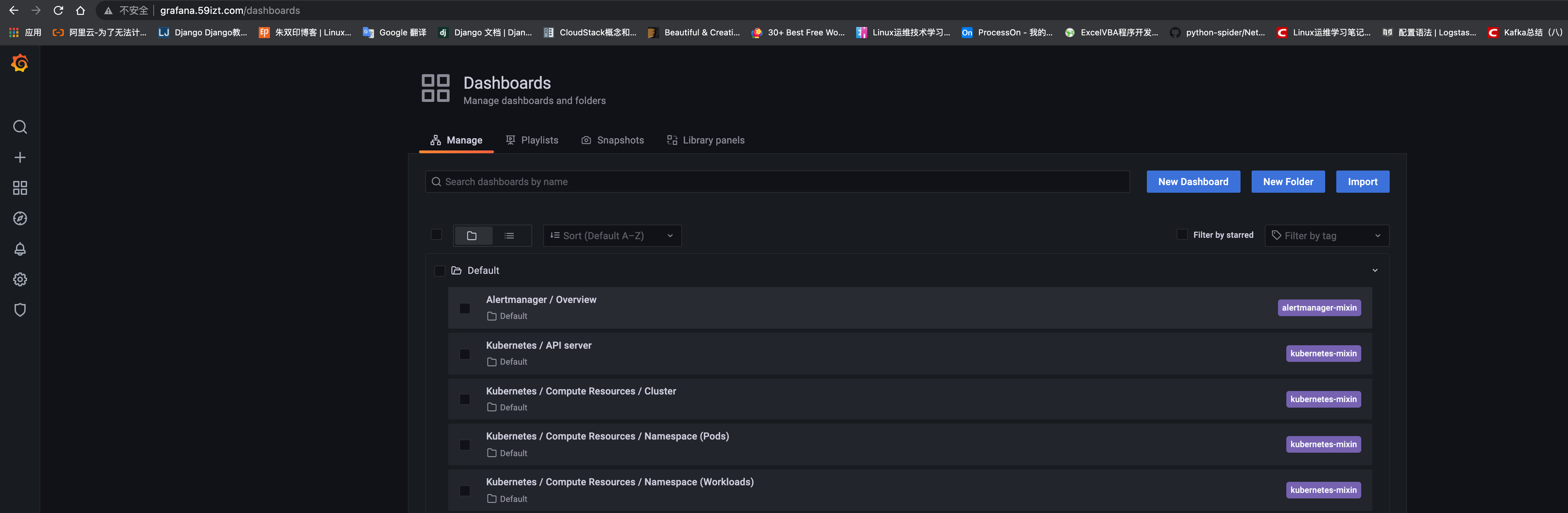

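The stack is meant for cluster monitoring, so it is pre-configured to collect metrics from all Kubernetes components. It also ships a default set of dashboards and alerting rules. Many of the useful dashboards and alerts come from the kubernetes-mixin project; like that project, kube-prometheus provides composable jsonnet as a library so that users can customize it to their own needs.

For compatibility with Kubernetes versions, see the Kubernetes compatibility matrix in the project README.

The following steps deploy the Prometheus stack to a k8s cluster: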

Clone the chosen release branch of the repository to the local machine:

```shell
git clone -b release-0.9 https://github.com/prometheus-operator/kube-prometheus.git
```

Install the Prometheus CRDs

```shell
# cd kube-prometheus/manifests
# kubectl create -f setup/
namespace/monitoring created
customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created
customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created
clusterrole.rbac.authorization.k8s.io/prometheus-operator created
clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created
deployment.apps/prometheus-operator created
service/prometheus-operator created
serviceaccount/prometheus-operator created

# Check the Operator
# kubectl get pods -n monitoring
NAME                                   READY   STATUS              RESTARTS   AGE
prometheus-operator-75d9b475d9-xzz68   0/2     ContainerCreating   0          78s
```

Change the image addresses. Use the following command to check whether any manifest uses an image hosted at k8s.gcr.io:

```shell
grep -R 'k8s.gcr.io' ./
```

- In kube-state-metrics-deployment.yaml, change the kube-state-metrics image to bitnami/kube-state-metrics:2.1.1

- In prometheus-adapter-deployment.yaml, change the prometheus-adapter image to willdockerhub/prometheus-adapter:v0.9.0
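The two image changes can also be scripted instead of edited by hand. The sketch below assumes the affected lines have the form `image: k8s.gcr.io/...`; the function name `replace_gcr_image` is hypothetical, and the replacement images are the ones named in the list above.

```shell
# Replace the image of every container that is pulled from k8s.gcr.io
# in one manifest. Usage: replace_gcr_image <file> <new-image>
replace_gcr_image() {
  file=$1
  new_image=$2
  # -i.bak keeps a backup copy and works with both GNU and BSD sed.
  sed -i.bak -e "s#\(image:[[:space:]]*\)k8s\.gcr\.io/.*#\1${new_image}#" "$file"
}

# Usage, run from kube-prometheus/manifests:
#   replace_gcr_image kube-state-metrics-deployment.yaml bitnami/kube-state-metrics:2.1.1
#   replace_gcr_image prometheus-adapter-deployment.yaml willdockerhub/prometheus-adapter:v0.9.0
#   rm -f ./*.bak                      # drop the backups once the result looks right
#   grep -R 'k8s.gcr.io' ./            # should print nothing afterwards
```

Images from other registries in the same file (for example the kube-rbac-proxy sidecars) are left untouched, because only lines that start with the k8s.gcr.io registry are rewritten.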

Install the Prometheus stack

```shell
[root@k8s-master-01 manifests]# kubectl create -f .

# Check the Pod status
# kubectl get pods -n monitoring
NAME                                   READY   STATUS    RESTARTS   AGE
alertmanager-main-0                    2/2     Running   0          4m24s
alertmanager-main-1                    2/2     Running   0          4m24s
alertmanager-main-2                    2/2     Running   0          4m24s
blackbox-exporter-6798fb5bb4-8ltmf     3/3     Running   0          4m24s
grafana-7476b4c65b-2mrcs               1/1     Running   0          4m22s
kube-state-metrics-7fcc9c66b-6542d     3/3     Running   0          4m21s
node-exporter-bzw4h                    2/2     Running   0          4m20s
node-exporter-mmlzm                    2/2     Running   0          4m20s
node-exporter-pcfk5                    2/2     Running   0          4m21s
node-exporter-tvk4l                    2/2     Running   0          4m20s
node-exporter-v6jbm                    2/2     Running   0          4m20s
prometheus-adapter-cd877bbf9-4llfq     1/1     Running   0          4m19s
prometheus-adapter-cd877bbf9-5rms5     1/1     Running   0          4m19s
prometheus-k8s-0                       2/2     Running   0          4m17s
prometheus-k8s-1                       2/2     Running   0          4m17s
prometheus-operator-75d9b475d9-p2wsg   2/2     Running   0          11m
```

Check the Services that were created. They are all of type ClusterIP by default, so either change them to NodePort or proxy them through ingress-nginx. Here we start by changing the Service type to NodePort:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22# 修改 grafana 的 svc

kubectl edit svc -n monitoring grafana

# 修改 Prometheus 的 svc

kubectl edit svc -n monitoring prometheus-k8s

# 修改 Alertmanager 的 svc

kubectl edit svc -n monitoring alertmanager-main

# 查看修改后的 NodePort

# kubectl get svc -n monitoring

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main NodePort 10.108.174.128 <none> 9093:32512/TCP 11m

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 11m

blackbox-exporter ClusterIP 10.100.164.90 <none> 9115/TCP,19115/TCP 11m

grafana NodePort 10.96.133.97 <none> 3000:31613/TCP 11m

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 11m

node-exporter ClusterIP None <none> 9100/TCP 11m

prometheus-adapter ClusterIP 10.104.101.25 <none> 443/TCP 11m

prometheus-k8s NodePort 10.110.158.21 <none> 9090:31049/TCP 11m

prometheus-operated ClusterIP None <none> 9090/TCP 11m

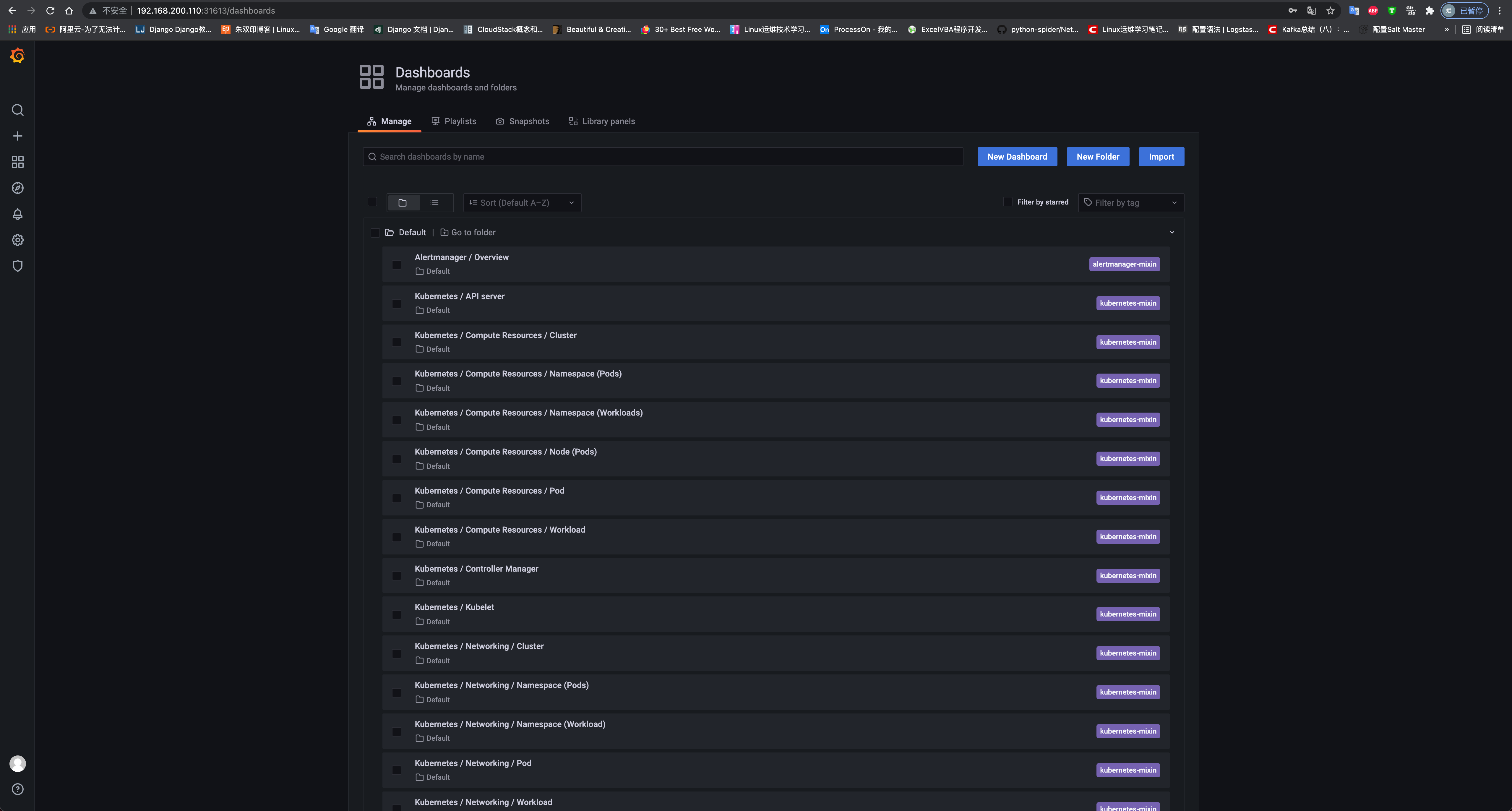

prometheus-operator ClusterIP None <none> 8443/TCP 19m打开浏览器,访问 grafana 服务

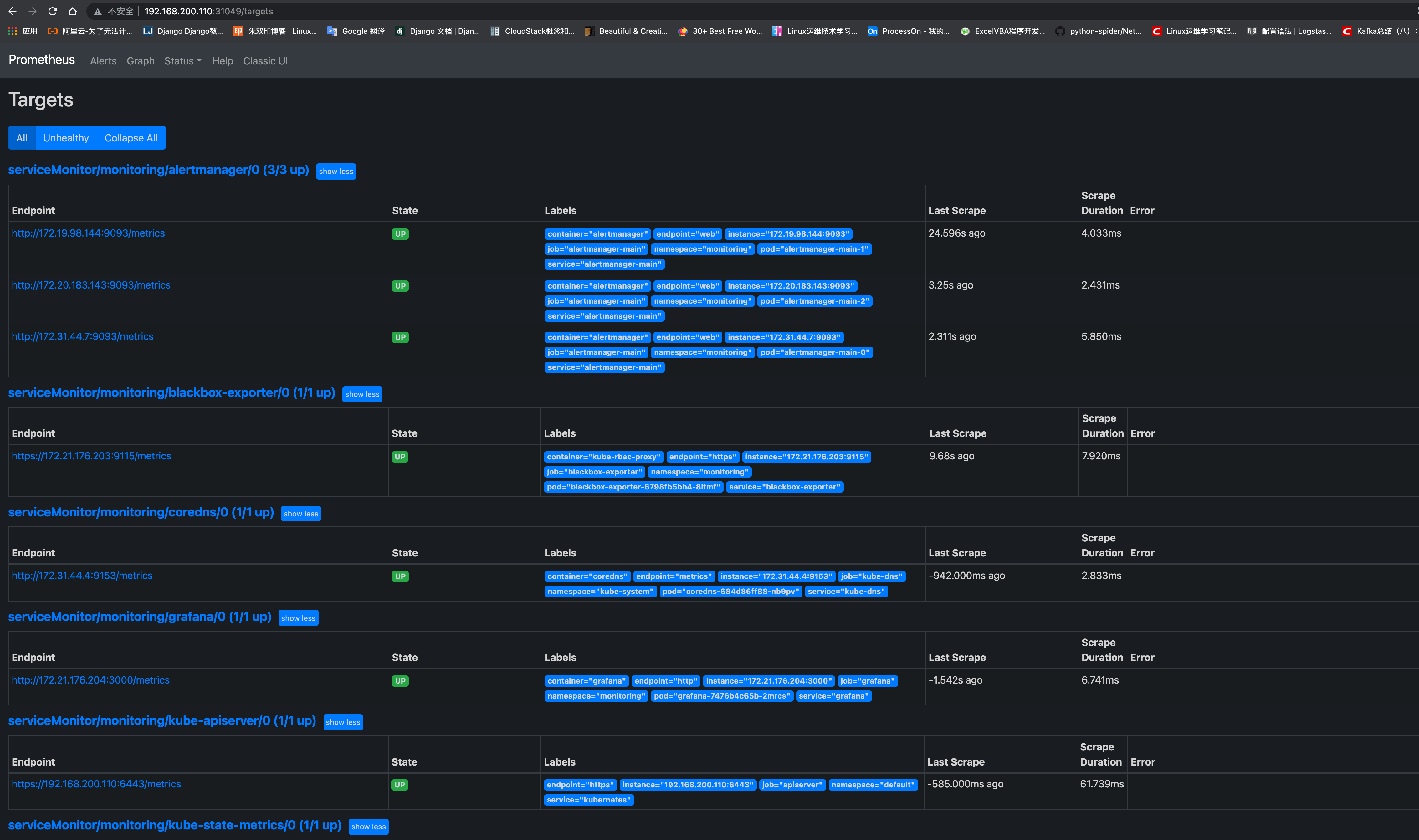

Visiting the Prometheus page

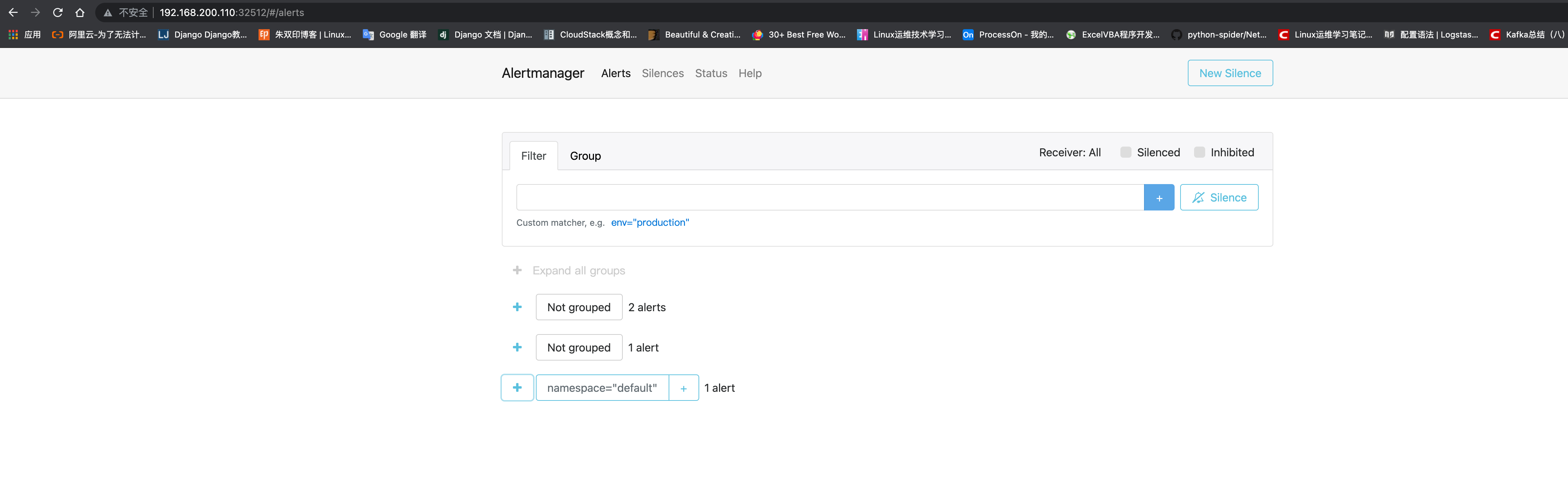

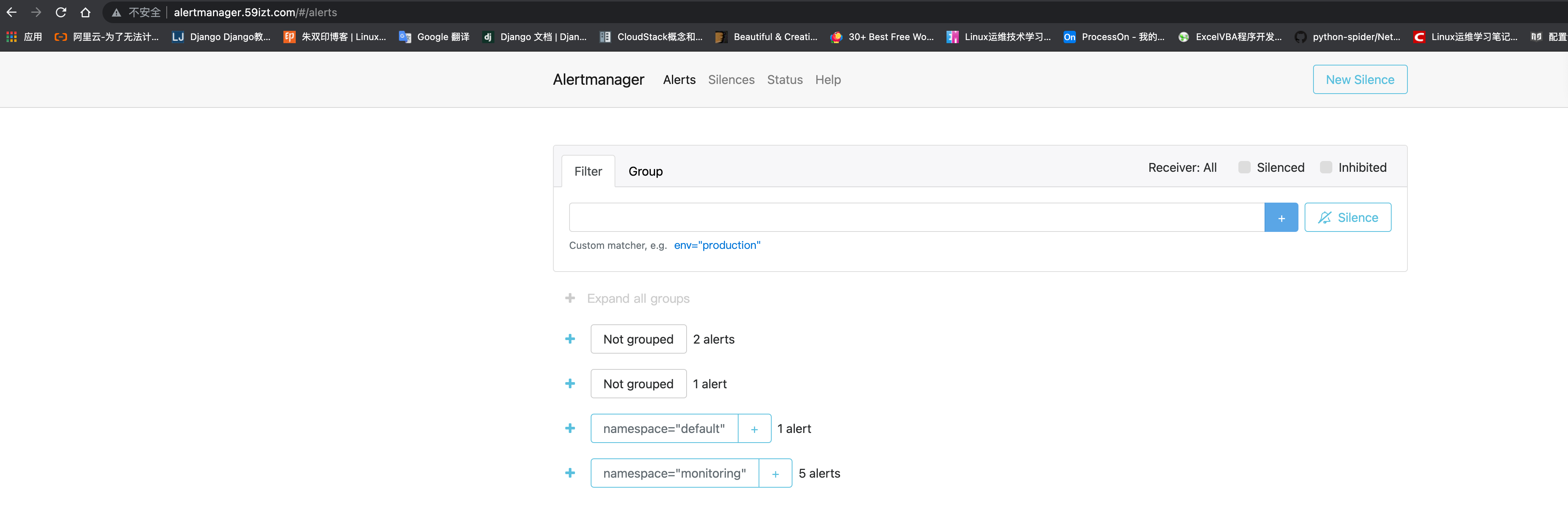

Visiting the Alertmanager page
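The switch to NodePort that was done interactively with `kubectl edit` can also be applied non-interactively with `kubectl patch`. This is a sketch under the assumption that the three Services keep their default names from kube-prometheus; the function names are made up for illustration.

```shell
# Switch the three web UIs from ClusterIP to NodePort without opening an editor.
expose_as_nodeport() {
  for svc in grafana prometheus-k8s alertmanager-main; do
    kubectl -n monitoring patch svc "$svc" -p '{"spec":{"type":"NodePort"}}' || return 1
  done
}

# Print "<service> <nodePort>" for one Service.
show_nodeport() {
  kubectl -n monitoring get svc "$1" \
    -o jsonpath='{.metadata.name} {.spec.ports[0].nodePort}{"\n"}'
}

# Usage (requires access to a cluster):
#   expose_as_nodeport && show_nodeport grafana
```

Each UI is then reachable at `http://<any-node-ip>:<nodePort>`, with the port taken from the output.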

Configuring an Ingress to proxy the Prometheus-related services

Create an ingress-nginx configuration file with the following content:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: prometheus-grafana-alertmanager
  namespace: monitoring
spec:
  ingressClassName: nginx
  rules:
  - host: grafana.59izt.com
    http:
      paths:
      - backend:
          service:
            name: grafana
            port:
              number: 3000
        path: /
        pathType: ImplementationSpecific
  - host: prometheus.59izt.com
    http:
      paths:
      - backend:
          service:
            name: prometheus-k8s
            port:
              number: 9090
        path: /
        pathType: ImplementationSpecific
  - host: alertmanager.59izt.com
    http:
      paths:
      - backend:
          service:
            name: alertmanager-main
            port:
              number: 9093
        path: /
        pathType: ImplementationSpecific
```

Create the Ingress resource:

```shell
# kubectl create -f prometheus-ingress.yaml
Warning: networking.k8s.io/v1beta1 Ingress is deprecated in v1.19+, unavailable in v1.22+; use networking.k8s.io/v1 Ingress
ingress.networking.k8s.io/prometheus-grafana-alertmanager created
```

Configure DNS resolution or a hosts mapping, then test access to the domain names in a browser

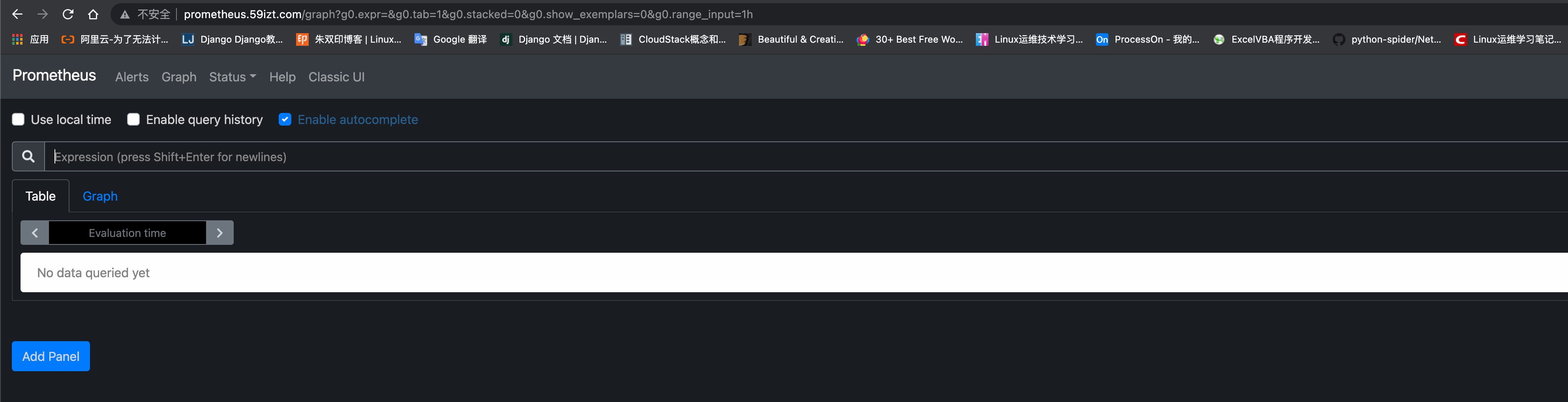

Accessing Prometheus by domain name

Accessing Grafana by domain name

Accessing Alertmanager by domain name
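For the hosts-mapping variant of the step above, the entries can be generated rather than typed. The helper below is a sketch; its name is hypothetical, and the address in the usage example is a documentation placeholder, not the ingress controller address of the cluster in this article.

```shell
# Print /etc/hosts lines that map the three Ingress hosts to one address.
# Usage: ingress_hosts_entries <ingress-controller-ip>
ingress_hosts_entries() {
  ip=$1
  for host in grafana.59izt.com prometheus.59izt.com alertmanager.59izt.com; do
    printf '%s %s\n' "$ip" "$host"
  done
}

# Usage (192.0.2.10 is a placeholder address):
#   ingress_hosts_entries 192.0.2.10 | sudo tee -a /etc/hosts
#   curl -s -o /dev/null -w '%{http_code}\n' http://grafana.59izt.com/api/health
```

The `curl` line checks Grafana's `/api/health` endpoint through the Ingress, which is the same path the Grafana readiness probe uses.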

Monitoring the k8s master components

Configuring monitoring for kube-controller-manager

View the ServiceMonitor configuration for kube-controller-manager:

```yaml
# kubectl get servicemonitor -n monitoring kube-controller-manager -oyaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  creationTimestamp: "2022-07-14T04:14:14Z"
  generation: 1
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager
  namespace: monitoring
  resourceVersion: "2539376"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/monitoring/servicemonitors/kube-controller-manager
  uid: 8fcef0ea-6d32-462e-8fd9-d28f734f6f15
spec:
  endpoints:
  - interval: 30s
    metricRelabelings:
    - action: drop
      regex: etcd_(debugging|disk|request|server).*
      sourceLabels:
      - __name__
    port: http-metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-controller-manager
```

This ServiceMonitor selects Services in the kube-system namespace that carry the label k8s-app=kube-controller-manager. Next, use that label to check whether such a Service exists.
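The label check itself is not shown in the walkthrough; one way to run it, as a minimal sketch, is a label-selector query against kube-system. The function name `has_component_service` is an assumption for illustration.

```shell
# Succeed when at least one Service in kube-system carries the given k8s-app label.
# Usage: has_component_service <k8s-app-label-value>
has_component_service() {
  found=$(kubectl -n kube-system get svc -l "k8s-app=$1" -o name 2>/dev/null)
  [ -n "$found" ]
}

# Usage (requires access to a cluster):
#   has_component_service kube-controller-manager || echo "no matching Service"
```

When nothing matches, the ServiceMonitor has no target to scrape, which is why a Service and Endpoints object have to be created by hand.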

Manually create the Service and Endpoints for kube-controller-manager

```shell
cat > kube-controller-manager.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager
  namespace: kube-system
spec:
  type: ClusterIP
  ports:
  - name: http-metrics
    port: 10252
    protocol: TCP
    targetPort: 10252
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-controller-manager
  name: kube-controller-manager
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.3.13
  ports:
  - name: http-metrics
    port: 10252
    protocol: TCP
EOF

kubectl apply -f kube-controller-manager.yaml
```

Configuring monitoring for kube-scheduler

View the ServiceMonitor configuration for kube-scheduler:

```yaml
# kubectl get servicemonitor -n monitoring kube-scheduler -oyaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  creationTimestamp: "2022-07-14T04:14:14Z"
  generation: 1
  labels:
    k8s-app: kube-scheduler
  name: kube-scheduler
  namespace: monitoring
  resourceVersion: "2539381"
  selfLink: /apis/monitoring.coreos.com/v1/namespaces/monitoring/servicemonitors/kube-scheduler
  uid: e7f93183-4cae-431a-99d2-07e3a41dd834
spec:
  endpoints:
  - interval: 30s
    port: http-metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-scheduler
```

This ServiceMonitor selects Services in the kube-system namespace that carry the label k8s-app=kube-scheduler. Next, use that label to check whether such a Service exists.

Manually create the Service and Endpoints for kube-scheduler

```shell
cat > kube-scheduler.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: kube-scheduler
  name: kube-scheduler
  namespace: kube-system
spec:
  type: ClusterIP
  ports:
  - name: http-metrics
    port: 10251
    protocol: TCP
    targetPort: 10251
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: kube-scheduler
  name: kube-scheduler
  namespace: kube-system
subsets:
- addresses:
  - ip: 192.168.3.13
  ports:
  - name: http-metrics
    port: 10251
    protocol: TCP
EOF

kubectl apply -f kube-scheduler.yaml
```
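The kube-controller-manager and kube-scheduler manifests differ only in the component name and port, so they can be produced by one small template function. This is a sketch; `render_component_service` is a hypothetical helper, and passing more than one IP (for clusters with several masters) is an extension that the walkthrough itself does not cover.

```shell
# Print a Service plus Endpoints manifest for a control-plane component.
# Usage: render_component_service <name> <port> <ip>...
render_component_service() {
  name=$1
  port=$2
  shift 2
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    k8s-app: ${name}
  name: ${name}
  namespace: kube-system
spec:
  type: ClusterIP
  ports:
  - name: http-metrics
    port: ${port}
    protocol: TCP
    targetPort: ${port}
---
apiVersion: v1
kind: Endpoints
metadata:
  labels:
    k8s-app: ${name}
  name: ${name}
  namespace: kube-system
subsets:
- addresses:
EOF
  # One address entry per master IP passed on the command line.
  for ip in "$@"; do
    printf '  - ip: %s\n' "$ip"
  done
  cat <<EOF
  ports:
  - name: http-metrics
    port: ${port}
    protocol: TCP
EOF
}

# Usage:
#   render_component_service kube-scheduler 10251 192.168.3.13 | kubectl apply -f -
```

The port name `http-metrics` and the `k8s-app` label are the two values the ServiceMonitor matches on, so they are kept fixed in the template.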

Data persistence

Persisting Prometheus

Edit kube-prometheus/manifests/prometheus-prometheus.yaml as follows to add the data persistence configuration:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: Prometheus
metadata:
  labels:
    app.kubernetes.io/component: prometheus
    app.kubernetes.io/name: prometheus
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 2.29.1
    prometheus: k8s
  name: k8s
  namespace: monitoring
spec:
  alerting:
    alertmanagers:
    - apiVersion: v2
      name: alertmanager-main
      namespace: monitoring
      port: web
  enableFeatures: []
  externalLabels: {}
  image: quay.io/prometheus/prometheus:v2.29.1
  nodeSelector:
    kubernetes.io/os: linux
  podMetadata:
    labels:
      app.kubernetes.io/component: prometheus
      app.kubernetes.io/name: prometheus
      app.kubernetes.io/part-of: kube-prometheus
      app.kubernetes.io/version: 2.29.1
  podMonitorNamespaceSelector: {}
  podMonitorSelector: {}
  probeNamespaceSelector: {}
  probeSelector: {}
  replicas: 2
  resources:
    requests:
      memory: 400Mi
  ruleNamespaceSelector: {}
  ruleSelector: {}
  securityContext:
    fsGroup: 2000
    runAsNonRoot: true
    runAsUser: 1000
  serviceAccountName: prometheus-k8s
  serviceMonitorNamespaceSelector: {}
  serviceMonitorSelector: {}
  version: 2.29.1
  # The data persistence configuration added below
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: glusterfs
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
```

Replace the resource:

```shell
# kubectl replace -f prometheus-prometheus.yaml
prometheus.monitoring.coreos.com/k8s replaced
```

View the PVCs and PVs that were created:

```shell
# kubectl get pvc -n monitoring
NAME                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
prometheus-k8s-db-prometheus-k8s-0   Bound    pvc-5a3b08ab-abab-4d56-9ef8-a6b4ef979f07   5Gi        RWO            glusterfs      3m15s
prometheus-k8s-db-prometheus-k8s-1   Bound    pvc-a729aa28-547b-4cdf-888a-4f3527d7870b   5Gi        RWO            glusterfs      3m15s

# kubectl get pv |grep monitoring
pvc-5a3b08ab-abab-4d56-9ef8-a6b4ef979f07   5Gi   RWO   Delete   Bound   monitoring/prometheus-k8s-db-prometheus-k8s-0   glusterfs   4m9s
pvc-a729aa28-547b-4cdf-888a-4f3527d7870b   5Gi   RWO   Delete   Bound   monitoring/prometheus-k8s-db-prometheus-k8s-1   glusterfs   4m9s
```
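Whether a 5Gi claim is large enough depends on how long data is kept and how many samples are ingested. The Prometheus storage documentation gives a rough formula (retention time in seconds times ingested samples per second times bytes per sample, with roughly 1 to 2 bytes per sample on average). The sketch below applies it in shell arithmetic; the function name and the sample rate in the usage example are assumptions, and the real rate has to be read from a running Prometheus.

```shell
# Rough TSDB size estimate:
#   needed_disk_space = retention_seconds * ingested_samples_per_second * bytes_per_sample
# Usage: estimate_tsdb_gib <retention-days> <samples-per-second> <bytes-per-sample>
estimate_tsdb_gib() {
  bytes=$(( $1 * 86400 * $2 * $3 ))
  gib=$(( 1024 * 1024 * 1024 ))
  # Round up to whole GiB.
  echo $(( (bytes + gib - 1) / gib ))
}

# Usage (10000 samples/s is a made-up rate for illustration):
#   estimate_tsdb_gib 30 10000 2
```

The ingestion rate of a running instance can be read with the query `rate(prometheus_tsdb_head_samples_appended_total[5m])`.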

Persisting Grafana

Edit kube-prometheus/manifests/grafana-deployment.yaml: add a PVC definition and change the volume configuration.

```yaml
# Add the PVC manifest at the top of the file
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-pvc
  namespace: monitoring
spec:
  storageClassName: glusterfs
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: grafana
    app.kubernetes.io/name: grafana
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 8.1.1
  name: grafana
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: grafana
      app.kubernetes.io/name: grafana
      app.kubernetes.io/part-of: kube-prometheus
  template:
    metadata:
      annotations:
        checksum/grafana-datasources: fbf9c3b28f5667257167c2cec0ac311a
      labels:
        app.kubernetes.io/component: grafana
        app.kubernetes.io/name: grafana
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 8.1.1
    spec:
      containers:
      - env: []
        image: grafana/grafana:8.1.1
        name: grafana
        ports:
        - containerPort: 3000
          name: http
        readinessProbe:
          httpGet:
            path: /api/health
            port: http
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        # ... N lines omitted ...
      volumes:
      # Comment out the original volume configuration
      #- emptyDir: {}
      #  name: grafana-storage
      # Add the following volume configuration
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-pvc
      # ...... the remaining N lines are omitted ......
```

Apply the change:

```shell
# kubectl apply -f grafana-deployment.yaml
persistentvolumeclaim/grafana-pvc created
deployment.apps/grafana configured
```

View the PVC and PV:

```shell
# kubectl get pvc -n monitoring
NAME                                 STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
grafana-pvc                          Bound    pvc-83f9c75d-114d-45db-a467-72585bfbe51e   5Gi        RWO            glusterfs      66s
prometheus-k8s-db-prometheus-k8s-0   Bound    pvc-5a3b08ab-abab-4d56-9ef8-a6b4ef979f07   5Gi        RWO            glusterfs      21m
prometheus-k8s-db-prometheus-k8s-1   Bound    pvc-a729aa28-547b-4cdf-888a-4f3527d7870b   5Gi        RWO            glusterfs      21m

# kubectl get pv |grep grafana
pvc-83f9c75d-114d-45db-a467-72585bfbe51e   5Gi   RWO   Delete   Bound   monitoring/grafana-pvc   glusterfs   76s
```

Changing the Prometheus data retention to 30 days

Edit kube-prometheus/manifests/setup/prometheus-operator-deployment.yaml and add the argument shown below. Note that the Prometheus Operator normally takes the retention period from the retention field of the Prometheus custom resource (for example retention: 30d in prometheus-prometheus.yaml), so check afterwards that the Prometheus pods really run with a 30-day retention.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app.kubernetes.io/component: controller
    app.kubernetes.io/name: prometheus-operator
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.49.0
  name: prometheus-operator
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app.kubernetes.io/component: controller
      app.kubernetes.io/name: prometheus-operator
      app.kubernetes.io/part-of: kube-prometheus
  template:
    metadata:
      annotations:
        kubectl.kubernetes.io/default-container: prometheus-operator
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/name: prometheus-operator
        app.kubernetes.io/part-of: kube-prometheus
        app.kubernetes.io/version: 0.49.0
    spec:
      containers:
      - args:
        - --kubelet-service=kube-system/kubelet
        - --prometheus-config-reloader=quay.io/prometheus-operator/prometheus-config-reloader:v0.49.0
        # Add the storage.tsdb.retention.time=30d argument
        - storage.tsdb.retention.time=30d
        image: quay.io/prometheus-operator/prometheus-operator:v0.49.0
        name: prometheus-operator
        ports:
        - containerPort: 8080
          name: http
        resources:
          limits:
            cpu: 200m
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 100Mi
        securityContext:
          allowPrivilegeEscalation: false
      - args:
        - --logtostderr
        - --secure-listen-address=:8443
        - --tls-cipher-suites=TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_CHACHA20_POLY1305,TLS_ECDHE_ECDSA_WITH_CHACHA20_POLY1305
        - --upstream=http://127.0.0.1:8080/
        image: quay.io/brancz/kube-rbac-proxy:v0.11.0
        name: kube-rbac-proxy
        ports:
        - containerPort: 8443
          name: https
        resources:
          limits:
            cpu: 20m
            memory: 40Mi
          requests:
            cpu: 10m
            memory: 20Mi
        securityContext:
          runAsGroup: 65532
          runAsNonRoot: true
          runAsUser: 65532
      nodeSelector:
        kubernetes.io/os: linux
      securityContext:
        runAsNonRoot: true
        runAsUser: 65534
      serviceAccountName: prometheus-operator
```

Replace the resource:

```shell
# kubectl replace -f setup/prometheus-operator-deployment.yaml
deployment.apps/prometheus-operator replaced
```
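As an alternative way to reach the same 30-day goal, the retention period can be set on the Prometheus custom resource itself through its `retention` field, which the operator translates into the `--storage.tsdb.retention.time` flag of the Prometheus containers. This is a sketch, not the method used in the walkthrough above; the function names are hypothetical, and the resource names `k8s` and `prometheus-k8s` are the kube-prometheus defaults seen earlier in this article.

```shell
# Set the retention period on the Prometheus custom resource.
# Usage: set_prometheus_retention <duration>, for example 30d
set_prometheus_retention() {
  kubectl -n monitoring patch prometheus k8s --type merge \
    -p "{\"spec\":{\"retention\":\"$1\"}}"
}

# Print the retention flag that the generated StatefulSet passes to Prometheus.
show_prometheus_retention() {
  kubectl -n monitoring get statefulset prometheus-k8s -o yaml \
    | grep -- '--storage.tsdb.retention.time'
}

# Usage (requires access to a cluster):
#   set_prometheus_retention 30d && show_prometheus_retention
```

Setting the value in prometheus-prometheus.yaml (`retention: 30d` under `spec`) and re-applying the file has the same effect and keeps the manifest as the source of truth.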