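Installing the snapshot controller

On Kubernetes 1.19 and later, the snapshot controller must be installed separately before PVC snapshots will work, so it is installed up front here. On versions earlier than 1.19, no separate installation is needed.

Project repository: external-snapshotter (https://github.com/kubernetes-csi/external-snapshotter)

The volume snapshot feature depends on the volume snapshot controller and the volume snapshot CRDs. Both are independent of any CSI driver. No matter how many CSI drivers are deployed, each cluster must run only one volume snapshot controller instance and one set of volume snapshot CRDs.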
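Since a cluster should carry only one set of snapshot CRDs, it can help to check what is already installed before applying anything. The following is a minimal sketch in POSIX sh, not part of external-snapshotter: the helper name `missing_snapshot_crds` is made up for illustration, and it assumes its input has the format printed by `kubectl get crd -o name`.

```shell
# Print the volume snapshot CRDs that are NOT present in the list on stdin.
# Input is expected in the format printed by: kubectl get crd -o name
# (missing_snapshot_crds is a hypothetical helper name for this sketch.)
missing_snapshot_crds() {
  installed="$(cat)"
  for crd in volumesnapshotclasses volumesnapshotcontents volumesnapshots; do
    full="${crd}.snapshot.storage.k8s.io"
    # -x matches the whole line, -F treats the name as a literal string
    if ! printf '%s\n' "$installed" |
        grep -qxF "customresourcedefinition.apiextensions.k8s.io/${full}"; then
      printf '%s\n' "$full"
    fi
  done
}

# Usage against a live cluster (not executed here):
#   kubectl get crd -o name | missing_snapshot_crds
```

Empty output means all three CRDs already exist, in which case the CRD installation step can probably be skipped.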

Download external-snapshotter

git clone https://github.com/kubernetes-csi/external-snapshotter.git

Install the CRDs

# cd external-snapshotter/

# kubectl create -f client/config/crd

customresourcedefinition.apiextensions.k8s.io/volumesnapshotclasses.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshotcontents.snapshot.storage.k8s.io created

customresourcedefinition.apiextensions.k8s.io/volumesnapshots.snapshot.storage.k8s.io created

Change the snapshot-controller image address, then install the volume snapshot controller. (The snapshot-controller image must first be synced to a registry reachable from within China.)
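One way to change the image address without hand-editing is a small sed filter. This is only a sketch under stated assumptions: `rewrite_image_registry` is a hypothetical helper, `registry.example.com/mirror` stands in for whatever mirror you actually use, the manifest filename in the usage comment should be checked against the directory contents, and the filter only handles unquoted `image:` values.

```shell
# Rewrite the registry part of every "image:" line read from stdin,
# keeping the final "name:tag" component unchanged.
# $1 is the replacement registry prefix; it must not contain '#' or '&',
# because it is spliced into the sed expression as-is.
rewrite_image_registry() {
  sed -E "s#^([[:space:]]*(- )?image:[[:space:]]*)([^[:space:]]*/)?([^/[:space:]]+)\$#\1$1/\4#"
}

# Usage (not executed here; registry and filename are assumptions):
#   rewrite_image_registry registry.example.com/mirror \
#     < deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml \
#     > /tmp/setup-snapshot-controller.yaml
```

Writing the result to a separate file keeps the cloned repository unmodified, so a later `git pull` does not conflict with local edits.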

# kubectl create -f deploy/kubernetes/snapshot-controller/

serviceaccount/snapshot-controller created

clusterrole.rbac.authorization.k8s.io/snapshot-controller-runner created

clusterrolebinding.rbac.authorization.k8s.io/snapshot-controller-role created

role.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

rolebinding.rbac.authorization.k8s.io/snapshot-controller-leaderelection created

deployment.apps/snapshot-controller created

Check the snapshot controller

# kubectl get pods -n kube-system -l app=snapshot-controller

NAME READY STATUS RESTARTS AGE

snapshot-controller-7f9db47df5-bq7pk 1/1 Running 1 7m20s

snapshot-controller-7f9db47df5-trw59 1/1 Running 0 7m20s
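The pod listing above can also be checked mechanically, for example in a setup script. A minimal sketch, assuming input in the format of `kubectl get pods --no-headers`; the function name `all_pods_ready` is invented for this example.

```shell
# Succeed only if stdin contains at least one pod line and every pod is
# Running with all of its containers ready (READY column "n/n").
# Input is expected in the format printed by:
#   kubectl get pods -n kube-system -l app=snapshot-controller --no-headers
all_pods_ready() {
  awk '
    { split($2, r, "/"); seen++ }                # r[1] = ready, r[2] = total
    $3 != "Running" || r[1] != r[2] { bad++ }    # count pods that are not ready
    END { if (seen > 0 && bad == 0) exit 0; exit 1 }
  '
}

# Usage against a live cluster (not executed here):
#   kubectl get pods -n kube-system -l app=snapshot-controller --no-headers \
#     | all_pods_ready && echo "snapshot controller is up"
```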

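PVC snapshots

Notes:

- PVC snapshots require Kubernetes 1.17 or later

- The snapshot controller and the snapshot v1/v1beta1 CRDs must be installed. See external-snapshotter for more information.

Creating a block storage snapshot

Block storage is used here to demonstrate PVC snapshot and restore. Snapshot and restore for shared filesystem volumes work the same way; see the official documentation: CephFS Snapshots

Create the snapshotClass

# cd rook/cluster/examples/kubernetes/ceph/

# kubectl create -f csi/rbd/snapshotclass.yaml

volumesnapshotclass.snapshot.storage.k8s.io/csi-rbdplugin-snapclass created

In the MySQL container created earlier, create a directory and a file inside it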

# kubectl exec -ti wordpress-mysql-6965fc8cc8-62tb2 -- bash

root@wordpress-mysql-6965fc8cc8-62tb2:/# ls

bin boot dev docker-entrypoint-initdb.d entrypoint.sh etc home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@wordpress-mysql-6965fc8cc8-62tb2:/# cd /var/lib/mysql

root@wordpress-mysql-6965fc8cc8-62tb2:/var/lib/mysql# ls

auto.cnf ib_logfile0 ib_logfile1 ibdata1 lost+found mysql performance_schema

root@wordpress-mysql-6965fc8cc8-62tb2:/var/lib/mysql# mkdir test_snapshot

root@wordpress-mysql-6965fc8cc8-62tb2:/var/lib/mysql# ls

auto.cnf ib_logfile0 ib_logfile1 ibdata1 lost+found mysql performance_schema test_snapshot

root@wordpress-mysql-6965fc8cc8-62tb2:/var/lib/mysql# echo "test for snapshot" > test_snapshot/test.txt

root@wordpress-mysql-6965fc8cc8-62tb2:/var/lib/mysql# cat test_snapshot/test.txt

test for snapshot

Edit csi/rbd/snapshot.yaml so that the source PVC is the PVC mounted by the MySQL pod

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-b786a9c1-31c3-4344-b9cb-1a820ab9427a 3Gi RWO rook-ceph-block 4h30m

# vim csi/rbd/snapshot.yaml # set persistentVolumeClaimName as shown below

apiVersion: snapshot.storage.k8s.io/v1

kind: VolumeSnapshot

metadata:

  name: rbd-pvc-snapshot

spec:

  volumeSnapshotClassName: csi-rbdplugin-snapclass

  source:

    persistentVolumeClaimName: mysql-pv-claim

Create the snapshot

# kubectl create -f csi/rbd/snapshot.yaml

volumesnapshot.snapshot.storage.k8s.io/rbd-pvc-snapshot created

List the volumesnapshotclass and volumesnapshot that were created

1

2

3

4

5

6

7# kubectl get volumesnapshotclasses

NAME DRIVER DELETIONPOLICY AGE

csi-rbdplugin-snapclass rook-ceph.rbd.csi.ceph.com Delete 8m1s

[root@k8s-master-01 ceph]# kubectl get volumesnapshot

NAME READYTOUSE SOURCEPVC SOURCESNAPSHOTCONTENT RESTORESIZE SNAPSHOTCLASS SNAPSHOTCONTENT CREATIONTIME AGE

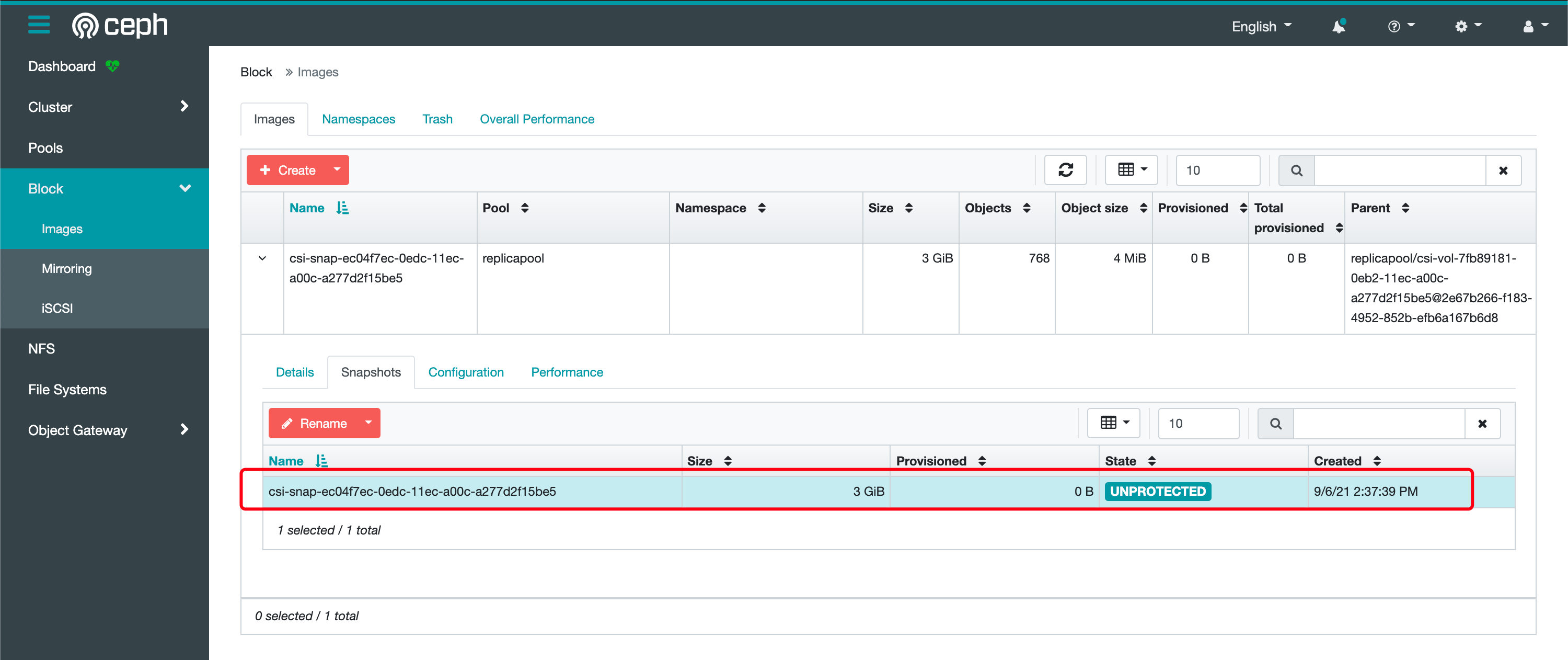

rbd-pvc-snapshot true mysql-pv-claim 3Gi csi-rbdplugin-snapclass snapcontent-a7c439c0-64fe-483c-953e-3fbdcd736d2b 7m47s 7m57s当 volumesnapshot 的 READYTOUSE 字段为 true 时,快照创建完成。此时也可以在 Ceph Dashboard 界面查看到块存储的快照信息
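Rather than re-running the listing by hand, a script can poll the snapshot's `.status.readyToUse` field, which is what the READYTOUSE column reflects. A minimal sketch: `wait_snapshot_ready` and the `KUBECTL` and `POLL_SECONDS` variables are names introduced here for illustration, not part of kubectl or Rook.

```shell
# Poll a VolumeSnapshot until .status.readyToUse is "true".
# $1 = snapshot name, $2 = maximum number of attempts (default 30).
# KUBECTL and POLL_SECONDS are overridable knobs invented for this sketch.
wait_snapshot_ready() {
  name="$1"
  attempts="${2:-30}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    ready="$("${KUBECTL:-kubectl}" get volumesnapshot "$name" \
      -o jsonpath='{.status.readyToUse}' 2>/dev/null)"
    if [ "$ready" = "true" ]; then
      return 0
    fi
    i=$((i + 1))
    sleep "${POLL_SECONDS:-2}"
  done
  echo "snapshot $name not ready after $attempts attempts" >&2
  return 1
}

# Usage against a live cluster (not executed here):
#   wait_snapshot_ready rbd-pvc-snapshot && echo "snapshot ready"
```

An empty result (status not yet populated) is treated the same as "false", so the loop simply keeps waiting until the attempt limit is reached.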

Creating a PVC from a snapshot

To create a PVC that already contains specific data, restore it from a snapshot.
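A restored PVC must request at least as much storage as the snapshot's restore size, so it may be worth checking the sizes before writing the claim. The sketch below is illustrative only: both function names are hypothetical, and the conversion handles whole-number quantities with the binary suffixes Ki, Mi, Gi and Ti, nothing else.

```shell
# Convert a whole-number Kubernetes quantity with a binary suffix
# (Ki, Mi, Gi, Ti) or a plain integer to bytes.
# Fractions and decimal suffixes (K, M, G) are out of scope for this sketch.
quantity_to_bytes() {
  case "$1" in
    *Ki) echo $(( ${1%Ki} * 1024 )) ;;
    *Mi) echo $(( ${1%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${1%Gi} * 1024 * 1024 * 1024 )) ;;
    *Ti) echo $(( ${1%Ti} * 1024 * 1024 * 1024 * 1024 )) ;;
    *)   echo "$1" ;;
  esac
}

# Succeed if the requested size ($1) is at least the restore size ($2).
request_fits_snapshot() {
  [ "$(quantity_to_bytes "$1")" -ge "$(quantity_to_bytes "$2")" ]
}

# Usage against a live cluster (not executed here):
#   restore_size="$(kubectl get volumesnapshot rbd-pvc-snapshot \
#     -o jsonpath='{.status.restoreSize}')"
#   request_fits_snapshot 3Gi "$restore_size" || echo "request too small"
```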

Write a pvc-restore.yaml file with the following content

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: rbd-pvc-restore

spec:

  storageClassName: rook-ceph-block # StorageClass of the new PVC

  dataSource:

    name: rbd-pvc-snapshot # snapshot name

    kind: VolumeSnapshot

    apiGroup: snapshot.storage.k8s.io

  accessModes:

    - ReadWriteOnce

  resources:

    requests:

      storage: 3Gi # must not be smaller than the original PVC

Create the PVC with the following command

# kubectl create -f csi/rbd/pvc-restore.yaml

persistentvolumeclaim/rbd-pvc-restore created

List the new PVC

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-b786a9c1-31c3-4344-b9cb-1a820ab9427a 3Gi RWO rook-ceph-block 5h21m

nginx-share-pvc Bound pvc-684bf6df-87ab-42f9-aac6-8262a8e7cc90 2Gi RWX rook-cephfs 5h53m

rbd-pvc-restore Bound pvc-4a8074ea-4630-4a3b-8184-e3dfd7af5dbd 3Gi RWO rook-ceph-block 6s

Verify the data: create a container that mounts this PVC and check whether it contains the file created earlier

- Create a restore-check-snapshot-rbd.yaml file with the following content

apiVersion: apps/v1

kind: Deployment

metadata:

  name: check-snapshot-restore

spec:

  selector:

    matchLabels:

      app: check

  strategy:

    type: Recreate

  template:

    metadata:

      labels:

        app: check

    spec:

      containers:

        - image: alpine:3.8

          name: check

          command:

            - sh

            - -c

            - sleep 36000

          volumeMounts:

            - name: check-mysql-persistent-storage

              mountPath: /mnt

      volumes:

        - name: check-mysql-persistent-storage

          persistentVolumeClaim:

            claimName: rbd-pvc-restore

- Create the deployment

kubectl create -f restore-check-snapshot-rbd.yaml

- Check that the data exists

# kubectl exec check-snapshot-restore-64b85c5f88-hqgqr -- ls /mnt/test_snapshot

test.txt

# kubectl exec check-snapshot-restore-64b85c5f88-hqgqr -- cat /mnt/test_snapshot/test.txt

test for snapshot

Once the test passes, clean up. (The snapshotclass does not need to be deleted; later RBD snapshots can reuse it.)

# kubectl delete -f restore-check-snapshot-rbd.yaml

deployment.apps "check-snapshot-restore" deleted

# kubectl delete -f csi/rbd/pvc-restore.yaml -f csi/rbd/snapshot.yaml

persistentvolumeclaim "rbd-pvc-restore" deleted

volumesnapshot.snapshot.storage.k8s.io "rbd-pvc-snapshot" deleted

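PVC cloning

The CSI volume cloning feature adds support for specifying an existing PVC in the dataSource field, indicating that the user wants to clone that volume. A clone is defined as a duplicate of an existing Kubernetes volume and can be used like any standard volume. The only difference is that, at provisioning time, the backend device creates an exact copy of the specified volume instead of a "new" empty volume.

Cloning a block storage volume

Prerequisites:

- Kubernetes 1.16 or later

- Ceph CSI driver v3.0.0 or later

Create the volume clone

Create a pvc-clone.yaml file with the following content

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: rbd-pvc-clone

spec:

  storageClassName: rook-ceph-block # must match the StorageClass of the PVC being cloned

  dataSource:

    name: mysql-pv-claim # name of the PVC being cloned

    kind: PersistentVolumeClaim # kind of the volume being cloned

  accessModes:

    - ReadWriteOnce

  resources:

    requests:

      storage: 3Gi # must not be smaller than the source PVC

Clone the PVC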

# kubectl create -f pvc-clone.yaml

persistentvolumeclaim/rbd-pvc-clone created

List the newly created PVC

# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

mysql-pv-claim Bound pvc-b786a9c1-31c3-4344-b9cb-1a820ab9427a 3Gi RWO rook-ceph-block 5h52m

nginx-share-pvc Bound pvc-684bf6df-87ab-42f9-aac6-8262a8e7cc90 2Gi RWX rook-cephfs 6h24m

rbd-pvc-clone Bound pvc-93173263-0328-475a-9c6d-b2981a4fd134 3Gi RWO rook-ceph-block 23s

Verify the new PVC the same way as in the PVC snapshot section

Once the test passes, clean up

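Cleaning up the Ceph cluster

Official documentation: Cleaning up a Cluster

Delete all resources that were created, including Deployments, StatefulSets, DaemonSets, Pods, and so on

kubectl delete -f mysql.yaml -f wordpress.yaml

Manually delete any PVCs that still exist

kubectl delete -f pvc-clone.yaml

kubectl delete pvc xxx

After making sure that all PVCs and PVs are deleted, delete the Ceph block pool and the shared filesystem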
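Before removing the pool and filesystem, the "all PVs are gone" condition can be checked with a small filter. This is a sketch, not an official Rook step: `remaining_ceph_pvs` is a hypothetical helper, and it assumes two-column input produced by the `custom-columns` query shown in the comment.

```shell
# Print PVs (name and storage class) that still belong to the storage
# classes given as arguments. Input is expected in the format printed by:
#   kubectl get pv --no-headers \
#     -o custom-columns=NAME:.metadata.name,SC:.spec.storageClassName
remaining_ceph_pvs() {
  # Pad with spaces so that only whole class names match
  classes=" $* "
  awk -v classes="$classes" 'index(classes, " " $2 " ") > 0 { print $1, $2 }'
}

# Usage against a live cluster (not executed here):
#   kubectl get pv --no-headers \
#     -o custom-columns=NAME:.metadata.name,SC:.spec.storageClassName \
#     | remaining_ceph_pvs rook-ceph-block rook-cephfs
```

If this prints anything, those volumes still reference the Ceph storage classes and should be removed before continuing.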

kubectl delete -n rook-ceph cephblockpools replicapool

kubectl delete -n rook-ceph cephfilesystem myfs

Clean up the StorageClasses

kubectl delete sc rook-ceph-block rook-cephfs

Clean up the Ceph cluster

kubectl -n rook-ceph delete cephcluster rook-ceph

Delete the Rook resources

kubectl delete -f operator.yaml -f common.yaml -f crds.yaml

If deletion hangs, see the Rook Troubleshooting guide

Clean up the data directories and disks; see the official documentation: Delete the data on hosts

- Run the following command to delete the /var/lib/rook directory on every host

for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02;do ssh root@$i "rm -rf /var/lib/rook";done

- Reset the data disks. The following steps return the disks that Rook used for OSDs on each node to a usable state

DISK="/dev/sdb"

# Zap the disk to a fresh, usable state (zap-all is important, b/c MBR has to be clean)

# You will have to run this step for all disks.

sgdisk --zap-all $DISK

# Clean hdds with dd

dd if=/dev/zero of="$DISK" bs=1M count=100 oflag=direct,dsync

# Clean disks such as ssd with blkdiscard instead of dd

blkdiscard $DISK

# These steps only have to be run once on each node

# If rook sets up osds using ceph-volume, teardown leaves some devices mapped that lock the disks.

ls /dev/mapper/ceph-* | xargs -I% -- dmsetup remove %

# ceph-volume setup can leave ceph-<UUID> directories in /dev and /dev/mapper (unnecessary clutter)

rm -rf /dev/ceph-*

rm -rf /dev/mapper/ceph--*

# Inform the OS of partition table changes

partprobe $DISK
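The script above lists both dd (for HDDs) and blkdiscard (for SSDs). One way to pick between them per disk is the kernel's `queue/rotational` flag in sysfs. A minimal sketch under assumptions: `wipe_method_for` is a hypothetical helper, and virtual or RAID-backed disks may report a flag that does not match the physical media, so treat the result as a hint.

```shell
# Print "dd" for rotational disks and "blkdiscard" for non-rotational ones,
# based on the kernel's queue/rotational flag (1 = rotational, 0 = not).
# $1 = device path such as /dev/sdb
# $2 = sysfs block root (default /sys/block; overridable only so the
#      function can be exercised without real hardware)
wipe_method_for() {
  dev="$(basename "$1")"
  flag_file="${2:-/sys/block}/$dev/queue/rotational"
  if [ ! -r "$flag_file" ]; then
    echo "cannot read $flag_file" >&2
    return 1
  fi
  if [ "$(cat "$flag_file")" = "1" ]; then
    echo dd
  else
    echo blkdiscard
  fi
}

# Usage on a node (not executed here):
#   wipe_method_for /dev/sdb
```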