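Architecture and environment

This guide demonstrates a binary (non-kubeadm) installation of a highly available Kubernetes 1.21.x cluster on CentOS 7, using containerd as the container runtime in place of Docker.

The installation uses five Linux servers running CentOS 7.9: three Master nodes and two worker Nodes. The two worker Nodes have identical configurations.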

| Host | IP address | OS version | Role |
|---|---|---|---|
| k8s-master-01 | 192.168.55.111 | CentOS 7.9 | master |
| k8s-master-02 | 192.168.55.112 | CentOS 7.9 | master |
| k8s-master-03 | 192.168.55.113 | CentOS 7.9 | master |
| k8s-node-01 | 192.168.55.114 | CentOS 7.9 | worker |
| k8s-node-02 | 192.168.55.115 | CentOS 7.9 | worker |
| k8s-master-lb | 192.168.55.110 | CentOS 7.9 | LB (VIP). The VIP does not consume a machine; in a non-HA cluster this IP is the IP of k8s-master-01 |

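Note: the VIP (virtual IP) must not collide with an address already in use on your internal network. Ping it first; it is usable only if there is no reply. The VIP must be in the same LAN as the hosts. On a public cloud, the VIP is the address of the cloud load balancer, for example an Alibaba Cloud SLB address or a Tencent Cloud CLB address; in both cases use an internal (private-network) load balancer.

- Kubernetes node (host) network: 192.168.55.0/24

- Kubernetes Service network: 10.96.0.0/12

- Kubernetes Pod network: 172.16.0.0/12

Note: the three networks above must not overlap.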
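The non-overlap requirement can be checked mechanically before anything is installed. The following is a minimal sketch in bash; the helper names `ip_to_int`, `cidr_range`, and `cidr_overlap` are hypothetical and not part of any tool used in this guide.

```shell
#!/usr/bin/env bash
# Sketch: detect overlapping IPv4 CIDR blocks with plain bash arithmetic.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 10.96.0.0/12.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/} base mask
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  echo "$(( base & mask )) $(( (base & mask) | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 (true) when the two CIDR blocks share at least one address.
cidr_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_range "$1")"
  read -r s2 e2 <<< "$(cidr_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Check every pair of the three networks used in this guide.
for pair in "192.168.55.0/24 10.96.0.0/12" \
            "192.168.55.0/24 172.16.0.0/12" \
            "10.96.0.0/12 172.16.0.0/12"; do
  read -r x y <<< "$pair"
  if cidr_overlap "$x" "$y"; then
    echo "CONFLICT: $x overlaps $y"
  else
    echo "ok: $x and $y are disjoint"
  fi
done
```

If you pick different Service or Pod ranges, substitute them in the loop and rerun the check.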

Server initialization

Install containerd on the nodes

Configure kernel modules and kernel parameters on all nodes

cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

# Set the required sysctl parameters; these persist across reboots.

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

# Apply the sysctl parameters without rebooting

sudo sysctl --system

Add the Docker yum repository on all nodes

yum install -y yum-utils

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

Install containerd on all nodes

yum install -y containerd.io

Configure containerd by generating the default configuration file

mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

Set the cgroup driver to systemd

[plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc]
  ...
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = true

Configure registry mirrors

[plugins."io.containerd.grpc.v1.cri".registry]
  [plugins."io.containerd.grpc.v1.cri".registry.mirrors]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."docker.io"]
      endpoint = ["https://1nj0zren.mirror.aliyuncs.com"]
    [plugins."io.containerd.grpc.v1.cri".registry.mirrors."habor.china-snow.net"]
      endpoint = ["https://habor.china-snow.net"]
  [plugins."io.containerd.grpc.v1.cri".registry.configs]
    [plugins."io.containerd.grpc.v1.cri".registry.configs."habor.china-snow.net".tls]
      insecure_skip_verify = true

Change sandbox_image to the Alibaba Cloud mirror

[plugins]
  [plugins."io.containerd.gc.v1.scheduler"]
    ...
  [plugins."io.containerd.grpc.v1.cri"]
    disable_tcp_service = true
    ...
    sandbox_image = "registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2"

Copy the edited configuration file to the other nodes

for i in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02;do scp /etc/containerd/config.toml root@$i:/etc/containerd/config.toml; done
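The cgroup-driver and sandbox_image edits described above can also be scripted, which is convenient if you regenerate config.toml before running the copy loop. This is only a sketch: `patch_containerd_config` is a hypothetical helper name, and it assumes the generated file already contains a `SystemdCgroup = false` line and a `sandbox_image = ...` line. Some containerd versions omit the former from the default config, in which case it has to be added by hand.

```shell
#!/usr/bin/env bash
# Sketch: apply the two config.toml edits with GNU sed instead of an editor.
# Usage: patch_containerd_config CONFIG_FILE [PAUSE_IMAGE]
patch_containerd_config() {
  local cfg=$1
  local pause_image=${2:-registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2}

  # Switch the runc cgroup driver to systemd; a no-op if the line is absent.
  sed -i 's/^\([[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*\)false/\1true/' "$cfg"

  # Point sandbox_image at the mirror, keeping the original indentation.
  # "|" is the sed delimiter because the image reference contains "/".
  sed -i "s|^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*|\1\"${pause_image}\"|" "$cfg"
}

# Example (commented out so the sketch has no side effects when sourced):
# patch_containerd_config /etc/containerd/config.toml
```

Check the result with `grep -E 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml` before distributing the file.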

Start containerd on all nodes and enable it at boot

systemctl enable --now containerd

Install HAProxy and Keepalived

High availability is provided here by HAProxy + Keepalived, both of which run as daemons on every Master node.

Run the following command on all Master nodes to install HAProxy and Keepalived.

yum install -y haproxy keepalived

Configure HAProxy

Configure HAProxy on all Master nodes (see the HAProxy documentation for details; the HAProxy configuration is identical on every Master node).

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy_ori.cfg

cat > /etc/haproxy/haproxy.cfg <<EOF

global

log 127.0.0.1 local0 err

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

ulimit-n 16384

user haproxy

group haproxy

stats timeout 30s

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

timeout http-request 15s

timeout queue 1m

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-keep-alive 15s

timeout check 15s

maxconn 3000

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend K8S-master

backend static

balance roundrobin

server static 127.0.0.1:4331 check

backend K8S-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master-01 192.168.55.111:6443 check

server k8s-master-02 192.168.55.112:6443 check

server k8s-master-03 192.168.55.113:6443 check

EOF

Configure Keepalived

Configure Keepalived on all Master nodes. Adjust interface (the service NIC), priority (any value, as long as it differs between nodes), and mcast_src_ip (the node's own IP address). See the Keepalived documentation for details.

Create the Keepalived configuration file

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived_ori.conf

export INTERFACE=$(ip route show |grep default |cut -d ' ' -f5)

export IPADDR=$(ifconfig |grep -A1 $INTERFACE |grep inet |awk '{print $2}')

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ${INTERFACE}

mcast_src_ip ${IPADDR}

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

192.168.55.110

}

track_script {

chk_apiserver

}

}

EOF

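# Change the priority value in the configuration file on the other master nodes

# On k8s-master-02, set priority 101

# On k8s-master-03, set priority 102

Note: the health check above is meant to stay disabled for now; enable it once the cluster has been set up.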
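Since the generated keepalived.conf is identical on every Master node except for the priority, the per-node change can be applied with a one-line substitution. A minimal sketch, assuming GNU sed; `set_keepalived_priority` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the "priority N" line of a keepalived.conf in place.
# Usage: set_keepalived_priority CONFIG_FILE NEW_PRIORITY
set_keepalived_priority() {
  local cfg=$1 prio=$2
  # Keep the leading indentation and the keyword, replace only the number.
  sed -i "s/^\([[:space:]]*priority[[:space:]][[:space:]]*\)[0-9][0-9]*/\1${prio}/" "$cfg"
}

# Examples (commented out so the sketch has no side effects when sourced):
# on k8s-master-02: set_keepalived_priority /etc/keepalived/keepalived.conf 101
# on k8s-master-03: set_keepalived_priority /etc/keepalived/keepalived.conf 102
```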

Create the Keepalived health check script

cat > /etc/keepalived/check_apiserver.sh <<EOF

#!/bin/bash

err=0

for k in \$(seq 1 3)

do

check_code=\$(pgrep haproxy)

if [[ \$check_code == "" ]]; then

err=\$(expr \$err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ \$err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_apiserver.sh

Start HAProxy and Keepalived

Run the following commands to start haproxy and keepalived and enable them at boot

systemctl enable --now haproxy.service

systemctl enable --now keepalived.service

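Generate certificates

With a kubeadm installation the certificates are generated automatically during initialization. With a binary installation they must be generated manually, using either OpenSSL or cfssl. cfssl is an open-source certificate management tool that generates certificates from JSON files and is more convenient to use than OpenSSL.

Prepare the cfssl certificate tooling

Install the certificate tools cfssl and cfssljson

mkdir -p ~/cfssl && cd ~/cfssl

# Download the tools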

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64

# Make the binaries executable

chmod +x cfssljson_1.5.0_linux_amd64 cfssl_1.5.0_linux_amd64

# Move the binaries onto the PATH and rename them

mv cfssl_1.5.0_linux_amd64 /usr/local/bin/cfssl

mv cfssljson_1.5.0_linux_amd64 /usr/local/bin/cfssljson

Create the certificate directories

mkdir -p /usr/local/etcd/ssl

mkdir -p /usr/local/kubernetes/ssl

Generate the etcd certificates

Create etcd-ca-csr.json with the following content:

cat > etcd-ca-csr.json <<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

Create etcd-csr.json with the following content:

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

Create ca-config.json with the following content:

cat > ca-config.json <<EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

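EOF

Generate the etcd certificates. A CSR file is a certificate signing request; it carries identifying fields such as domain names, company, and organizational unit.

cd ~/cfssl

# Generate the etcd CA certificate and its private key

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /usr/local/etcd/ssl/etcd-ca

# Generate the etcd certificate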

cfssl gencert \

-ca=/usr/local/etcd/ssl/etcd-ca.pem \

-ca-key=/usr/local/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,\

192.168.55.111,\

192.168.55.112,\

192.168.55.113,\

192.168.55.114,\

192.168.55.115 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /usr/local/etcd/ssl/etcd

Note: -hostname must list the addresses of all etcd nodes. You can also include a few reserved addresses to make later expansion of the etcd cluster easier.
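One way to keep the -hostname list manageable is to build it from arrays, so reserved addresses can be added without retyping the continuation lines. This is a sketch only: `join_hosts` is a hypothetical helper, and the two reserved addresses are made-up examples rather than part of this guide's environment.

```shell
#!/usr/bin/env bash
# Sketch: assemble the comma-separated value for cfssl's -hostname flag.

# Join all arguments with commas.
join_hosts() {
  local IFS=,
  echo "$*"
}

# Addresses used in this guide: loopback plus every etcd node.
ETCD_HOSTS=(127.0.0.1 192.168.55.111 192.168.55.112 192.168.55.113 192.168.55.114 192.168.55.115)

# Hypothetical spare addresses reserved for future etcd members.
RESERVED_HOSTS=(192.168.55.116 192.168.55.117)

ETCD_SANS=$(join_hosts "${ETCD_HOSTS[@]}" "${RESERVED_HOSTS[@]}")
echo "-hostname=${ETCD_SANS}"
```

The resulting string can be passed as `-hostname="${ETCD_SANS}"` in the cfssl gencert command above.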

Generate the Kubernetes root CA certificate

Create ca-csr.json with the following content:

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

Generate the Kubernetes CA certificate and CA key

cfssl gencert -initca ca-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/ca

Generate the APIServer certificate

Create apiserver-csr.json with the following content:

cat > apiserver-csr.json <<EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the apiserver certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-hostname=10.96.0.1,\

192.168.55.110,\

127.0.0.1,\

kubernetes,\

kubernetes.default,\

kubernetes.default.svc,\

kubernetes.default.svc.cluster,\

kubernetes.default.svc.cluster.local \

-profile=kubernetes \

apiserver-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/apiserver

10.96.0.0/12 is the Kubernetes Service network. If you change the Service network, also replace 10.96.0.1 with the first IP address of the new range. 192.168.55.110 is the VIP of the HA cluster; for a non-HA cluster, replace it with the IP address of k8s-master-01.
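The "first IP address of the range" is the network address plus one. The sketch below computes it for an arbitrary IPv4 CIDR with bash arithmetic; `first_service_ip` is a hypothetical helper name introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print the first usable address of an IPv4 CIDR (network address + 1).
# Usage: first_service_ip 10.96.0.0/12
first_service_ip() {
  local ip=${1%/*} prefix=${1#*/} a b c d
  IFS=. read -r a b c d <<< "$ip"
  local base=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  # Network mask for the prefix length, truncated to 32 bits.
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local first=$(( (base & mask) + 1 ))
  echo "$(( (first >> 24) & 255 )).$(( (first >> 16) & 255 )).$(( (first >> 8) & 255 )).$(( first & 255 ))"
}

first_service_ip 10.96.0.0/12   # prints 10.96.0.1
```

The printed address is the one that belongs in the -hostname list, and it is also the ClusterIP that the default `kubernetes` Service receives.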

Generate the apiserver aggregation-layer certificates (used by the requestheader-client-xxx and requestheader-allowed-xxx flags of the aggregator).

- Create front-proxy-ca-csr.json with the following content:

cat > front-proxy-ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

- Create front-proxy-client-csr.json with the following content:

cat > front-proxy-client-csr.json <<EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

- Generate the apiserver aggregation-layer certificates

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/front-proxy-ca

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/front-proxy-ca.pem \

-ca-key=/usr/local/kubernetes/ssl/front-proxy-ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

front-proxy-client-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/front-proxy-client

Generate the ControllerManager certificate

Create manager-csr.json with the following content:

cat > manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the controller-manager certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/controller-manager

Generate the Scheduler certificate

Create scheduler-csr.json with the following content:

cat > scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the scheduler certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/scheduler

Generate the Admin certificate

Create admin-csr.json with the following content:

cat > admin-csr.json <<EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

Generate the admin certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/admin

Generate the ServiceAccount key

Create sa.key

openssl genrsa -out /usr/local/kubernetes/ssl/sa.key 2048

# Output:

Generating RSA private key, 2048 bit long modulus

....+++

.......................................................+++

e is 65537 (0x10001)

Create sa.pub

openssl rsa -in /usr/local/kubernetes/ssl/sa.key -pubout -out /usr/local/kubernetes/ssl/sa.pub

# Output:

writing RSA key

Install etcd

Copy the binaries

All of the following steps are performed on k8s-master-01.

Download the etcd binary package

wget https://github.com/etcd-io/etcd/releases/download/v3.4.13/etcd-v3.4.13-linux-amd64.tar.gz

Extract the archive

tar zxvf etcd-v3.4.13-linux-amd64.tar.gz

Create the installation directories

mkdir -p /usr/local/etcd/{bin,cfg}

Copy the binaries into the installation directory

cp etcd-v3.4.13-linux-amd64/{etcd,etcdctl} /usr/local/etcd/bin/

Create the /opt/cni/bin directory on all nodes

for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02;do

ssh root@$i "mkdir -p /opt/cni/bin";

done

Create the etcd configuration file

On k8s-master-01, create the configuration file for that node's etcd member. When reusing it on other nodes, change the IP addresses and the name accordingly.

cat > /usr/local/etcd/cfg/etcd.config.yml << EOF

name: 'k8s-master-01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://192.168.55.111:2380'

listen-client-urls: 'https://192.168.55.111:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://192.168.55.111:2380'

advertise-client-urls: 'https://192.168.55.111:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master-01=https://192.168.55.111:2380,k8s-master-02=https://192.168.55.112:2380,k8s-master-03=https://192.168.55.113:2380,k8s-node-01=https://192.168.55.114:2380,k8s-node-02=https://192.168.55.115:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

  cert-file: '/usr/local/etcd/ssl/etcd.pem'

  key-file: '/usr/local/etcd/ssl/etcd-key.pem'

  client-cert-auth: true

  trusted-ca-file: '/usr/local/etcd/ssl/etcd-ca.pem'

  auto-tls: true

peer-transport-security:

  cert-file: '/usr/local/etcd/ssl/etcd.pem'

  key-file: '/usr/local/etcd/ssl/etcd-key.pem'

  peer-client-cert-auth: true

  trusted-ca-file: '/usr/local/etcd/ssl/etcd-ca.pem'

  auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

Configuration file parameter reference:

Register etcd as a system service

Create the etcd service unit file

# Create the service file

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/etcd/bin/etcd --config-file=/usr/local/etcd/cfg/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

Copy the files to the other nodes

Copy the /usr/local/etcd directory and the /usr/lib/systemd/system/etcd.service unit file created above to the other etcd nodes

for i in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02;do \

scp -r /usr/local/etcd root@$i:/usr/local/; \

scp -r /usr/lib/systemd/system/etcd.service root@$i:/usr/lib/systemd/system/etcd.service;

done

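Adjust the configuration file

On each of the other nodes, change the following settings in the configuration file:

- name: the node name; it must be unique within the cluster

- listen-peer-urls: change to the current node's IP address

- listen-client-urls: change to the current node's IP address

- initial-advertise-peer-urls: change to the current node's IP address

- advertise-client-urls: change to the current node's IP address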
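The five per-node changes listed above can be generated from the k8s-master-01 file instead of being edited by hand. The sketch below assumes GNU sed and the flat key layout of the etcd.config.yml shown earlier; `render_etcd_config` is a hypothetical helper name. It leaves initial-cluster and the 127.0.0.1 listener untouched.

```shell
#!/usr/bin/env bash
# Sketch: print a copy of an etcd config rewritten for another node.
# Usage: render_etcd_config SRC_FILE NODE_NAME NODE_IP [SOURCE_IP]
render_etcd_config() {
  local src=$1 name=$2 ip=$3 from=${4:-192.168.55.111}
  # The dots in $from are regex wildcards here; that is loose but
  # acceptable for a sketch because each rule is limited to a single key.
  sed -e "s|^name: .*|name: '${name}'|" \
      -e "/^listen-peer-urls:/ s|${from}|${ip}|g" \
      -e "/^listen-client-urls:/ s|${from}|${ip}|g" \
      -e "/^initial-advertise-peer-urls:/ s|${from}|${ip}|g" \
      -e "/^advertise-client-urls:/ s|${from}|${ip}|g" \
      "$src"
}

# Example (commented out; writes nothing unless redirected):
# render_etcd_config /usr/local/etcd/cfg/etcd.config.yml k8s-master-02 192.168.55.112
```

Redirect the output to a temporary file and copy it to the target node as /usr/local/etcd/cfg/etcd.config.yml.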

Start the service and enable it at boot

Run the following commands on all etcd nodes:

systemctl daemon-reload

systemctl enable --now etcd

Check cluster health

Run the following command to view the etcd cluster status

export ETCDCTL_API=3

/usr/local/etcd/bin/etcdctl \

--cacert=/usr/local/etcd/ssl/etcd-ca.pem \

--cert=/usr/local/etcd/ssl/etcd.pem \

--key=/usr/local/etcd/ssl/etcd-key.pem \

--endpoints="https://192.168.55.111:2379,\

https://192.168.55.112:2379,\

https://192.168.55.113:2379,\

https://192.168.55.114:2379,\

https://192.168.55.115:2379" endpoint status --write-out=table

# Output similar to the following is returned

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | IS LEARNER | RAFT TERM | RAFT INDEX | RAFT APPLIED INDEX | ERRORS |

+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

| https://192.168.55.111:2379 | 4544cd0b6bd8b29f | 3.4.13 | 20 kB | false | false | 3 | 13 | 13 | |

| https://192.168.55.112:2379 | 1386be870b3b2d18 | 3.4.13 | 20 kB | true | false | 3 | 13 | 13 | |

| https://192.168.55.113:2379 | f3bee1bd195739b7 | 3.4.13 | 33 kB | false | false | 3 | 13 | 13 | |

| https://192.168.55.114:2379 | 3f6247e505a830a1 | 3.4.13 | 20 kB | false | false | 3 | 13 | 13 | |

| https://192.168.55.115:2379 | f5b52b969235fd4f | 3.4.13 | 20 kB | false | false | 3 | 13 | 13 | |

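+---------------------------+------------------+---------+---------+-----------+------------+-----------+------------+--------------------+--------+

Note: if a node fails to start, clear its startup data and start it again (see the referenced article on recovering an etcd cluster after a node goes down).

The specific operation is: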

rm -rf /var/lib/etcd/*

Deploy the Kubernetes components

All of the following steps are performed on k8s-master-01.

Download the Kubernetes binary package

wget https://dl.k8s.io/v1.21.3/kubernetes-server-linux-amd64.tar.gz

Extract the archive

tar zxvf kubernetes-server-linux-amd64.tar.gz

Create the installation directories

mkdir -p /usr/local/kubernetes/{bin,cfg,manifests}

Copy the binaries into the installation directory

cp kubernetes/server/bin/kube{-apiserver,-controller-manager,-scheduler,-proxy,let} /usr/local/kubernetes/bin/

cp kubernetes/server/bin/kubectl /usr/local/bin/

Deploy the Master components

To keep things simple, all of the following steps are performed only on k8s-master-01; the generated files are copied to the other Master nodes at the end.

Configure kube-apiserver

Create the apiserver service unit file. Note: for a non-HA cluster, change 192.168.55.110 to the address of k8s-master-01.

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-apiserver \\

--v=2 \\

--logtostderr=true \\

--allow-privileged=true \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=192.168.55.110 \\

--service-cluster-ip-range=10.96.0.0/12 \\

--service-node-port-range=30000-32767 \\

--etcd-servers=https://192.168.55.111:2379,https://192.168.55.112:2379,https://192.168.55.113:2379,https://192.168.55.114:2379,https://192.168.55.115:2379 \\

--etcd-cafile=/usr/local/etcd/ssl/etcd-ca.pem \\

--etcd-certfile=/usr/local/etcd/ssl/etcd.pem \\

--etcd-keyfile=/usr/local/etcd/ssl/etcd-key.pem \\

--client-ca-file=/usr/local/kubernetes/ssl/ca.pem \\

--tls-cert-file=/usr/local/kubernetes/ssl/apiserver.pem \\

--tls-private-key-file=/usr/local/kubernetes/ssl/apiserver-key.pem \\

--kubelet-client-certificate=/usr/local/kubernetes/ssl/apiserver.pem \\

--kubelet-client-key=/usr/local/kubernetes/ssl/apiserver-key.pem \\

--service-account-key-file=/usr/local/kubernetes/ssl/sa.pub \\

--service-account-signing-key-file=/usr/local/kubernetes/ssl/sa.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\

--authorization-mode=Node,RBAC \\

--enable-bootstrap-token-auth=true \\

--requestheader-client-ca-file=/usr/local/kubernetes/ssl/front-proxy-ca.pem \\

--proxy-client-cert-file=/usr/local/kubernetes/ssl/front-proxy-client.pem \\

--proxy-client-key-file=/usr/local/kubernetes/ssl/front-proxy-client-key.pem \\

--requestheader-allowed-names=front-proxy-client \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true \\

--feature-gates=EphemeralContainers=true

# --token-auth-file=/usr/local/kubernetes/cfg/token.csv

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

Configure kube-controller-manager

Create the controller-manager service unit file

cat >/usr/lib/systemd/system/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-controller-manager \\

--v=2 \\

--logtostderr=true \\

--address=127.0.0.1 \\

--root-ca-file=/usr/local/kubernetes/ssl/ca.pem \\

--cluster-signing-cert-file=/usr/local/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/usr/local/kubernetes/ssl/ca-key.pem \\

--service-account-private-key-file=/usr/local/kubernetes/ssl/sa.key \\

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig \\

--leader-elect=true \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=40s \\

--node-monitor-period=5s \\

--pod-eviction-timeout=2m0s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--allocate-node-cidrs=true \\

--cluster-cidr=172.16.0.0/12 \\

--requestheader-client-ca-file=/usr/local/kubernetes/ssl/front-proxy-ca.pem \\

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Configure kube-scheduler

Create the scheduler service unit file

cat >/usr/lib/systemd/system/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-scheduler \\

--v=2 \\

--logtostderr=true \\

--address=127.0.0.1 \\

--leader-elect=true \\

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Generate the kubeconfig files

Generate the kubeconfig file for the controller-manager.

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.55.110:8443 \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/usr/local/kubernetes/ssl/controller-manager.pem \

--client-key=/usr/local/kubernetes/ssl/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig

Generate the kubeconfig file for the scheduler

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.55.110:8443 \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/usr/local/kubernetes/ssl/scheduler.pem \

--client-key=/usr/local/kubernetes/ssl/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig

Generate the kubeconfig file for admin

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.55.110:8443 \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config set-credentials kubernetes-admin \

--client-certificate=/usr/local/kubernetes/ssl/admin.pem \

--client-key=/usr/local/kubernetes/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config use-context kubernetes-admin@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig

Note: for a non-HA cluster, replace 192.168.55.110 with the address of k8s-master-01 and 8443 with the apiserver port (6443 by default).
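The three kubeconfig files above are produced by the same four kubectl config subcommands and differ only in the user name, the certificate pair, the output file, and (for non-HA clusters) the server URL. A small wrapper makes that explicit. This is a sketch under the paths used in this guide; `gen_kubeconfig` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: generate a kubeconfig with embedded client certificates.
# Usage: gen_kubeconfig USER CERT_BASENAME OUT_FILE [SERVER_URL]
gen_kubeconfig() {
  local user=$1 cert=$2 out=$3
  local server=${4:-https://192.168.55.110:8443}   # VIP:port; use https://<master-01>:6443 without HA
  local ssl=/usr/local/kubernetes/ssl

  kubectl config set-cluster kubernetes \
    --certificate-authority="${ssl}/ca.pem" \
    --embed-certs=true \
    --server="${server}" \
    --kubeconfig="${out}" &&
  kubectl config set-credentials "${user}" \
    --client-certificate="${ssl}/${cert}.pem" \
    --client-key="${ssl}/${cert}-key.pem" \
    --embed-certs=true \
    --kubeconfig="${out}" &&
  kubectl config set-context "${user}@kubernetes" \
    --cluster=kubernetes \
    --user="${user}" \
    --kubeconfig="${out}" &&
  kubectl config use-context "${user}@kubernetes" \
    --kubeconfig="${out}"
}

# Equivalent to the three command groups above (commented out to avoid side effects):
# gen_kubeconfig system:kube-controller-manager controller-manager /usr/local/kubernetes/cfg/controller-manager.kubeconfig
# gen_kubeconfig system:kube-scheduler scheduler /usr/local/kubernetes/cfg/scheduler.kubeconfig
# gen_kubeconfig kubernetes-admin admin /usr/local/kubernetes/cfg/admin.kubeconfig
```

For a non-HA cluster, pass the server URL as the fourth argument instead of editing each command.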

Start the master components

Copy the files to the other Master nodes

for i in k8s-master-02 k8s-master-03;do

scp /usr/lib/systemd/system/kube-{apiserver,controller-manager,scheduler}.service root@$i:/usr/lib/systemd/system/;

scp -r /usr/local/kubernetes/ root@$i:/usr/local/;

done

Start the master components

systemctl daemon-reload

systemctl enable --now kube-apiserver.service

systemctl enable --now kube-controller-manager.service

systemctl enable --now kube-scheduler.service

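Configure kubectl

Perform the following steps only on nodes where kubectl is installed. kubectl does not have to run inside the cluster; it can be installed on any server that can reach the cluster.

Create the kubectl configuration file

mkdir -p ~/.kube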

cp /usr/local/kubernetes/cfg/admin.kubeconfig ~/.kube/config

Configure kubectl subcommand completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

echo "source ~/.kube/completion.bash.inc" >> ~/.bash_profile

source $HOME/.bash_profile

Test the kubectl command

# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-4 Healthy {"health":"true"}

etcd-0 Healthy {"health":"true"}

etcd-3 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

# kubectl cluster-info

Kubernetes master is running at https://192.168.55.110:8443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11h

If you see the error "Unable to connect to the server: net/http: TLS handshake timeout", check whether a proxy is enabled

echo $http_proxy $https_proxy

# Unset the proxy

unset https_proxy

unset http_proxy

Configure TLS Bootstrapping

Create the bootstrap configuration on k8s-master-01. Note: for a non-HA cluster, replace 192.168.55.110 with the address of k8s-master-01 and 8443 with the apiserver port (6443 by default).

Generate the bootstrap-kubelet.kubeconfig file

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://192.168.55.110:8443 \

--kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \

kubectl config set-credentials tls-bootstrap-token-user \

--token=c8ad9c.2e4d610cf3e7426e \

--kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \

kubectl config set-context tls-bootstrap-token-user@kubernetes \

--cluster=kubernetes \

--user=tls-bootstrap-token-user \

--kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \

kubectl config use-context tls-bootstrap-token-user@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Note: if you change token-id and token-secret in bootstrap.secret.yaml, keep the strings consistent and of the same length: the token-id must match the suffix of the Secret name (bootstrap-token-c8ad9c), and the token in the previous command (c8ad9c.2e4d610cf3e7426e) must match the values you set.

Create bootstrap.secret.yaml with the following content

apiVersion: v1
kind: Secret
metadata:
  name: bootstrap-token-c8ad9c
  namespace: kube-system
type: bootstrap.kubernetes.io/token
stringData:
  description: "The default bootstrap token generated by 'kubelet '."
  token-id: c8ad9c
  token-secret: 2e4d610cf3e7426e
  usage-bootstrap-authentication: "true"
  usage-bootstrap-signing: "true"
  auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubelet-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:node-bootstrapper
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-bootstrap
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:nodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:bootstrappers:default-node-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: node-autoapprove-certificate-rotation
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:nodes
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kube-apiserver

Create the bootstrap resources

kubectl create -f bootstrap.secret.yaml
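Because the token appears in three places (the kubeconfig credentials, the Secret name, and the token-id/token-secret fields), a quick format check helps catch mismatches before a kubelet fails to register. The sketch below relies on the documented bootstrap token format of six lowercase alphanumeric characters, a dot, and sixteen more; `valid_bootstrap_token` and `token_secret_name` are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Sketch: validate a bootstrap token and derive the matching Secret name.

# Return 0 when the token has the form [a-z0-9]{6}.[a-z0-9]{16}.
valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# Print the Secret name that belongs to a token: bootstrap-token-<token-id>.
token_secret_name() {
  echo "bootstrap-token-${1%%.*}"
}

TOKEN="c8ad9c.2e4d610cf3e7426e"   # the token used in this guide
if valid_bootstrap_token "$TOKEN"; then
  echo "token ok, Secret name: $(token_secret_name "$TOKEN")"
else
  echo "token has the wrong format" >&2
fi
```

If you replace the token, run the check with the new value and make sure the printed Secret name matches metadata.name in bootstrap.secret.yaml.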

部署 Worker 节点

所有 Worker 节点配置 Kubelet,如果 Master 节点也需要运行 Pod(在生产环境中不建议,在测试环境中为了节省资源可以运行 Pod),同样需要配置 Kubelet,Master 节点与 Worker 节点的 Kubelet 配置唯一的区别是 Master 节点的 –node-labels 为 node-role.kubernetes.io/master=’’,Worker 节点的为 node-role.kubernetes.io/node=’’。因为 Master 节点己经有证书,所以就无需复制证书,直接创建 Kubelet 的配置文件即可。

Configure kubelet

Create the application directories on the worker nodes and copy the binaries:

for i in k8s-node-01 k8s-node-02; do
  ssh root@$i "mkdir -p /usr/local/kubernetes/{bin,cfg,ssl,manifests}"
  scp ~/kubernetes/server/bin/{kubelet,kube-proxy} root@$i:/usr/local/kubernetes/bin/
done

Copy the certificates and configuration files to the other nodes:

for i in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02; do
  scp /usr/local/kubernetes/ssl/{ca.pem,ca-key.pem,front-proxy-ca.pem} root@$i:/usr/local/kubernetes/ssl/
  scp /usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig root@$i:/usr/local/kubernetes/cfg/
done

Create the kubelet service unit file on every node that runs kubelet:

for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02; do
  ssh root@$i "
cat >/usr/lib/systemd/system/kubelet.service <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
ExecStart=/usr/local/kubernetes/bin/kubelet
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF"
done

Create the kubelet service drop-in configuration file for all worker nodes:

mkdir -p /etc/systemd/system/kubelet.service.d

cat >/etc/systemd/system/kubelet.service.d/10-kubelet.conf <<EOF
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig --kubeconfig=/usr/local/kubernetes/cfg/kubelet.kubeconfig"
Environment="KUBELET_SYSTEM_ARGS=--network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin"
Environment="KUBELET_CONFIG_ARGS=--config=/usr/local/kubernetes/cfg/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2"
Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node='' --container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd"
ExecStart=
ExecStart=/usr/local/kubernetes/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS
EOF

# Copy the drop-in directory to the other nodes
for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02; do scp -r /etc/systemd/system/kubelet.service.d/ root@$i:/etc/systemd/system/; done

Note the --node-labels value above: the intent is for master nodes to carry node-role.kubernetes.io/master='' instead. Be aware that kubelet 1.16 and later refuses node-role.kubernetes.io/* labels passed through --node-labels, so on this 1.21 cluster that label has to be set afterwards with kubectl label node.

Create the kubelet configuration file for all worker nodes:

cat >/usr/local/kubernetes/cfg/kubelet-conf.yml <<EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /usr/local/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /usr/local/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF

# Copy the configuration file to the other nodes
for i in k8s-master-01 k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02; do scp -r /usr/local/kubernetes/cfg/kubelet-conf.yml root@$i:/usr/local/kubernetes/cfg/; done

Start kubelet on all worker nodes:

systemctl daemon-reload
systemctl enable --now kubelet

Check the kubelet service status:

# systemctl status kubelet.service -l

● kubelet.service - Kubernetes Kubelet

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubelet.conf

Active: active (running) since Thu 2021-08-05 20:30:06 CST; 40s ago

Docs: https://github.com/kubernetes/kubernetes

Main PID: 1808 (kubelet)

Tasks: 14

Memory: 35.1M

CGroup: /system.slice/kubelet.service

└─1808 /usr/local/kubernetes/bin/kubelet --bootstrap-kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig --kubeconfig=/usr/local/kubernetes/cfg/kubelet.kubeconfig --config=/usr/local/kubernetes/cfg/kubelet-conf.yml --pod-infra-container-image=registry.cn-hangzhou.aliyuncs.com/google_containers/pause-amd64:3.2 --network-plugin=cni --cni-conf-dir=/etc/cni/net.d --cni-bin-dir=/opt/cni/bin --node-labels=node.kubernetes.io/node= --container-runtime=remote --container-runtime-endpoint=unix:///run/containerd/containerd.sock --cgroup-driver=systemd

Aug 05 20:30:18 k8s-node-01 kubelet[1808]: E0805 20:30:18.601212 1808 kubelet.go:1870] "Skipping pod synchronization" err="PLEG is not healthy: pleg has yet to be successful"

Aug 05 20:30:18 k8s-node-01 kubelet[1808]: I0805 20:30:18.628681 1808 kuberuntime_manager.go:1044] "Updating runtime config through cri with podcidr" CIDR="172.16.3.0/24"

Aug 05 20:30:18 k8s-node-01 kubelet[1808]: I0805 20:30:18.629835 1808 kubelet_network.go:76] "Updating Pod CIDR" originalPodCIDR="" newPodCIDR="172.16.3.0/24"

Aug 05 20:30:18 k8s-node-01 kubelet[1808]: E0805 20:30:18.630083 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

Aug 05 20:30:18 k8s-node-01 kubelet[1808]: I0805 20:30:18.728236 1808 reconciler.go:157] "Reconciler: start to sync state"

Aug 05 20:30:23 k8s-node-01 kubelet[1808]: E0805 20:30:23.526940 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

Aug 05 20:30:28 k8s-node-01 kubelet[1808]: E0805 20:30:28.527608 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

Aug 05 20:30:33 k8s-node-01 kubelet[1808]: E0805 20:30:33.528416 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

Aug 05 20:30:38 k8s-node-01 kubelet[1808]: E0805 20:30:38.529579 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

Aug 05 20:30:43 k8s-node-01 kubelet[1808]: E0805 20:30:43.530412 1808 kubelet.go:2211] "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized"

As shown above, at this stage it is normal for the log to contain only the message "Container runtime network not ready" networkReady="NetworkReady=false reason:NetworkPluginNotReady message:Network plugin returns error: cni plugin not initialized", because the CNI plugin (Calico) has not been installed yet.

Check the status of the cluster nodes:

# kubectl get nodes -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

k8s-master-01 NotReady <none> 116s v1.21.3 192.168.55.111 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9

k8s-master-02 NotReady <none> 112s v1.21.3 192.168.55.112 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9

k8s-master-03 NotReady <none> 110s v1.21.3 192.168.55.113 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9

k8s-node-01 NotReady <none> 109s v1.21.3 192.168.55.114 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9

k8s-node-02 NotReady <none> 108s v1.21.3 192.168.55.115 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9
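All five nodes are expected to stay NotReady until a CNI plugin is installed. To track this without reading the table by eye, the following sketch lists the nodes whose STATUS column is anything other than Ready. The helper name not_ready_nodes and the shortened sample table are illustrative only.

```shell
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS is not exactly "Ready" (this also flags values such as
# "Ready,SchedulingDisabled").
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Shortened sample so the sketch runs without a cluster.
sample='NAME            STATUS     ROLES    AGE    VERSION
k8s-master-01   NotReady   <none>   116s   v1.21.3
k8s-node-01     Ready      <none>   109s   v1.21.3'

printf '%s\n' "$sample" | not_ready_nodes
```

On a live cluster the same filter would be used as kubectl get nodes | not_ready_nodes; an empty result means every node is Ready.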

Deploy kube-proxy

Run the following on master-01. Note: if this is not a high-availability cluster, replace 192.168.55.110 with the address of master-01 and 8443 with the apiserver port, which defaults to 6443.

kubectl -n kube-system create serviceaccount kube-proxy

kubectl create clusterrolebinding system:kube-proxy \
  --clusterrole system:node-proxier \
  --serviceaccount kube-system:kube-proxy

SECRET=$(kubectl -n kube-system get sa/kube-proxy \
  --output=jsonpath='{.secrets[0].name}')

JWT_TOKEN=$(kubectl -n kube-system get secret/$SECRET \
  --output=jsonpath='{.data.token}' | base64 -d)

kubectl config set-cluster kubernetes \
  --certificate-authority=/usr/local/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://192.168.55.110:8443 \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config set-credentials kubernetes \
  --token=${JWT_TOKEN} \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config set-context kubernetes \
  --cluster=kubernetes \
  --user=kubernetes \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config use-context kubernetes \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig

Create the kube-proxy service unit file:

cat >/usr/lib/systemd/system/kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/kubernetes/bin/kube-proxy \\
  --config=/usr/local/kubernetes/cfg/kube-proxy.conf \\
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

Create the kube-proxy configuration file. If you changed the cluster Pod CIDR, change the clusterCIDR: 172.16.0.0/12 parameter to match.

cat >/usr/local/kubernetes/cfg/kube-proxy.conf <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /usr/local/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 172.16.0.0/12
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF

Copy the kube-proxy files to the other nodes:

for i in k8s-master-02 k8s-master-03 k8s-node-01 k8s-node-02; do
  scp /usr/local/kubernetes/cfg/kube-proxy.{conf,kubeconfig} root@$i:/usr/local/kubernetes/cfg/
  scp /usr/lib/systemd/system/kube-proxy.service root@$i:/usr/lib/systemd/system/kube-proxy.service
done

Start kube-proxy on all nodes:

systemctl daemon-reload
systemctl enable --now kube-proxy.service
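Because clusterCIDR in kube-proxy.conf has to follow the Pod CIDR, a quick comparison before starting the service can catch a forgotten edit. This is a minimal sketch: cluster_cidr_of and check_cluster_cidr are hypothetical helper names, and the demo runs against a throwaway file rather than the real /usr/local/kubernetes/cfg/kube-proxy.conf.

```shell
# Print the clusterCIDR value found in a kube-proxy configuration file.
cluster_cidr_of() {
  awk '$1 == "clusterCIDR:" { print $2; exit }' "$1"
}

# Return 0 when the file's clusterCIDR equals the expected Pod CIDR.
check_cluster_cidr() {
  local file="$1" expected="$2" actual
  actual="$(cluster_cidr_of "$file")"
  if [ "$actual" = "$expected" ]; then
    echo "ok: clusterCIDR=$actual"
  else
    echo "mismatch: clusterCIDR=${actual:-<unset>} expected=$expected"
    return 1
  fi
}

# Demo against a temporary file so the sketch runs without a cluster.
demo="$(mktemp)"
printf 'kind: KubeProxyConfiguration\nclusterCIDR: 172.16.0.0/12\nmode: "ipvs"\n' > "$demo"
check_cluster_cidr "$demo" 172.16.0.0/12
rm -f "$demo"
```

On a node, the call would be check_cluster_cidr /usr/local/kubernetes/cfg/kube-proxy.conf 172.16.0.0/12, with the second argument replaced by your own Pod CIDR.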

Install Calico

Official Calico installation documentation: Install Calico for on-premises deployments

Install the latest Calico

Download the latest Calico YAML manifest:

curl https://docs.projectcalico.org/manifests/calico-etcd.yaml -O

Edit the calico-etcd manifest to add the etcd endpoints and certificates:

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://192.168.55.111:2379,https://192.168.55.112:2379,https://192.168.55.113:2379,https://192.168.55.114:2379,https://192.168.55.115:2379"#g' calico-etcd.yaml

ETCD_CA=`cat /usr/local/etcd/ssl/etcd-ca.pem | base64 | tr -d '\n'`
ETCD_CERT=`cat /usr/local/etcd/ssl/etcd.pem | base64 | tr -d '\n'`
ETCD_KEY=`cat /usr/local/etcd/ssl/etcd-key.pem | base64 | tr -d '\n'`

sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml

sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

Change the CIDR in the Calico manifest to the Pod network, that is, 172.16.0.0/12.

Run the following command on k8s-master-01:

kubectl create -f calico-etcd.yaml

# Output:
configmap/calico-config created
customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
clusterrole.rbac.authorization.k8s.io/calico-node created
clusterrolebinding.rbac.authorization.k8s.io/calico-node created
daemonset.apps/calico-node created
serviceaccount/calico-node created
deployment.apps/calico-kube-controllers created
serviceaccount/calico-kube-controllers created

Check the status of the Calico Pods; the system Pods all run in the kube-system namespace:

kubectl get pods -n kube-system

# Output:
NAME READY STATUS RESTARTS AGE
calico-kube-controllers-97769f7c7-42snz 1/1 Running 0 2m47s
calico-node-b8hqx 1/1 Running 0 2m47s
calico-node-m5x7n 1/1 Running 0 2m47s
calico-node-pz5qn 1/1 Running 0 2m47s
calico-node-vp6wm 1/1 Running 0 2m47s
calico-node-wqgtn 1/1 Running 0 2m47s

Check the node status again:

# kubectl get nodes -owide
NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME
k8s-master-01 Ready <none> 42m v1.21.3 192.168.55.111 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9
k8s-master-02 Ready <none> 42m v1.21.3 192.168.55.112 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9
k8s-master-03 Ready <none> 42m v1.21.3 192.168.55.113 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9
k8s-node-01 Ready <none> 42m v1.21.3 192.168.55.114 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9
k8s-node-02 Ready <none> 42m v1.21.3 192.168.55.115 <none> CentOS Linux 7 (Core) 4.19.12-1.el7.elrepo.x86_64 containerd://1.4.9

If a container is in an abnormal state, use kubectl describe or kubectl logs to inspect it.
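The long etcd_endpoints string substituted into calico-etcd.yaml earlier in this section is easy to mistype. As a sketch, it can be assembled from a list of member IPs instead; etcd_endpoints is a hypothetical helper, port 2379 is taken from the guide, and the three addresses are examples to be replaced with your own etcd members.

```shell
# Join etcd member IPs into a comma-separated list of https endpoints.
etcd_endpoints() {
  local port=2379 out="" ip
  for ip in "$@"; do
    out="${out:+$out,}https://${ip}:${port}"
  done
  printf '%s\n' "$out"
}

ENDPOINTS="$(etcd_endpoints 192.168.55.111 192.168.55.112 192.168.55.113)"
echo "$ENDPOINTS"

# The value could then replace the placeholder in the manifest, for example:
# sed -i "s#http://<ETCD_IP>:<ETCD_PORT>#${ENDPOINTS}#g" calico-etcd.yaml
```

Building the list this way keeps the scheme and port consistent for every member.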

Install CoreDNS

Install the latest CoreDNS:

git clone https://github.com/coredns/deployment.git
cd deployment/kubernetes
./deploy.sh -s -i 10.96.0.10 | kubectl apply -f -
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created

# Check the status
kubectl get po -n kube-system -l k8s-app=kube-dns
NAME READY STATUS RESTARTS AGE
coredns-85b4878f78-h29kh 1/1 Running 0 8h

Alternatively, install the previously downloaded CoreDNS manifest:

cd ~/k8s-ha-install
kubectl create -f CoreDNS/coredns.yaml

# Output:
serviceaccount/coredns created
clusterrole.rbac.authorization.k8s.io/system:coredns created
clusterrolebinding.rbac.authorization.k8s.io/system:coredns created
configmap/coredns created
deployment.apps/coredns created
service/kube-dns created

Check the status:

kubectl get pods -n kube-system -l k8s-app=kube-dns
NAME READY STATUS RESTARTS AGE
coredns-7bf4bd64bd-fn8wc 1/1 Running 0 107s
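The DNS address 10.96.0.10 passed to deploy.sh must lie inside the Service CIDR (10.96.0.0/12) and match clusterDNS in kubelet-conf.yml. The membership test can be sketched in plain shell arithmetic; ip_to_int and ip_in_cidr are hypothetical helper names, and the sketch handles IPv4 only.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1          # split the address on dots into $1..$4
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 when IPv4 address $1 lies inside CIDR $2 (e.g. 10.96.0.0/12).
ip_in_cidr() {
  local ip="$1" net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

if ip_in_cidr 10.96.0.10 10.96.0.0/12; then
  echo "10.96.0.10 is inside the Service CIDR"
fi
```

The same check applies if you pick a different Service CIDR: the CoreDNS address handed to deploy.sh -i and the clusterDNS entry must both fall inside it.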

Install Metrics Server

metrics-server on GitHub: kubernetes-sigs/metrics-server

Install the latest metrics-server

Download the latest components.yaml file:

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.5.0/components.yaml

Sync the metrics-server image referenced in the file to an Alibaba Cloud image registry (omitted).

Edit components.yaml; the main changes are the following:

spec:
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443 # 1. Set the secure port to 4443
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    - --kubelet-insecure-tls # 2. Add this flag and the ones below
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # change to front-proxy-ca.crt for kubeadm
    - --requestheader-username-headers=X-Remote-User
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    image: registry.cn-hangzhou.aliyuncs.com/59izt/metrics-server:v0.5.0 # 3. Use the image synced to your own Alibaba Cloud registry
    ...
    ports:
    - containerPort: 4443 # 4. Set the exposed container port to 4443
      name: https
      protocol: TCP
    ...
    volumeMounts:
    - mountPath: /tmp
      name: tmp-dir
    - mountPath: /etc/kubernetes/pki # 5. Mount the certificate volume into the container
      name: ca-ssl
  ...
  volumes:
  - emptyDir: {}
    name: tmp-dir
  - name: ca-ssl # 6. Expose the host certificate directory as a volume
    hostPath:
      path: /usr/local/kubernetes/ssl

Run the following command to create the resources:

kubectl create -f components.yaml

Check the Pod status:

kubectl get pods -n kube-system -l k8s-app=metrics-server
NAME READY STATUS RESTARTS AGE
metrics-server-69c977b6ff-6ncw4 1/1 Running 0 4m7s

View the cluster metrics:

kubectl top pods -n kube-system

NAME CPU(cores) MEMORY(bytes)

calico-kube-controllers-97769f7c7-kv9d9 2m 12Mi

calico-node-ff66c 19m 45Mi

calico-node-hf46z 20m 45Mi

calico-node-x6nzb 22m 42Mi

calico-node-xff8p 19m 39Mi

calico-node-z9wdl 22m 42Mi

coredns-7bf4bd64bd-fn8wc 4m 13Mi

metrics-server-69c977b6ff-6ncw4 2m 16Mi

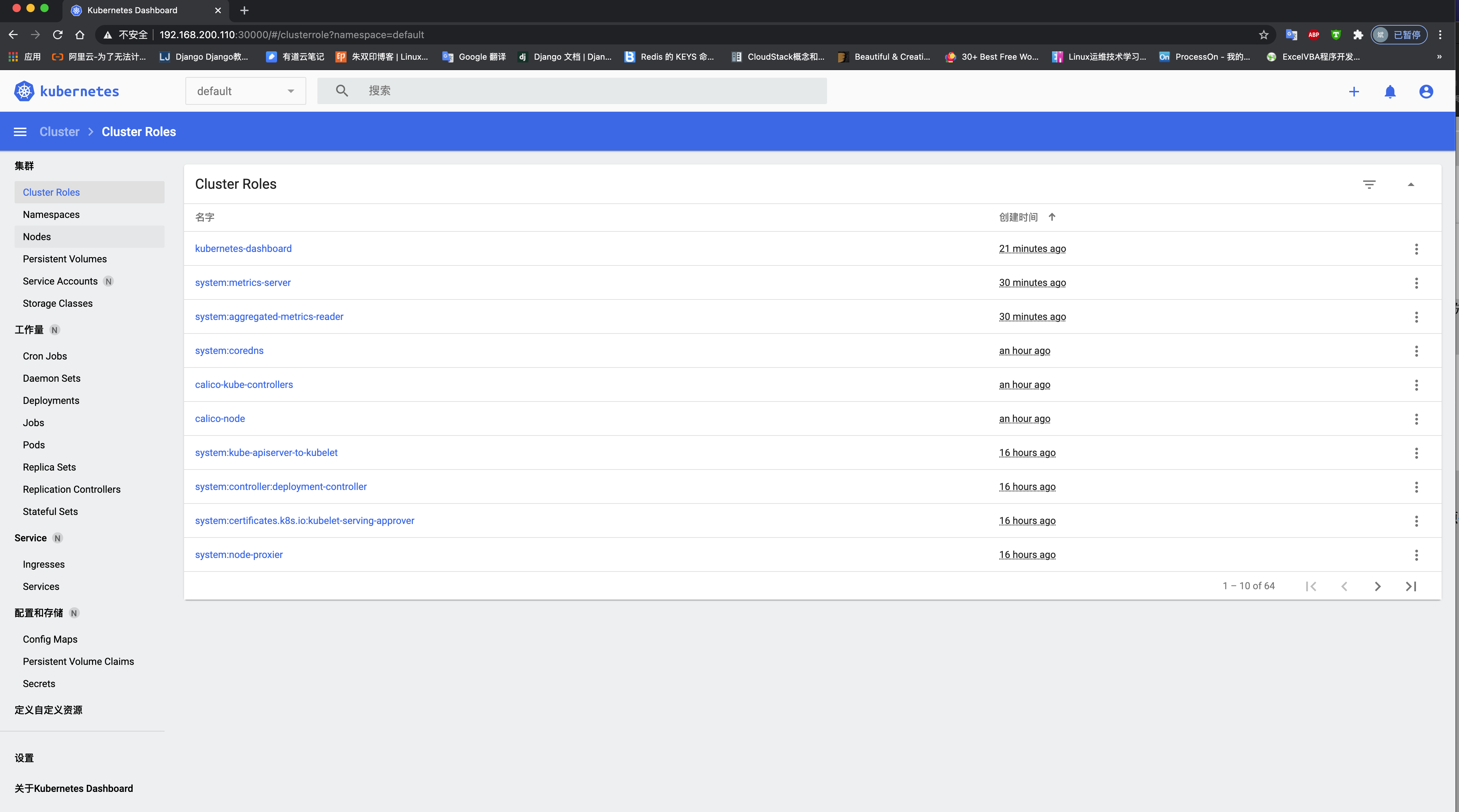
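Once metrics are available, the kubectl top pods output can be post-processed with ordinary text tools. The sketch below prints the busiest Pods by CPU; top_cpu_pods is a hypothetical helper, and it assumes the CPU column is reported in millicores with an m suffix, as in the output above.

```shell
# Read `kubectl top pods` output on stdin and print the N busiest Pods
# as "<millicores> <pod name>", highest CPU first (default N=3).
top_cpu_pods() {
  local n="${1:-3}"
  awk 'NR > 1 { cpu = $2; sub(/m$/, "", cpu); print cpu, $1 }' | sort -rn | head -n "$n"
}

# Shortened sample so the sketch runs without a cluster.
sample='NAME CPU(cores) MEMORY(bytes)
calico-node-ff66c 19m 45Mi
calico-node-x6nzb 22m 42Mi
coredns-7bf4bd64bd-fn8wc 4m 13Mi'

printf '%s\n' "$sample" | top_cpu_pods 2
```

On a live cluster this would be used as kubectl top pods -n kube-system | top_cpu_pods 5.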

Install Dashboard

Official Dashboard GitHub repository: https://github.com/kubernetes/dashboard

Find the latest installation manifest there and run the following command:

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.0.4/aio/deploy/recommended.yaml

Or use the previously downloaded, pinned version:

cd ~/k8s-ha-install/dashboard
kubectl apply -f .

# Output:

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Check the status of the Dashboard containers:

kubectl get pods -n kubernetes-dashboard
NAME READY STATUS RESTARTS AGE
dashboard-metrics-scraper-7b59f7d4df-thfkp 1/1 Running 0 116s
kubernetes-dashboard-548f88599b-wljlf 1/1 Running 0 116s

Edit the Dashboard Service and change type: ClusterIP to type: NodePort. Skip this step if it is already NodePort.

kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard

Because the Dashboard uses a self-signed certificate, the browser may refuse to open it. Adding the following startup flags to the Google Chrome launcher works around the problem:

--test-type --ignore-certificate-errors

Using the NodePort of your own instance, the Dashboard can be reached at the IP of any host running kube-proxy, or at the VIP, followed by that port. To look up the port:

# kubectl get svc -n kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

dashboard-metrics-scraper ClusterIP 10.99.225.145 <none> 8000/TCP 90s

kubernetes-dashboard NodePort 10.103.146.251 <none> 443:30479/TCP 90s

Create an administrator user (if you installed from the downloaded dashboard.yaml file, the user already exists and this step can be skipped):

cat > admin.yaml <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kube-system
EOF

kubectl create -f admin.yaml -n kube-system

View the token value:

kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

# Output:

Name: admin-user-token-fdvxb

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: a599017b-3c78-4b4e-84b5-8e8606446653

Type: kubernetes.io/service-account-token

Data

====

token: eyJhbGciOiJSUzI1NiIsImtpZCI6InVCTmZMclZYSHJBUzF5bWY2cFdLWkJNdEFkNzdfUmp3UXNZWjIzdlVsNWcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLWZkdnhiIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiJhNTk5MDE3Yi0zYzc4LTRiNGUtODRiNS04ZTg2MDY0NDY2NTMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.S34TEGtF-F-BGyU7o8hjF_S4sXMVgy6M3bXVrhen-Be1EC_qoYPfBlyhlMaPVYcEecXfg8j5MVXKnF5XswQufdRaYr9JxwH9T6JMHb-c09lWpVlVqjt5TuzI3kUuXj9geHnDdOrd_wDhNSNSdTBxOPALB1cCvhwt_NNlIwXrUJYkre3kXZnHsJqJeDVS0SGCwZczzls-u9CvNtzSckm3dIznVIc5_1ukyCtDpcOfaFNTOCDnCh7eeYcoQu9zDUukH3TGYuOEIsJA5heRIvp1FRVYCuf_wEgvkQd3IXJyMWoXmDyD4kGX-djYDp60-S7TucsZhtrC4ajm_v9rsxoiCA

ca.crt: 1363 bytes

namespace: 11 bytes

Open the Dashboard, choose Token as the sign-in method, enter the token obtained above, and sign in. The result looks like this: