Reference documentation:

Deploying a private IPFS Cluster on Kubernetes

About IPFS Cluster

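IPFS gives users the power of content-addressed storage. However, the permanent web also needs a data redundancy and availability solution that does not compromise the distributed nature of the IPFS network.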

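IPFS Cluster is a distributed application that runs as a sidecar to IPFS peers. It maintains a global cluster pinset and intelligently allocates its items to the IPFS peers. IPFS Cluster powers large IPFS storage services such as nft.storage and web3.storage.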

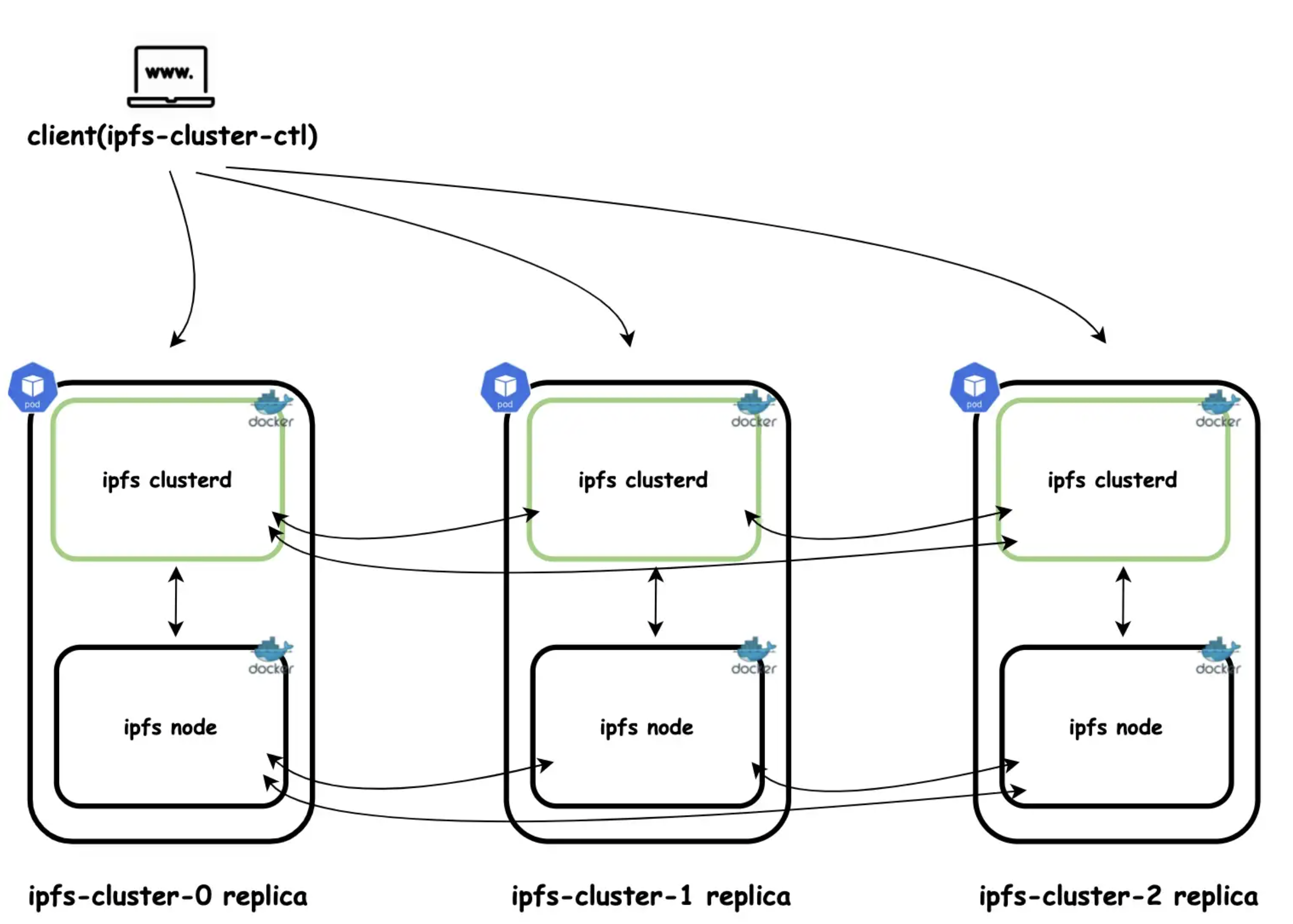

Cluster architecture

The diagram below shows the architecture of this IPFS cluster, which consists of three IPFS nodes and three IPFS Cluster nodes.

Cluster configuration

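IPFS Cluster uses two main configuration files:

- service.json: contains the cluster peer configuration and is usually identical on all cluster peers.
- identity.json: contains the unique identity used by each peer.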

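identity.json holds a base64-encoded private key and the public peer ID derived from it, and this peer ID identifies the peer within the cluster. For automated deployments, we can generate the peer ID and private key manually in advance and override them with the CLUSTER_ID and CLUSTER_PRIVATEKEY environment variables (the names used by entrypoint.sh below).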

Generating the peer ID and private key

Here the ipfs-key command generates the peer ID and the base64-encoded private key.

```shell
# Download the ipfs-key source code
git clone https://github.com/whyrusleeping/ipfs-key.git

# Build ipfs-key
cd ipfs-key
go build -o ipfs-key

# Install ipfs-key
mv ipfs-key /usr/local/bin/

# Generate bootstrap-peer-id and the key used to create bootstrap-peer-priv-key
# ipfs-key | base64 -w 0
Generating a 2048 bit RSA key...
Success!
ID for generated key: QmPwLuzdjuhYRqd4ZTXnCc9vsBB3PiiEz5vw7g3AhPXSkp
CAASpwkwggSjAgEAAoIBAQCuPhNCuUxiHhxM5o9LQKHg7+KKN3KBAr6xIhu1xiKljMHRZ9VHb82dAdwmolvpxLzHmZhyXY8Oa5/oN1CRjLY/akzQRMQUVEw7CgYAsonDeqCdoIO8tHbIGTOuMMJSQDMcDDBa31lntEk9cp5J+dFBSiJbCyl2NBP7u9dSUnY1piwH2pa4qy6ewtY+kUEEvxVe2IEmr9ojEn2WVrYJsey8xElTcW/G/WXikR6tcEZdMLcUQ1Gx4ky8fQeCQMLh0PNIfE4dOYSRC2vtxzSAMjI1IT+Yg6adapQ+5G3kVW9h5zTQ5BY80gYcsx7j3qFKGhu+wLB5rmxH6bNfhMXF6jnNAgMBAAECggEAJtHLprzzyJRex79CQ4jFyACJ3zNVPmrnIz2vIMNg6rM+ZzIT8VN2YrmMW8smYSGk0W9l6Gzxt0vBF5JjT9oirGQ5ctkvOjxBs5GbHsKoMLX8XMHrN7qZECGVQwS39m05NdF9YHGMUK949ci4fVC8Dyi+GLyW4y/dF+OUqFGY5oCduA9Wx0NGalN/E7WZk2Hgzn5bpjK35IeTIFnnf4WoJfUn2n70sJ0EPzhOjRjrBwo1XSygLgjSk/stPhLFHaNkIbLgfaTLARz7oooW5Kxe2HkWwZEs9sO+N8kPmEvX/9YpKtMB3B48rwoJN/GSejAmGKYNwjXB69wSKWTVOpTonQKBgQDCmRMjZEE4S1JcX2gbYSEoA215w8NX6LoBdP87kc/Bt/npdVTA7RJCDcWlLesKJHEtC9kOrN27E3UfEr7TWWeWqW1chN/ZzBzVDiwQ+aiYr/wOGlNXV8viDQC42v39y5BWdRNn/u+GLj40RtrMXBGZNnNuoDbqMfNRZKJoB/iKJwKBgQDlOMI++6kjsN/fvDAexDhs0Nn+NPabLE+eg4s0GcfoyR7IKQi6JmubZwc7MjWCopdPsZd0rdxs06h0QdnuQ2j/ZYPH1v2o6ZOG8b1xf8hb4Gxolnkh8zhANAqJRIsA0gBgxQuh3HguzR0fvSOhGzjy3sWqwznTGF1WHplh+kpY6wKBgQCRIHAxeNdbEHGACnct1CZSHRxMLz9EFICEDak71+bFZluvTJ3EtAll0beRFMmxarQtECT02N8UYdJ7NhOys36Z0gmJcl8voxXtnwAmOMsP9E9ahS+aeBPJpkDfnBLIcERY9j9e07X2sA4cFquetRs61G0KF9pclpwMG60zQJ6PCQKBgDi2pWDd9UhOX+XEcwd5txg9SGJcClP0T7LBizSV2F9hO4t8k5szHFaz1BcyYgjzX4qpPvbweWQ3risti9UmupjOLh/IsrQTLpwpvZySaClLSqdJ66iZu+Yuuhiia94FF3DZ7/nZSacSusz6iBE5Ygq9UZzhdrIjChzfr0WNns2HAoGAHXsysFVHtmbZAJpLw77IxAc+r2p4nErhGpULHpwn9gZzKj+RQtwTrNvXkv6nVsPJmltCQbcCnXEwdqxcEdAXSGvgucWS+IXh7m7npX/oIaNxYRndgFGIhbLwdU+IqUQANDRgMjSgjBPJf4W3GtdkT9G6JL4OxK9pshww9X+j/K0=

# Generate bootstrap-peer-priv-key: echo "<private key value>" | base64 -w 0 -
echo 'CAASpwkwggSjAgEAAoIBAQCuPhNCuUxiHhxM5o9LQKHg7+KKN3KBAr6xIhu1xiKljMHRZ9VHb82dAdwmolvpxLzHmZhyXY8Oa5/oN1CRjLY/akzQRMQUVEw7CgYAsonDeqCdoIO8tHbIGTOuMMJSQDMcDDBa31lntEk9cp5J+dFBSiJbCyl2NBP7u9dSUnY1piwH2pa4qy6ewtY+kUEEvxVe2IEmr9ojEn2WVrYJsey8xElTcW/G/WXikR6tcEZdMLcUQ1Gx4ky8fQeCQMLh0PNIfE4dOYSRC2vtxzSAMjI1IT+Yg6adapQ+5G3kVW9h5zTQ5BY80gYcsx7j3qFKGhu+wLB5rmxH6bNfhMXF6jnNAgMBAAECggEAJtHLprzzyJRex79CQ4jFyACJ3zNVPmrnIz2vIMNg6rM+ZzIT8VN2YrmMW8smYSGk0W9l6Gzxt0vBF5JjT9oirGQ5ctkvOjxBs5GbHsKoMLX8XMHrN7qZECGVQwS39m05NdF9YHGMUK949ci4fVC8Dyi+GLyW4y/dF+OUqFGY5oCduA9Wx0NGalN/E7WZk2Hgzn5bpjK35IeTIFnnf4WoJfUn2n70sJ0EPzhOjRjrBwo1XSygLgjSk/stPhLFHaNkIbLgfaTLARz7oooW5Kxe2HkWwZEs9sO+N8kPmEvX/9YpKtMB3B48rwoJN/GSejAmGKYNwjXB69wSKWTVOpTonQKBgQDCmRMjZEE4S1JcX2gbYSEoA215w8NX6LoBdP87kc/Bt/npdVTA7RJCDcWlLesKJHEtC9kOrN27E3UfEr7TWWeWqW1chN/ZzBzVDiwQ+aiYr/wOGlNXV8viDQC42v39y5BWdRNn/u+GLj40RtrMXBGZNnNuoDbqMfNRZKJoB/iKJwKBgQDlOMI++6kjsN/fvDAexDhs0Nn+NPabLE+eg4s0GcfoyR7IKQi6JmubZwc7MjWCopdPsZd0rdxs06h0QdnuQ2j/ZYPH1v2o6ZOG8b1xf8hb4Gxolnkh8zhANAqJRIsA0gBgxQuh3HguzR0fvSOhGzjy3sWqwznTGF1WHplh+kpY6wKBgQCRIHAxeNdbEHGACnct1CZSHRxMLz9EFICEDak71+bFZluvTJ3EtAll0beRFMmxarQtECT02N8UYdJ7NhOys36Z0gmJcl8voxXtnwAmOMsP9E9ahS+aeBPJpkDfnBLIcERY9j9e07X2sA4cFquetRs61G0KF9pclpwMG60zQJ6PCQKBgDi2pWDd9UhOX+XEcwd5txg9SGJcClP0T7LBizSV2F9hO4t8k5szHFaz1BcyYgjzX4qpPvbweWQ3risti9UmupjOLh/IsrQTLpwpvZySaClLSqdJ66iZu+Yuuhiia94FF3DZ7/nZSacSusz6iBE5Ygq9UZzhdrIjChzfr0WNns2HAoGAHXsysFVHtmbZAJpLw77IxAc+r2p4nErhGpULHpwn9gZzKj+RQtwTrNvXkv6nVsPJmltCQbcCnXEwdqxcEdAXSGvgucWS+IXh7m7npX/oIaNxYRndgFGIhbLwdU+IqUQANDRgMjSgjBPJf4W3GtdkT9G6JL4OxK9pshww9X+j/K0=' | base64 -w 0 -

# The resulting secret value:
Q0FBU3B3a3dnZ1NqQWdFQUFvSUJBUUN1UGhOQ3VVeGlIaHhNNW85TFFLSGc3K0tLTjNLQkFyNnhJaHUxeGlLbGpNSFJaOVZIYjgyZEFkd21vbHZweEx6SG1aaHlYWThPYTUvb04xQ1JqTFkvYWt6UVJNUVVWRXc3Q2dZQXNvbkRlcUNkb0lPOHRIYklHVE91TU1KU1FETWNEREJhMzFsbnRFazljcDVKK2RGQlNpSmJDeWwyTkJQN3U5ZFNVblkxcGl3SDJwYTRxeTZld3RZK2tVRUV2eFZlMklFbXI5b2pFbjJXVnJZSnNleTh4RWxUY1cvRy9XWGlrUjZ0Y0VaZE1MY1VRMUd4NGt5OGZRZUNRTUxoMFBOSWZFNGRPWVNSQzJ2dHh6U0FNakkxSVQrWWc2YWRhcFErNUcza1ZXOWg1elRRNUJZODBnWWNzeDdqM3FGS0dodSt3TEI1cm14SDZiTmZoTVhGNmpuTkFnTUJBQUVDZ2dFQUp0SExwcnp6eUpSZXg3OUNRNGpGeUFDSjN6TlZQbXJuSXoydklNTmc2ck0rWnpJVDhWTjJZcm1NVzhzbVlTR2swVzlsNkd6eHQwdkJGNUpqVDlvaXJHUTVjdGt2T2p4QnM1R2JIc0tvTUxYOFhNSHJON3FaRUNHVlF3UzM5bTA1TmRGOVlIR01VSzk0OWNpNGZWQzhEeWkrR0x5VzR5L2RGK09VcUZHWTVvQ2R1QTlXeDBOR2FsTi9FN1daazJIZ3puNWJwakszNUllVElGbm5mNFdvSmZVbjJuNzBzSjBFUHpoT2pSanJCd28xWFN5Z0xnalNrL3N0UGhMRkhhTmtJYkxnZmFUTEFSejdvb29XNUt4ZTJIa1d3WkVzOXNPK044a1BtRXZYLzlZcEt0TUIzQjQ4cndvSk4vR1NlakFtR0tZTndqWEI2OXdTS1dUVk9wVG9uUUtCZ1FEQ21STWpaRUU0UzFKY1gyZ2JZU0VvQTIxNXc4Tlg2TG9CZFA4N2tjL0J0L25wZFZUQTdSSkNEY1dsTGVzS0pIRXRDOWtPck4yN0UzVWZFcjdUV1dlV3FXMWNoTi9aekJ6VkRpd1ErYWlZci93T0dsTlhWOHZpRFFDNDJ2Mzl5NUJXZFJObi91K0dMajQwUnRyTVhCR1pObk51b0RicU1mTlJaS0pvQi9pS0p3S0JnUURsT01JKys2a2pzTi9mdkRBZXhEaHMwTm4rTlBhYkxFK2VnNHMwR2Nmb3lSN0lLUWk2Sm11Ylp3YzdNaldDb3BkUHNaZDByZHhzMDZoMFFkbnVRMmovWllQSDF2Mm82Wk9HOGIxeGY4aGI0R3hvbG5raDh6aEFOQXFKUklzQTBnQmd4UXVoM0hndXpSMGZ2U09oR3pqeTNzV3F3em5UR0YxV0hwbGgra3BZNndLQmdRQ1JJSEF4ZU5kYkVIR0FDbmN0MUNaU0hSeE1MejlFRklDRURhazcxK2JGWmx1dlRKM0V0QWxsMGJlUkZNbXhhclF0RUNUMDJOOFVZZEo3TmhPeXMzNlowZ21KY2w4dm94WHRud0FtT01zUDlFOWFoUythZUJQSnBrRGZuQkxJY0VSWTlqOWUwN1gyc0E0Y0ZxdWV0UnM2MUcwS0Y5cGNscHdNRzYwelFKNlBDUUtCZ0RpMnBXRGQ5VWhPWCtYRWN3ZDV0eGc5U0dKY0NsUDBUN0xCaXpTVjJGOWhPNHQ4azVzekhGYXoxQmN5WWdqelg0cXBQdmJ3ZVdRM3Jpc3RpOVVtdXBqT0xoL0lzclFUTHB3cHZaeVNhQ2xMU3FkSjY2aVp1K1l1dWhpaWE5NEZGM0RaNy9uWlNhY1N1c3o2aUJFNVlncTlVWnpoZHJJakNoemZyMFdObnMySEFvR0FIWHN5c0ZWSHRtYlpBSnBMdzc3SXhBYytyMnA0bkVyaEdwVUxIcHduOWdaektqK1JRdHdUck52WGt2Nm5Wc1BKbWx0Q1FiY0NuWEV3ZHF4Y0VkQVhTR3ZndWNXUytJWGg3bTducFgvb0lhTnhZUm5kZ0ZHSWhiTHdkVStJcVVRQU5EUmdNalNnakJQSmY0VzNHdGRrVDlHNkpMNE94Szlwc2h3dzlYK2ovSzA9Cg==
```

service.json contains a 32-byte, hex-encoded secret that acts as the libp2p network protector. The peers use it as a pre-shared key to add an extra layer of encryption to all communication between cluster peers (libp2p). The secret value can be overridden with the CLUSTER_SECRET environment variable. I generated the cluster secret with the following command:

```shell
# Generate the secret for the IPFS cluster
# od -vN 32 -An -tx1 /dev/urandom | tr -d ' \n' | base64 -w 0 -
OTEwMDFhODg1ZGZkY2UzOWQ0ZWE0NTcwZDYxNGZiNTUxMWU1MGJkN2QxMTc3YjY1OTVhNjFhOTQ2OWMzMGM4MQ==
```
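The value stored in secret.yaml is base64 wrapped around the hex secret. A quick check before deploying can catch a wrong length or a stray newline. The following is a minimal sketch that assumes GNU coreutils (base64 -w 0 and -d). The function names gen_cluster_secret and check_cluster_secret are hypothetical, and the generator simply wraps the od pipeline shown above:

```shell
#!/bin/sh
# Hypothetical helper: the od pipeline from above wrapped in a function.
# Prints base64(64 hex characters) with no trailing newline.
gen_cluster_secret() {
  od -vN 32 -An -tx1 /dev/urandom | tr -d ' \n' | base64 -w 0
}

# Hypothetical helper: succeed only if $1 (a base64 value as written in
# secret.yaml) decodes to exactly 64 hex characters, i.e. a 32-byte secret.
check_cluster_secret() {
  # wc -c counts raw bytes, so an encoded trailing newline makes this 65
  # (command substitution alone would silently strip that newline).
  len=$(printf '%s' "$1" | base64 -d 2>/dev/null | wc -c | tr -d ' ')
  [ "$len" -eq 64 ] || return 1
  hex=$(printf '%s' "$1" | base64 -d 2>/dev/null)
  case "$hex" in
    *[!0-9a-fA-F]*) return 1 ;;   # reject any non-hex character
  esac
  return 0
}
```

For example, check_cluster_secret "$(gen_cluster_secret)" && echo ok should print ok.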

Creating the Kubernetes resource manifests

Create configmap.yaml with the following content:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: env-config
  namespace: vonebfs-dev
data:
  bootstrap-peer-id: QmPwLuzdjuhYRqd4ZTXnCc9vsBB3PiiEz5vw7g3AhPXSkp
```

The value of bootstrap-peer-id is the peer ID ("ID for generated key") printed when running ipfs-key | base64 -w 0.

Create secret.yaml with the following content:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret-config
  namespace: vonebfs-dev
type: Opaque
data:
  cluster-secret: YzVhNzZlNTk4YmUzMWJiMjQ3YWNmZTViZmM2MDg0NTZiMjJlZTNiNjMxMjhmYzEwZGNmYjkwZDVjYTBmYWE3Ng==
  bootstrap-peer-priv-key: Q0FBU3B3a3dnZ1NqQWdFQUFvSUJBUUN1UGhOQ3VVeGlIaHhNNW85TFFLSGc3K0tLTjNLQkFyNnhJaHUxeGlLbGpNSFJaOVZIYjgyZEFkd21vbHZweEx6SG1aaHlYWThPYTUvb04xQ1JqTFkvYWt6UVJNUVVWRXc3Q2dZQXNvbkRlcUNkb0lPOHRIYklHVE91TU1KU1FETWNEREJhMzFsbnRFazljcDVKK2RGQlNpSmJDeWwyTkJQN3U5ZFNVblkxcGl3SDJwYTRxeTZld3RZK2tVRUV2eFZlMklFbXI5b2pFbjJXVnJZSnNleTh4RWxUY1cvRy9XWGlrUjZ0Y0VaZE1MY1VRMUd4NGt5OGZRZUNRTUxoMFBOSWZFNGRPWVNSQzJ2dHh6U0FNakkxSVQrWWc2YWRhcFErNUcza1ZXOWg1elRRNUJZODBnWWNzeDdqM3FGS0dodSt3TEI1cm14SDZiTmZoTVhGNmpuTkFnTUJBQUVDZ2dFQUp0SExwcnp6eUpSZXg3OUNRNGpGeUFDSjN6TlZQbXJuSXoydklNTmc2ck0rWnpJVDhWTjJZcm1NVzhzbVlTR2swVzlsNkd6eHQwdkJGNUpqVDlvaXJHUTVjdGt2T2p4QnM1R2JIc0tvTUxYOFhNSHJON3FaRUNHVlF3UzM5bTA1TmRGOVlIR01VSzk0OWNpNGZWQzhEeWkrR0x5VzR5L2RGK09VcUZHWTVvQ2R1QTlXeDBOR2FsTi9FN1daazJIZ3puNWJwakszNUllVElGbm5mNFdvSmZVbjJuNzBzSjBFUHpoT2pSanJCd28xWFN5Z0xnalNrL3N0UGhMRkhhTmtJYkxnZmFUTEFSejdvb29XNUt4ZTJIa1d3WkVzOXNPK044a1BtRXZYLzlZcEt0TUIzQjQ4cndvSk4vR1NlakFtR0tZTndqWEI2OXdTS1dUVk9wVG9uUUtCZ1FEQ21STWpaRUU0UzFKY1gyZ2JZU0VvQTIxNXc4Tlg2TG9CZFA4N2tjL0J0L25wZFZUQTdSSkNEY1dsTGVzS0pIRXRDOWtPck4yN0UzVWZFcjdUV1dlV3FXMWNoTi9aekJ6VkRpd1ErYWlZci93T0dsTlhWOHZpRFFDNDJ2Mzl5NUJXZFJObi91K0dMajQwUnRyTVhCR1pObk51b0RicU1mTlJaS0pvQi9pS0p3S0JnUURsT01JKys2a2pzTi9mdkRBZXhEaHMwTm4rTlBhYkxFK2VnNHMwR2Nmb3lSN0lLUWk2Sm11Ylp3YzdNaldDb3BkUHNaZDByZHhzMDZoMFFkbnVRMmovWllQSDF2Mm82Wk9HOGIxeGY4aGI0R3hvbG5raDh6aEFOQXFKUklzQTBnQmd4UXVoM0hndXpSMGZ2U09oR3pqeTNzV3F3em5UR0YxV0hwbGgra3BZNndLQmdRQ1JJSEF4ZU5kYkVIR0FDbmN0MUNaU0hSeE1MejlFRklDRURhazcxK2JGWmx1dlRKM0V0QWxsMGJlUkZNbXhhclF0RUNUMDJOOFVZZEo3TmhPeXMzNlowZ21KY2w4dm94WHRud0FtT01zUDlFOWFoUythZUJQSnBrRGZuQkxJY0VSWTlqOWUwN1gyc0E0Y0ZxdWV0UnM2MUcwS0Y5cGNscHdNRzYwelFKNlBDUUtCZ0RpMnBXRGQ5VWhPWCtYRWN3ZDV0eGc5U0dKY0NsUDBUN0xCaXpTVjJGOWhPNHQ4azVzekhGYXoxQmN5WWdqelg0cXBQdmJ3ZVdRM3Jpc3RpOVVtdXBqT0xoL0lzclFUTHB3cHZaeVNhQ2xMU3FkSjY2aVp1K1l1dWhpaWE5NEZGM0RaNy9uWlNhY1N1c3o2aUJFNVlncTlVWnpoZHJJakNoemZyMFdObnMySEFvR0FIWHN5c0ZWSHRtYlpBSnBMdzc3SXhBYytyMnA0bkVyaEdwVUxIcHduOWdaektqK1JRdHdUck52WGt2Nm5Wc1BKbWx0Q1FiY0NuWEV3ZHF4Y0VkQVhTR3ZndWNXUytJWGg3bTducFgvb0lhTnhZUm5kZ0ZHSWhiTHdkVStJcVVRQU5EUmdNalNnakJQSmY0VzNHdGRrVDlHNkpMNE94Szlwc2h3dzlYK2ovSzA9Cg==
```

cluster-secret is generated with the command od -vN 32 -An -tx1 /dev/urandom | tr -d ' \n' | base64 -w 0 -.

bootstrap-peer-priv-key is generated with echo "<private key value>" | base64 -w 0 - (on macOS, base64 -b 0 replaces -w 0). Here <private key value> is the private key produced by the ipfs-key command in the first step.
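The data values of a Kubernetes Secret must be base64-encoded, and base64 encodes every byte it receives. echo appends a newline, which is why the encoded private key above ends in Cg==, the base64 form of a single newline byte. The following is a minimal sketch of an encoder that uses printf '%s' instead, so that only the value itself is encoded. The function names secret_b64 and secret_unb64 are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: base64-encode a value for a Kubernetes Secret "data"
# field without the trailing newline that echo would add.
secret_b64() {
  # tr removes base64's own line wrapping, so no -w 0 / -b 0 flag is needed.
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Hypothetical helper: decode a Secret value back to the original bytes.
secret_unb64() {
  printf '%s' "$1" | base64 -d
}
```

For example, secret_b64 "$(ipfs-key | base64 | tr -d '\n')" would produce a bootstrap-peer-priv-key value without the trailing newline, assuming ipfs-key is installed as described above.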

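Create the bootstrap file bootstrap.yaml. It contains two shell scripts:

- entrypoint.sh: bootstraps the ipfs-cluster peers without manual intervention.
- configure-ipfs.sh: configures the ipfs daemon with production values.

These scripts are used in the Kubernetes StatefulSet object that defines the ipfs-cluster and ipfs containers.

For more information on configuring ipfs for production, see go-ipfs configuration tuning. bootstrap.yaml has the following content: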

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: ipfs-cluster-set-bootstrap-conf
  namespace: vonebfs-dev
data:
  entrypoint.sh: |
    #!/bin/sh
    user=ipfs

    # This is a custom entrypoint for k8s designed to connect to the bootstrap
    # node running in the cluster. It has been set up using a configmap to
    # allow changes on the fly.

    if [ ! -f /data/ipfs-cluster/service.json ]; then
      ipfs-cluster-service init
    fi

    # accept gw api requests from outside
    # accept cluster api requests from outside
    sed -i s~/ip4/127.0.0.1/tcp/9095~/ip4/0.0.0.0/tcp/9095~ /data/ipfs-cluster/service.json
    sed -i s~/ip4/127.0.0.1/tcp/9094~/ip4/0.0.0.0/tcp/9094~ /data/ipfs-cluster/service.json

    PEER_HOSTNAME=`cat /proc/sys/kernel/hostname`

    grep -q ".*ipfs-cluster-0.*" /proc/sys/kernel/hostname
    if [ $? -eq 0 ]; then
      # Pod 0 is the bootstrap peer and uses the pre-generated identity.
      CLUSTER_ID=${BOOTSTRAP_PEER_ID} \
      CLUSTER_PRIVATEKEY=${BOOTSTRAP_PEER_PRIV_KEY} \
      exec ipfs-cluster-service daemon --upgrade
    else
      # Every other pod joins through pod 0; fail early if the peer ID is missing.
      if [ -z "${BOOTSTRAP_PEER_ID}" ]; then
        echo "BOOTSTRAP_PEER_ID is not set" >&2
        exit 1
      fi
      BOOTSTRAP_ADDR=/dns4/${SVC_NAME}-0.${SVC_NAME}/tcp/9096/ipfs/${BOOTSTRAP_PEER_ID}
      # Only ipfs user can get here
      exec ipfs-cluster-service daemon --upgrade --bootstrap "$BOOTSTRAP_ADDR" --leave
    fi

  configure-ipfs.sh: |
    #!/bin/sh
    set -e
    set -x
    user=ipfs
    # This is a custom entrypoint for k8s designed to run ipfs nodes in an appropriate
    # setup for production scenarios.

    mkdir -p /data/ipfs && chown -R ipfs /data/ipfs

    if [ -f /data/ipfs/config ]; then
      if [ -f /data/ipfs/repo.lock ]; then
        rm /data/ipfs/repo.lock
      fi
      exit 0
    fi

    ipfs init --profile=local-discovery
    ipfs config Addresses.API /ip4/0.0.0.0/tcp/5001
    ipfs config Addresses.Gateway /ip4/0.0.0.0/tcp/8080
    ipfs config --json Swarm.ConnMgr.HighWater 2000
    ipfs config --json Datastore.BloomFilterSize 1048576
    ipfs config Datastore.StorageMax 1GB
    # Allow cross-origin access. The domain http://ipfs.59izt.com must match the host of the Ingress created later.
    ipfs config --json API.HTTPHeaders.Access-Control-Allow-Origin '["http://ipfs.59izt.com", "http://localhost:3000", "http://127.0.0.1:5001", "https://webui.ipfs.io"]'
    ipfs config --json API.HTTPHeaders.Access-Control-Allow-Methods '["PUT", "POST"]'
    # Remove all default bootstrap peers
    ipfs bootstrap rm all
    # Fix ownership: the daemon runs as the ipfs user and sometimes reports insufficient permissions on /data/ipfs/config
    chown -R ipfs /data/ipfs
```
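The branching in entrypoint.sh can be isolated into a small function, which lets you check what each replica will do without starting a pod. The sketch below assumes this manifest's conventions (SVC_NAME=ipfs-cluster, cluster swarm port 9096). The function name bootstrap_addr_for is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper mirroring the branch in entrypoint.sh.
# $1 = pod hostname, $2 = SVC_NAME, $3 = BOOTSTRAP_PEER_ID
# Prints nothing for the bootstrap pod (ordinal 0); for every other pod prints
# the multiaddr it would pass to "ipfs-cluster-service daemon --bootstrap".
bootstrap_addr_for() {
  case "$1" in
    *ipfs-cluster-0*) return 0 ;;   # same test as the grep in entrypoint.sh
  esac
  [ -n "$3" ] || return 1           # no peer ID, no usable bootstrap address
  printf '/dns4/%s-0.%s/tcp/9096/ipfs/%s\n' "$2" "$2" "$3"
}
```

For example, looping over ipfs-cluster-0, ipfs-cluster-1 and ipfs-cluster-2 shows that only the last two receive a --bootstrap address.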

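Create statefulset.yaml. The IPFS Cluster and IPFS containers are deployed in a Kubernetes StatefulSet that runs 3 pod replicas, and each pod contains an ipfs-cluster container and an ipfs container. configure-ipfs.sh is the command of the configure-ipfs init container, which prepares the ipfs repository before the ipfs container starts. entrypoint.sh is the command of the ipfs-cluster container. The data volumes of the ipfs-cluster and ipfs containers are defined as volumeClaimTemplates.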

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: ipfs-cluster
  labels:
    app: ipfs-cluster
  namespace: vonebfs-dev
spec:
  serviceName: ipfs-cluster
  replicas: 3
  selector:
    matchLabels:
      app: ipfs-cluster
  template:
    metadata:
      labels:
        app: ipfs-cluster
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/edge
                    operator: DoesNotExist
      initContainers:
        - name: configure-ipfs
          image: "ipfs/kubo:v0.16.0"
          command: ["sh", "/custom/configure-ipfs.sh"]
          volumeMounts:
            - name: ipfs-storage
              mountPath: /data/ipfs
            - name: configure-script
              mountPath: /custom
      containers:
        - name: ipfs
          image: "ipfs/kubo:v0.16.0"
          imagePullPolicy: IfNotPresent
          env:
            - name: IPFS_FD_MAX
              value: "4096"
            #- name: IPFS_SWARM_KEY_FILE
            #  value: "/ipfs/swarmkey/swarm.key"
          ports:
            - name: swarm
              protocol: TCP
              containerPort: 4001
            - name: swarm-udp
              protocol: UDP
              containerPort: 4002
            - name: api
              protocol: TCP
              containerPort: 5001
            - name: ws
              protocol: TCP
              containerPort: 8081
            - name: http
              protocol: TCP
              containerPort: 8080
          livenessProbe:
            tcpSocket:
              port: swarm
            initialDelaySeconds: 30
            timeoutSeconds: 5
            periodSeconds: 15
          volumeMounts:
            - name: ipfs-storage
              mountPath: /data/ipfs
            - name: configure-script
              mountPath: /custom
            #- name: ipfs-swarm-key
            #  mountPath: /ipfs/swarmkey
          resources:
            {}
        - name: ipfs-cluster
          image: "ipfs/ipfs-cluster:1.0.4"
          imagePullPolicy: IfNotPresent
          command: ["sh", "/custom/entrypoint.sh"]
          envFrom:
            - configMapRef:
                name: env-config
          env:
            - name: BOOTSTRAP_PEER_ID
              valueFrom:
                configMapKeyRef:
                  name: env-config
                  key: bootstrap-peer-id
            - name: BOOTSTRAP_PEER_PRIV_KEY
              valueFrom:
                secretKeyRef:
                  name: secret-config
                  key: bootstrap-peer-priv-key
            - name: CLUSTER_SECRET
              valueFrom:
                secretKeyRef:
                  name: secret-config
                  key: cluster-secret
            - name: CLUSTER_MONITOR_PING_INTERVAL
              value: "3m"
            - name: SVC_NAME
              value: ipfs-cluster
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
          ports:
            - name: api-http
              containerPort: 9094
              protocol: TCP
            - name: proxy-http
              containerPort: 9095
              protocol: TCP
            - name: cluster-swarm
              containerPort: 9096
              protocol: TCP
          livenessProbe:
            tcpSocket:
              port: cluster-swarm
            initialDelaySeconds: 5
            timeoutSeconds: 5
            periodSeconds: 10
          volumeMounts:
            - name: cluster-storage
              mountPath: /data/ipfs-cluster
            - name: configure-script
              mountPath: /custom
          resources:
            {}
      volumes:
        - name: configure-script
          configMap:
            name: ipfs-cluster-set-bootstrap-conf
        #- name: ipfs-swarm-key
        #  configMap:
        #    name: ipfs-swarm-key
  volumeClaimTemplates:
    - metadata:
        name: cluster-storage
      spec:
        storageClassName: glusterfs
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 5Gi
    - metadata:
        name: ipfs-storage
      spec:
        storageClassName: glusterfs
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 10Gi
```

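Create service.yaml. This Service exposes the IPFS cluster endpoints to clients outside the pods; because its type is ClusterIP, those clients must be inside the Kubernetes cluster. Kubernetes publishes per-pod DNS records such as ipfs-cluster-0.ipfs-cluster, which entrypoint.sh uses for --bootstrap, only when the StatefulSet's serviceName refers to a headless Service (clusterIP: None). A ClusterIP Service like this one does not create them, so a separate headless Service may be needed. The Kubernetes Service definition is as follows: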

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ipfs-cluster
  labels:
    app: ipfs-cluster
  namespace: vonebfs-dev
spec:
  type: ClusterIP
  ports:
    - name: swarm
      targetPort: swarm
      port: 4001
    - name: swarm-udp
      targetPort: swarm-udp
      port: 4002
    - name: api
      targetPort: api
      port: 5001
    - name: ws
      targetPort: ws
      port: 8081
    - name: http
      targetPort: http
      port: 8080
    - name: api-http
      targetPort: api-http
      port: 9094
    - name: proxy-http
      targetPort: proxy-http
      port: 9095
    - name: cluster-swarm
      targetPort: cluster-swarm
      port: 9096
  selector:
    app: ipfs-cluster
```
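The address that entrypoint.sh builds for --bootstrap follows the StatefulSet naming scheme <statefulset>-<ordinal>.<serviceName>. The short form ipfs-cluster-0.ipfs-cluster is completed through the pod's DNS search domains. The sketch below lists the fully qualified names for this manifest and assumes the common default cluster domain cluster.local. The function name statefulset_pod_fqdns is hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the stable per-pod DNS names of a StatefulSet,
# <sts>-<ordinal>.<svc>.<namespace>.svc.<domain>, one per line.
# $5 (cluster domain) defaults to cluster.local, the usual default.
statefulset_pod_fqdns() {
  sts=$1 svc=$2 ns=$3 replicas=$4 domain=${5:-cluster.local}
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf '%s-%s.%s.%s.svc.%s\n' "$sts" "$i" "$svc" "$ns" "$domain"
    i=$((i + 1))
  done
}
```

For this deployment, statefulset_pod_fqdns ipfs-cluster ipfs-cluster vonebfs-dev 3 lists the three names, starting with ipfs-cluster-0.ipfs-cluster.vonebfs-dev.svc.cluster.local.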

Deploying the IPFS Cluster

Run the following commands to create the resources:

```shell
kubectl create -f configmap.yaml
kubectl create -f secret.yaml
kubectl create -f bootstrap.yaml
kubectl create -f statefulset.yaml
kubectl create -f service.yaml
```

Check the deployment status:

```shell
# kubectl get pods -n vonebfs-dev -owide
NAME             READY   STATUS    RESTARTS   AGE     IP               NODE               NOMINATED NODE   READINESS GATES
ipfs-cluster-0   2/2     Running   0          12m     172.16.227.214   k8s-sit-node02     <none>           <none>
ipfs-cluster-1   2/2     Running   0          12m     172.16.118.93    k8s-sit-node01     <none>           <none>
ipfs-cluster-2   2/2     Running   0          9m22s   172.16.131.86    k8s-sit-master02   <none>           <none>

# kubectl get svc -n vonebfs-dev -l app=ipfs-cluster
NAME           TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)                                                                   AGE
ipfs-cluster   ClusterIP   10.96.247.23   <none>        4001/TCP,4002/TCP,5001/TCP,8081/TCP,8080/TCP,9094/TCP,9095/TCP,9096/TCP   12m
```
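Reading the READY column by eye works for three pods, but the same check can be scripted. The minimal sketch below reads the kubectl get pods text on stdin and succeeds only if every row is Running with all containers ready. The function name all_pods_ready is hypothetical, and the sketch assumes the default column order shown above (NAME, READY, STATUS first):

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get pods" output on stdin and succeed only
# if there is at least one pod and every pod is Running with all containers
# ready (READY column n/n).
all_pods_ready() {
  awk '
    NR == 1 { next }                   # skip the header row
    NF == 0 { next }                   # ignore blank lines
    {
      rows++
      split($2, r, "/")                # READY, e.g. "2/2" -> r[1]=2, r[2]=2
      if (r[1] != r[2] || $3 != "Running") bad++
    }
    END { if (rows > 0 && bad == 0) exit 0; exit 1 }
  '
}
```

For example: kubectl get pods -n vonebfs-dev -l app=ipfs-cluster | all_pods_ready && echo "all pods ready". Alternatively, kubectl rollout status statefulset/ipfs-cluster -n vonebfs-dev waits for the StatefulSet rollout directly.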

Verifying the cluster

Check the cluster status:

```shell
# kubectl exec -it -n vonebfs-dev ipfs-cluster-0 -c ipfs-cluster -- ipfs-cluster-ctl peers ls
QmPwLuzdjuhYRqd4ZTXnCc9vsBB3PiiEz5vw7g3AhPXSkp | ipfs-cluster-0 | Sees 2 other peers
  > Addresses:
    - /ip4/127.0.0.1/tcp/9096/p2p/QmPwLuzdjuhYRqd4ZTXnCc9vsBB3PiiEz5vw7g3AhPXSkp
    - /ip4/172.16.227.215/tcp/9096/p2p/QmPwLuzdjuhYRqd4ZTXnCc9vsBB3PiiEz5vw7g3AhPXSkp
  > IPFS: 12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip4/127.0.0.1/tcp/4001/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip4/127.0.0.1/udp/4001/quic/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip4/172.16.227.215/tcp/4001/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip4/172.16.227.215/udp/4001/quic/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip6/::1/tcp/4001/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
    - /ip6/::1/udp/4001/quic/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
12D3KooWShap7iSUELUJyftmRANUt2NdFhbDKrMA9v6XiFbUDgoi | ipfs-cluster-1 | Sees 2 other peers
  > Addresses:
    - /ip4/127.0.0.1/tcp/9096/p2p/12D3KooWShap7iSUELUJyftmRANUt2NdFhbDKrMA9v6XiFbUDgoi
    - /ip4/172.16.118.94/tcp/9096/p2p/12D3KooWShap7iSUELUJyftmRANUt2NdFhbDKrMA9v6XiFbUDgoi
  > IPFS: 12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip4/127.0.0.1/tcp/4001/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip4/127.0.0.1/udp/4001/quic/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip4/172.16.118.94/tcp/4001/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip4/172.16.118.94/udp/4001/quic/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip6/::1/tcp/4001/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
    - /ip6/::1/udp/4001/quic/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
12D3KooWAdKy6F32SBNq1qcDw3efViMJ5kUiCewwHUjwASN9hvF2 | ipfs-cluster-2 | Sees 2 other peers
  > Addresses:
    - /ip4/127.0.0.1/tcp/9096/p2p/12D3KooWAdKy6F32SBNq1qcDw3efViMJ5kUiCewwHUjwASN9hvF2
    - /ip4/172.16.131.87/tcp/9096/p2p/12D3KooWAdKy6F32SBNq1qcDw3efViMJ5kUiCewwHUjwASN9hvF2
  > IPFS: 12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip4/127.0.0.1/tcp/4001/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip4/127.0.0.1/udp/4001/quic/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip4/172.16.131.87/tcp/4001/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip4/172.16.131.87/udp/4001/quic/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip6/::1/tcp/4001/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
    - /ip6/::1/udp/4001/quic/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
```

Check the status of the IPFS nodes:

```shell
# kubectl exec -it -n vonebfs-dev ipfs-cluster-0 -c ipfs -- ipfs swarm peers
/ip4/172.16.118.94/tcp/4001/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
/ip4/172.16.131.87/tcp/4001/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk

# kubectl exec -it -n vonebfs-dev ipfs-cluster-1 -c ipfs -- ipfs swarm peers
/ip4/172.16.131.87/udp/4001/quic/p2p/12D3KooWN7KBCLkjpjsZRKYjvU8rsgR3iuArxjoqFEJZNXgMjzDk
/ip4/172.16.227.215/tcp/4001/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT

# kubectl exec -it -n vonebfs-dev ipfs-cluster-2 -c ipfs -- ipfs swarm peers
/ip4/172.16.118.94/udp/4001/quic/p2p/12D3KooWDDVS2i9zTLptkrDHSsaDsqYpMMsFbm7hcS6nkS8dpPs2
/ip4/172.16.227.215/tcp/4001/p2p/12D3KooWA4F4Hj5wYkfaEuviC4iCbE7eYg1Vvhp7KuVWyFQxrerT
```

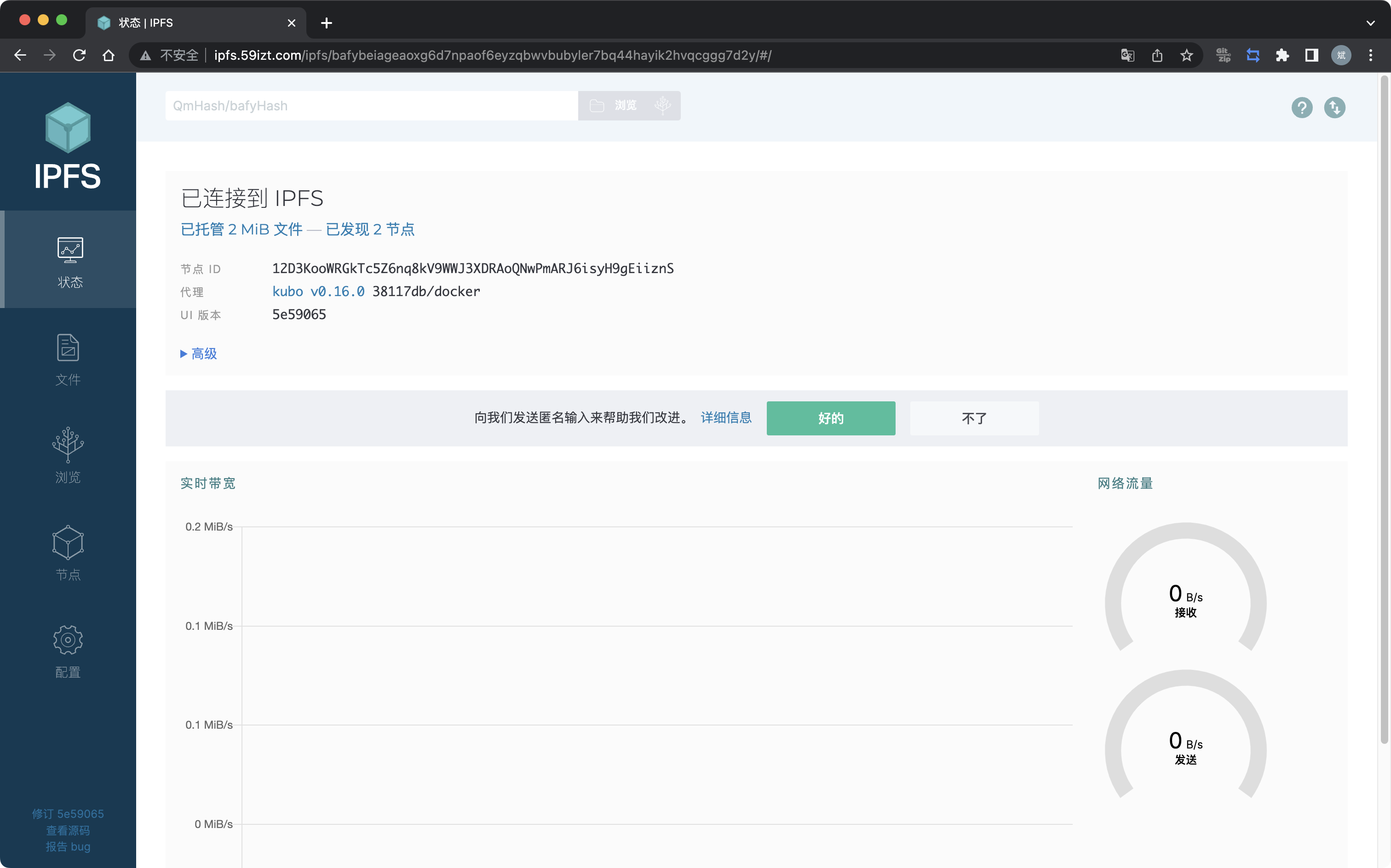
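The ipfs-cluster-ctl peers ls check above can also be scripted, for example as part of a CI job. The minimal sketch below reads that command's output on stdin and succeeds only if the expected number of peers is listed and each one sees all the others. The function name check_peers_ls is hypothetical, and its reliance on the "| Sees N other peers" summary line is an assumption based on the output shown above:

```shell
#!/bin/sh
# Hypothetical helper: read "ipfs-cluster-ctl peers ls" output on stdin and
# succeed only if exactly $1 peers are listed and each summary line reports
# "Sees <$1 - 1> other peers".
check_peers_ls() {
  awk -v n="$1" '
    / [|] Sees [0-9]+ other peers/ {
      total++
      for (i = 1; i <= NF; i++)        # the count is the field after "Sees"
        if ($i == "Sees") seen = $(i + 1)
      if (seen + 0 != n - 1) bad++
    }
    END { if (total == n && bad == 0) exit 0; exit 1 }
  '
}
```

For example: kubectl exec -n vonebfs-dev ipfs-cluster-0 -c ipfs-cluster -- ipfs-cluster-ctl peers ls | check_peers_ls 3. Leave out -it here so the output can be piped.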

Deploying the webui

Note: different versions of kubo (ipfs) ship different webui versions, so first find the CID of the default webui for your version.

Create the Ingress resource:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22cat <<EOF | kubectl apply -f -

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: ipfs-cluster

namespace: vonebfs-dev

annotations:

nginx.ingress.kubernetes.io/proxy-body-size: 20m

spec:

ingressClassName: nginx

rules:

- host: ipfs.59izt.com

http:

paths:

- backend:

service:

name: ipfs-cluster

port:

number: 5001

path: /

pathType: ImplementationSpecific

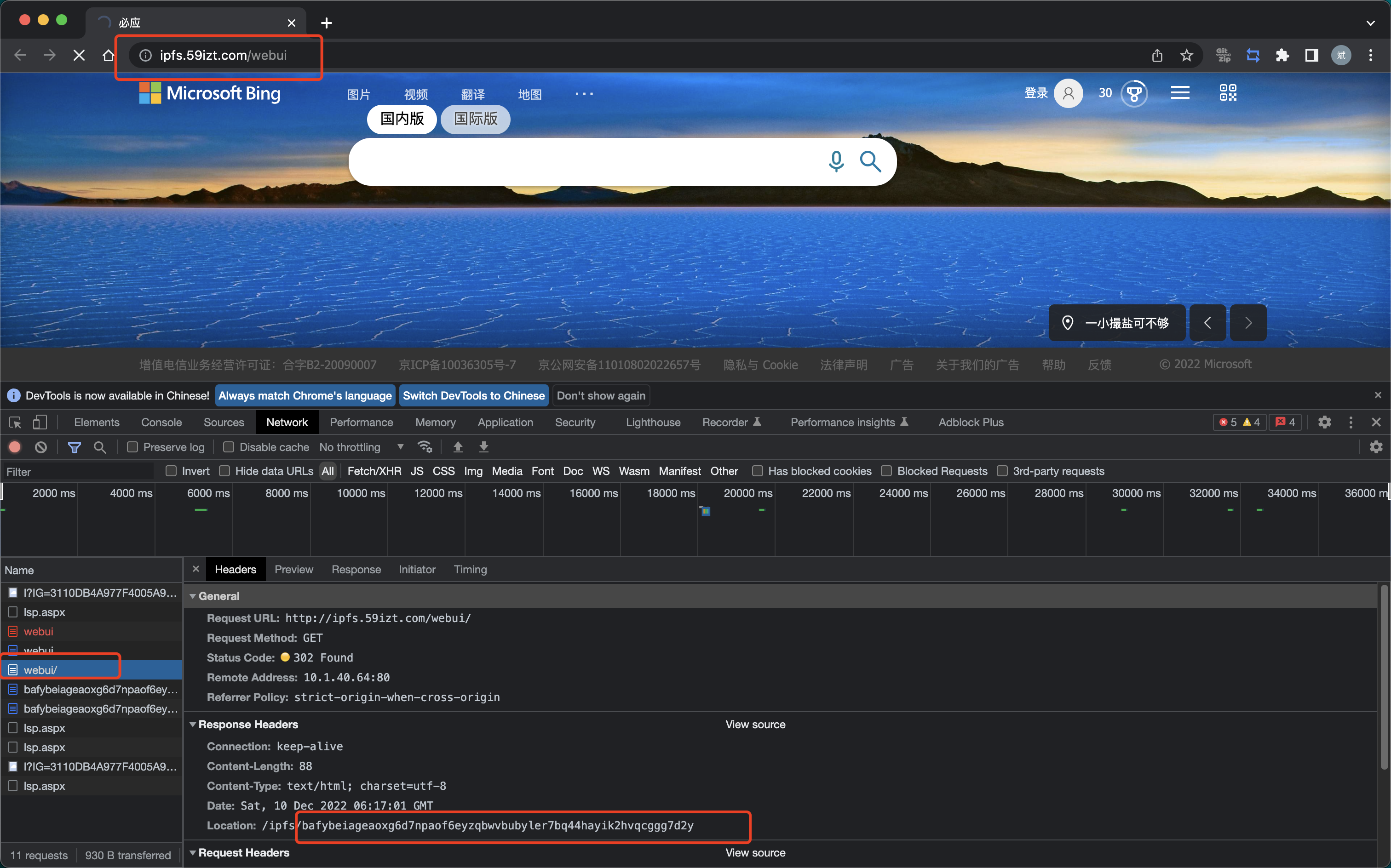

EOF配置 host 解析,打开浏览器开发者模式,访问

http://ipfs.59izt.com/webui,获取 webui 的 CID 值,如下

Download the webui files with the following command. The download URL has the form https://ipfs.io/ipfs/${CID}?format=car:

```shell
curl "https://ipfs.io/ipfs/bafybeiageaoxg6d7npaof6eyzqbwvbubyler7bq44hayik2hvqcggg7d2y?format=car" -o webui.car
```
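The download URL differs only by CID, so it can be built from a variable. The ?format=car parameter asks the gateway to return the DAG as a CAR archive instead of the file contents. In the sketch below, the function name car_url and its optional gateway argument are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: print the gateway URL that returns a CID as a CAR file.
# $1 = CID (required), $2 = gateway base URL (defaults to https://ipfs.io).
car_url() {
  [ -n "$1" ] || return 1
  printf '%s/ipfs/%s?format=car\n' "${2:-https://ipfs.io}" "$1"
}
```

For example: curl -fL "$(car_url "$CID")" -o webui.car. Here -f makes curl fail on HTTP errors and -L follows redirects.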

The file address is:

https://ipfs.io/ipfs/${CID}

Importing the webui into the cluster

```shell
# Copy the downloaded webui.car file into the ipfs container of any pod in the cluster
kubectl cp ~/webui.car -n vonebfs-dev ipfs-cluster-0:/tmp/webui.car -c ipfs

# Import webui.car
kubectl exec -it -n vonebfs-dev ipfs-cluster-0 -c ipfs -- ipfs dag import /tmp/webui.car
```

A successful import prints:

```shell
Pinned root bafybeiageaoxg6d7npaof6eyzqbwvbubyler7bq44hayik2hvqcggg7d2y success
```