Reference article

Deploying EFK with X-Pack Security enabled

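Starting with Elastic Stack 6.8 and 7.1, Elastic provides a set of security features free of charge as part of the default distribution (Basic license). They include encrypting network traffic with SSL/TLS, creating and managing users, defining roles that protect index-level and cluster-level access, and fully securing Kibana.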

Creating the certificates

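We will install a 3-node Elasticsearch cluster as a StatefulSet. Because the nodes talk to each other over SSL/TLS, we need to generate certificates for them. In the manifests below, TLS encrypts the traffic between nodes (the transport layer on port 9300); the HTTP API on port 9200 stays on plain HTTP, which is why the curl, Kibana and Fluentd connections later in this article use http://.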

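The following script, saved as generate_certificates.sh, creates the certificates by running elasticsearch-certutil inside Elastic's Docker image. It needs docker and openssl on the machine where you run it.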

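```bash
#!/bin/bash
# generate_certificates.sh
set -e

# CERT_PASSWD must match the keystore/truststore passwords set in es-sts.yaml
export CA_PASSWD=123456
export CERT_PASSWD=123456

if [[ -z $CA_PASSWD ]] || [[ -z $CERT_PASSWD ]] ; then
  echo " Provide CA Password [CA_PASSWD] and Cert password [CERT_PASSWD] before hand"
  exit 1
fi

rm -f elastic-certificates.p12 elastic-certificate.pem elastic-stack-ca.p12 || true

# Remove a container left over from a previous run; ignore the error if there is none
docker rm -f elastic-helm-charts-certs 2>/dev/null || true

# Create the CA, then a node certificate signed by it, inside the Elasticsearch image
docker run --name elastic-helm-charts-certs -i -w /app ${ELASTICSEARCH_IMAGE:-docker.elastic.co/elasticsearch/elasticsearch:7.17.5} \
  /bin/sh -c " \
    elasticsearch-certutil ca --out /app/elastic-stack-ca.p12 --pass '$CA_PASSWD' && \
    elasticsearch-certutil cert \
      --name elasticsearch-master-cert \
      --dns es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch \
      --ca /app/elastic-stack-ca.p12 \
      --pass '$CERT_PASSWD' \
      --ca-pass '$CA_PASSWD' \
      --out /app/elastic-certificates.p12"

# Separate commands (instead of an && chain) so that set -e stops at the first failure;
# mkdir -p also allows the script to be re-run when certs/ already exists
mkdir -p certs
docker cp elastic-helm-charts-certs:/app/elastic-stack-ca.p12 certs/
docker cp elastic-helm-charts-certs:/app/elastic-certificates.p12 certs/
docker rm -f elastic-helm-charts-certs

# $PWD (upper case) holds the current directory; $pwd is not set
echo "elastic certificate created successfully and stored at location $PWD/certs. "

# Extract the CA certificate in PEM format so that Kibana can verify Elasticsearch's TLS certificate.
# -nokeys exports certificates only; -nodes would also write the CA private key, unencrypted.
openssl pkcs12 -nokeys -passin pass:"$CA_PASSWD" -in certs/elastic-stack-ca.p12 -out certs/elastic-ca.pem

echo "CA certificate(pem format) created successfully and stored at location $PWD/certs. "
```

The script produces two PKCS#12 files: elastic-stack-ca.p12, the CA, and elastic-certificates.p12, the node certificate signed by that CA. The node certificate is mounted into the Elasticsearch Pods as a volume, and the CA certificate is additionally exported as elastic-ca.pem for Kibana.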
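The names passed to `--dns` follow the StatefulSet naming scheme `<statefulset>-<ordinal>.<service>` and have to cover every replica; the same list reappears in `discovery.seed_hosts` (and, without the Service suffix, in `cluster.initial_master_nodes`) in es-sts.yaml. If you change the replica count, a small helper can generate these lists so they stay in sync. This is a minimal sketch, not part of the original script; the function name `es_pod_names` is hypothetical.

```bash
# Hypothetical helper, not part of the original script.
# es_pod_names STS SVC N prints the comma-separated names of the N Pods of a StatefulSet:
#   with SVC set:   STS-0.SVC,STS-1.SVC,...  (for --dns and discovery.seed_hosts)
#   with SVC empty: STS-0,STS-1,...          (for cluster.initial_master_nodes)
# POSIX sh has no local variables, so the helper uses underscore-prefixed globals.
es_pod_names() {
  _sts=$1
  _svc=$2
  _n=$3
  _i=0
  _out=""
  while [ "$_i" -lt "$_n" ]; do
    _name="$_sts-$_i"
    if [ -n "$_svc" ]; then
      _name="$_name.$_svc"
    fi
    # Append with a comma separator, except before the first entry
    _out="${_out:+$_out,}$_name"
    _i=$((_i + 1))
  done
  printf '%s\n' "$_out"
}
```

For example, `--dns "$(es_pod_names es-cluster elasticsearch 3)"` reproduces the list used in the script, and `es_pod_names es-cluster '' 3` gives the value of `cluster.initial_master_nodes`.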

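```
# ./generate_certificates.sh
This tool assists you in the generation of X.509 certificates and certificate
signing requests for use with SSL/TLS in the Elastic stack.
... (N lines omitted) ...
elastic certificate created successfully and stored at location /certs.
CA certificate(pem format) created successfully and stored at location /certs.
```

The generated files are written to the certs directory under the directory the script is run from. The captured output shows /certs because it was produced with `$pwd` (lower case) in the echo lines, which is not a shell variable and expands to an empty string; `$PWD` holds the current directory.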
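The CA file handed to Kibana only needs the CA certificate. OpenSSL's `pkcs12 -nodes` option exports the private key as well (unencrypted), whereas `-nokeys` exports certificates only, so it is worth checking elastic-ca.pem before storing it in a Secret. The helpers below are a hypothetical sketch that only looks at the PEM block headers with grep; `openssl x509 -in certs/elastic-ca.pem -noout -subject` is the usual way to inspect the certificate itself.

```bash
# Hypothetical helpers: inspect PEM block headers only, without parsing the certificates.

# Succeeds (exit status 0) if the file contains any kind of PRIVATE KEY block
# (PRIVATE KEY, RSA PRIVATE KEY, ENCRYPTED PRIVATE KEY, ...).
pem_has_private_key() {
  grep -q -e '-----BEGIN [A-Z ]*PRIVATE KEY-----' "$1"
}

# Prints how many certificates the file contains.
pem_cert_count() {
  grep -c -e '-----BEGIN CERTIFICATE-----' "$1"
}
```

Usage: `pem_has_private_key certs/elastic-ca.pem && echo "elastic-ca.pem contains a private key"` and `pem_cert_count certs/elastic-ca.pem`.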

```
# ls certs/
elastic-ca.pem  elastic-certificates.p12  elastic-stack-ca.p12
```

Create the namespace for the EFK deployment

```bash
kubectl create ns logging
```

Create Secrets holding the Elastic certificates and the username and password

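```bash
# Secret used by the Elasticsearch StatefulSet (node certificate)
kubectl create secret -n logging generic elastic-certs --from-file=certs/elastic-certificates.p12

# Secret used by Kibana (CA certificate in PEM format)
kubectl create secret -n logging generic elastic-ca-pem --from-file=certs/elastic-ca.pem

# Secret holding the username and password used to connect to Elasticsearch.
# ELASTIC_PASSWORD in es-sts.yaml sets the password of the built-in elastic user,
# so the username must be elastic.
kubectl create secret -n logging generic elastic-auth --from-literal=username=elastic --from-literal=password=abc123456
```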
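`kubectl create secret generic --from-literal` stores each value base64-encoded under the Secret's `.data` field. If you prefer to keep the Secret as a manifest, `kubectl create secret ... --dry-run=client -o yaml` prints it; the hypothetical helper below builds the equivalent manifest for elastic-auth by hand, mainly to show what ends up in the object. Keep in mind that base64 is an encoding, not encryption.

```bash
# Hypothetical helpers: print a Secret manifest equivalent to the elastic-auth command above.

# base64-encode a value without the trailing newline that base64 adds
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# elastic_auth_manifest USER PASSWORD
elastic_auth_manifest() {
  _user=$1
  _pass=$2
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: elastic-auth
  namespace: logging
type: Opaque
data:
  username: $(b64 "$_user")
  password: $(b64 "$_pass")
EOF
}
```

For example, `elastic_auth_manifest elastic abc123456 | kubectl apply -f -` would create the same Secret declaratively.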

Deploying the Elasticsearch cluster

Create es-sts.yaml with the following content:

```yaml
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  labels:
    app: elasticsearch
  namespace: logging
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
  namespace: logging
  labels:
    app: elasticsearch
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      volumes:
        - name: elastic-certs
          secret:
            secretName: elastic-certs
      containers:
        - name: elasticsearch
          image: elasticsearch:7.17.5
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
            - name: elastic-certs
              mountPath: /usr/share/elasticsearch/config/certs
          env:
            - name: cluster.name
              value: k8s-logs
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            - name: ES_JAVA_OPTS
              value: "-Xms1g -Xmx1g"
            - name: xpack.security.enabled
              value: "true"
            - name: xpack.security.transport.ssl.enabled
              value: "true"
            - name: xpack.security.transport.ssl.verification_mode
              value: certificate
            - name: xpack.security.transport.ssl.keystore.path
              value: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
            - name: xpack.security.transport.ssl.truststore.path
              value: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
            # The passwords below must match CERT_PASSWD in generate_certificates.sh
            - name: xpack.security.transport.ssl.keystore.password
              value: "123456"
            - name: xpack.security.transport.ssl.truststore.password
              value: "123456"
            - name: xpack.security.http.ssl.keystore.password
              value: "123456"
            - name: xpack.security.http.ssl.truststore.password
              value: "123456"
            # Bootstrap password of the built-in elastic user
            - name: ELASTIC_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: password
            - name: ELASTIC_USERNAME
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: username
      initContainers:
        - name: fix-permissions
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        - name: increase-vm-max-map
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # Note: ulimit only applies to this init container's own shell;
        # it does not raise the open-file limit of the elasticsearch container.
        - name: increase-fd-ulimit
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteOnce" ]
        # Use a StorageClass that exists in your cluster
        storageClassName: "glusterfs"
        resources:
          requests:
            storage: 30Gi
```

Deploy the Elasticsearch cluster:

```bash
kubectl create -f es-sts.yaml

# Check that the Pods are running
kubectl get pods -n logging -owide
```

Check the cluster status:

```bash
# Temporarily forward port 9200 of es-cluster-0 to the local machine
kubectl port-forward -n logging es-cluster-0 9200:9200

# In a second terminal, query the cluster health
curl --user elastic:abc123456 -XGET http://localhost:9200/_cluster/health/?pretty
{
  "cluster_name" : "k8s-logs",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 3,
  "active_primary_shards" : 1,
  "active_shards" : 2,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
```
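Right after `kubectl create`, the nodes need some time to form a cluster, so the first health calls may fail or report yellow or red. Below is a minimal polling sketch, assuming the port-forward above is running; `ES_URL` and `ES_AUTH` are hypothetical variables that default to the values used in this article. Elasticsearch can also wait on the server side with `GET _cluster/health?wait_for_status=green&timeout=60s`.

```bash
# Hypothetical helpers; ES_URL and ES_AUTH are assumed variables defaulting to this article's values.

# Reads a _cluster/health JSON document on stdin and prints the value of "status"
# (green, yellow or red); prints nothing if there is no string "status" field.
health_status() {
  tr -d '\n' | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Polls the health API every 5 seconds until the cluster is green.
# Returns 1 after the given number of attempts (default 30).
wait_for_green() {
  _url=${ES_URL:-http://localhost:9200}
  _auth=${ES_AUTH:-elastic:abc123456}
  _left=${1:-30}
  while [ "$_left" -gt 0 ]; do
    _status=$(curl -s --user "$_auth" "$_url/_cluster/health" | health_status)
    echo "cluster status: ${_status:-unavailable}"
    if [ "$_status" = "green" ]; then
      return 0
    fi
    _left=$((_left - 1))
    sleep 5
  done
  return 1
}
```

Usage: `wait_for_green 60 && echo "cluster is ready"`. An error response (for example a 401 with a numeric `"status"`) yields an empty status, so the loop keeps waiting instead of treating it as healthy.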

Deploying Kibana

Create kibana.yaml with the following content:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: logging
spec:
  selector:
    app: kibana
  type: ClusterIP
  ports:
    - port: 5601
      targetPort: 5601
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  labels:
    app: kibana
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          # Keep Kibana on the same version as Elasticsearch (7.17.5)
          image: kibana:7.17.5
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            # Kibana 7.x reads the Elasticsearch address from ELASTICSEARCH_HOSTS
            # (elasticsearch.hosts); ELASTICSEARCH_URL was the 6.x setting
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch.logging.svc.cluster.local:9200
            - name: ELASTICSEARCH_USERNAME
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: username
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: password
            - name: xpack.security.enabled
              value: "true"
            # The elasticsearch.ssl.* settings only take effect for an https:// address
            - name: elasticsearch.ssl.certificateAuthorities
              value: /usr/share/kibana/config/certs/elastic-ca.pem
            - name: elasticsearch.ssl.verificationMode
              value: certificate
            - name: kibana.index
              value: ".kibana"
          volumeMounts:
            - name: elastic-ca
              mountPath: /usr/share/kibana/config/certs
          ports:
            - containerPort: 5601
      volumes:
        - name: elastic-ca
          secret:
            secretName: elastic-ca-pem
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kibana
  namespace: logging
  annotations:
    nginx.ingress.kubernetes.io/proxy-body-size: 20m
spec:
  ingressClassName: nginx
  rules:
    - host: kibana-arm64.vonebaas.com
      http:
        paths:
          - backend:
              service:
                name: kibana
                port:
                  number: 5601
            path: /
            pathType: ImplementationSpecific
```

Create the Kibana resources:

```bash
kubectl create -f kibana.yaml
```

配置 Host 解析,打开浏览器访问

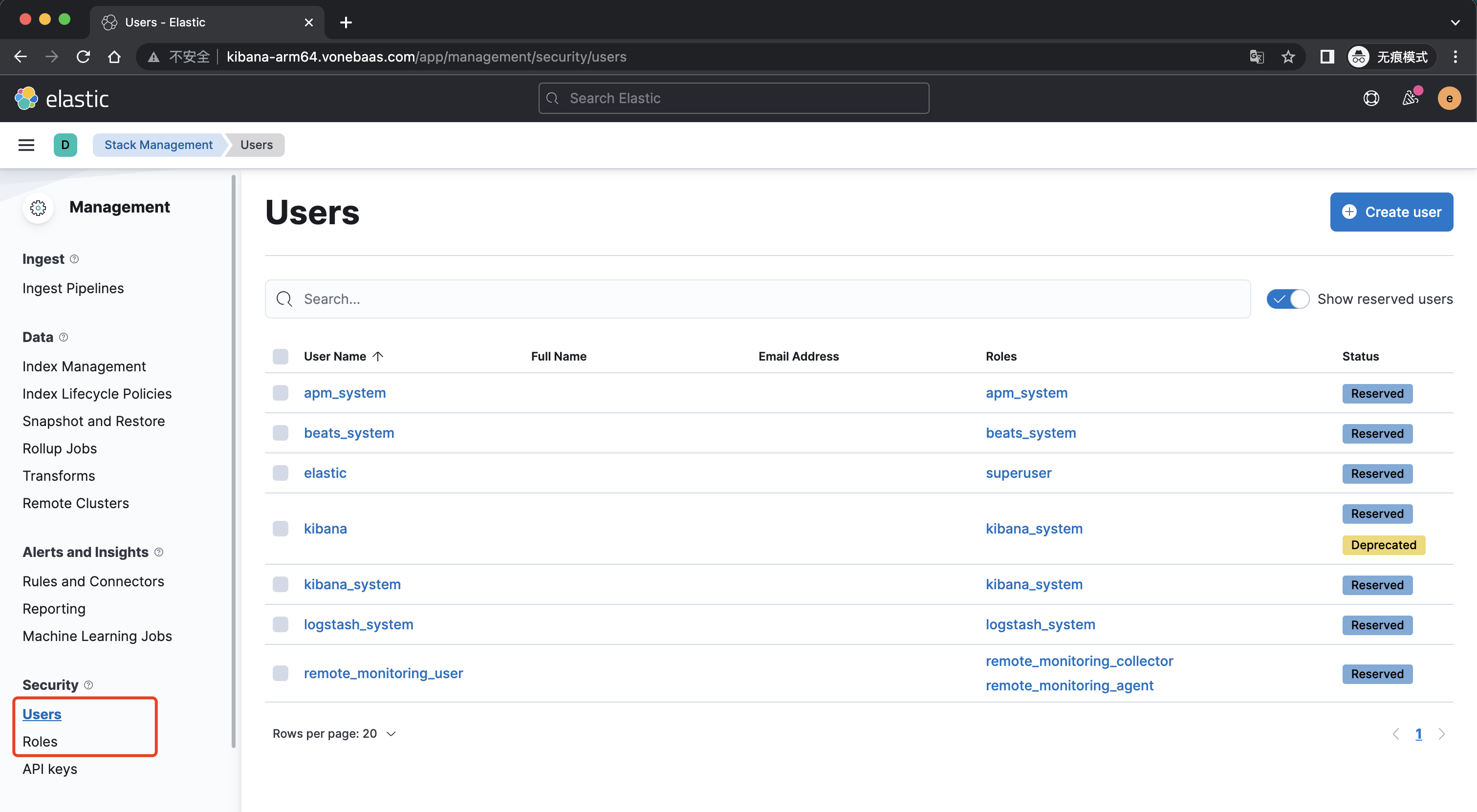

http://kibana-arm64.vonebaas.com,如下所示,Security 页面出现 Uers 以及 Roles 即代表认证启用正常

Deploying Fluentd to collect Pod logs

Create the custom Fluentd configuration file fluentd-configmap.yaml with the following content:
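Container log files under /var/log/containers come in one of two line formats, depending on the runtime. Docker's json-file driver writes one JSON object per line, such as `{"log":"hello world\n","stream":"stdout","time":"2022-08-01T02:00:00.123456789Z"}`, while CRI runtimes such as containerd write `<time> <stream> <tag> <log>`, such as `2022-08-01T10:00:00.123456789+08:00 stdout F hello world`. The `<parse>` section of the Fluentd source in the ConfigMap tries the JSON pattern first and falls back to a regular expression for the CRI format. The sketch below applies the same regular expression with `sed -E` to show which part of a CRI line lands in each field; it is an illustration only, and `cri_field` is a hypothetical name.

```bash
# Same regular expression as the second <pattern> in fluentd-configmap.yaml, in POSIX ERE
# syntax (sed has no named groups, so the groups are numbered):
#   \1 = time, \2 = stream, \3 = log
CRI_RE='^(.+) (stdout|stderr) [^ ]* (.*)$'

# cri_field LINE N: print capture group N of LINE, or nothing if LINE does not match.
cri_field() {
  printf '%s\n' "$1" | sed -E -n "s/$CRI_RE/\\$2/p"
}
```

A Docker JSON line does not match this expression, which is why the JSON pattern has to come first in the `multi_format` list.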

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: fluentd-config
  namespace: logging
data:
  system.conf: |-
    <system>
      root_dir /tmp/fluentd-buffers/
    </system>
  containers.input.conf: |-
    # Log source
    <source>
      # Unique ID of this source; can be referenced later for further processing
      @id fluentd-containers.log
      # Built-in input plugin that keeps reading new lines appended to the source files
      @type tail
      # Container log files on the node (mounted from the host)
      path /var/log/containers/*.log
      # Position file: after a restart, Fluentd resumes collection from the offsets stored here
      pos_file /var/log/es-containers.log.pos
      # Tag for the events; * is replaced by the path of the log file
      tag raw.kubernetes.*
      read_from_head true
      # multi_format (multi-format-parser plugin) tries each <pattern> in order:
      # Docker json-file lines first, then CRI (containerd) text lines
      <parse>
        @type multi_format
        <pattern>
          # JSON parser
          format json
          # Field that holds the event time
          time_key time
          # Time format
          time_format %Y-%m-%dT%H:%M:%S.%NZ
        </pattern>
        <pattern>
          format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/
          time_format %Y-%m-%dT%H:%M:%S.%N%:z
        </pattern>
      </parse>
    </source>
    # Match events tagged raw.kubernetes.**
    <match raw.kubernetes.**>
      @id raw.kubernetes
      # detect-exceptions plugin: merges multi-line exception stack traces into one event
      @type detect_exceptions
      # Remove the raw prefix from the tag
      remove_tag_prefix raw
      message log
      stream stream
      multiline_flush_interval 5
      max_bytes 500000
      max_lines 1000
    </match>
    # Add Kubernetes metadata
    <filter kubernetes.**>
      @id filter_kubernetes_metadata
      @type kubernetes_metadata
    </filter>
    # Fix JSON fields in ES: parse the log field so that JSON log lines become structured fields
    <filter kubernetes.**>
      @id filter_parser
      # Built-in parser filter; the multi_format parser below comes from multi-format-parser
      @type parser
      # Name of the field to parse in each record
      key_name log
      # Keep the original key/value pairs in the parsed result
      reserve_data true
      # Remove the key_name field once it has been parsed successfully
      remove_key_name_field true
      <parse>
        @type multi_format
        <pattern>
          format json
        </pattern>
        <pattern>
          format none
        </pattern>
      </parse>
    </filter>
    # Remove some redundant attributes
    <filter kubernetes.**>
      @type record_transformer
      remove_keys $.docker.container_id,$.kubernetes.container_image_id,$.kubernetes.pod_id,$.kubernetes.namespace_id,$.kubernetes.master_url,$.kubernetes.labels.pod-template-hash
    </filter>
    # Only keep logs from Pods labeled logging=true
    <filter kubernetes.**>
      @id filter_log
      @type grep
      <regexp>
        key $.kubernetes.labels.logging
        pattern ^true$
      </regexp>
    </filter>
  forward.input.conf: |- # Listener configuration, generally used for log aggregation
    <source>
      @id forward
      @type forward
    </source>
  output.conf: |- # Routing configuration: send the processed records to Elasticsearch
    # ** matches every tag, so all records are sent to Elasticsearch
    <match **>
      # Unique ID of this output
      @id elasticsearch
      # Output plugin: Elasticsearch
      @type elasticsearch
      # Log level of this plugin's own messages; it does not filter the collected records by level
      @log_level info
      include_tag_key true
      # Elasticsearch address (the elasticsearch Service in the logging namespace)
      host elasticsearch
      port 9200
      # Must match the credentials in the elastic-auth Secret
      user elastic
      password abc123456
      # Write structured records to time-based indices in Logstash style
      logstash_format true
      # Index prefix k8s
      logstash_prefix k8s
      request_timeout 30s
      # File buffer, so that records are kept while Elasticsearch is unavailable
      <buffer>
        @type file
        path /var/log/fluentd-buffers/kubernetes.system.buffer
        flush_mode interval
        retry_type exponential_backoff
        flush_thread_count 2
        flush_interval 5s
        retry_forever
        retry_max_interval 30
        chunk_limit_size 2M
        queue_limit_length 8
        overflow_action block
      </buffer>
    </match>
```

Create the fluentd-ds.yaml manifest with the following content:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  namespace: logging
  labels:
    app: fluentd
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - namespaces
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: logging
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  namespace: logging
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1.14.6-debian-elasticsearch7-1.1
          imagePullPolicy: IfNotPresent
          env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.logging.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
            - name: FLUENT_UID
              value: "0"
            - name: FLUENT_ELASTICSEARCH_SSL_VERIFY
              value: "true"
            - name: FLUENT_ELASTICSEARCH_SSL_VERSION
              value: "TLSv1_2"
            - name: FLUENT_ELASTICSEARCH_USER
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: username
            - name: FLUENT_ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elastic-auth
                  key: password
          resources:
            limits:
              memory: 512Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            # Must match the Docker data directory on the nodes (the default is
            # /var/lib/docker/containers); the files under /var/log/containers link into it
            - name: varlibdockercontainers
              mountPath: /data/docker/containers
              readOnly: true
            - name: varlogpods
              mountPath: /var/log/pods
              readOnly: true
            - name: config-volume
              mountPath: /fluentd/etc/conf.d
      terminationGracePeriodSeconds: 30
      # Fluentd only runs on nodes labeled fluentd=true
      nodeSelector:
        fluentd: "true"
      volumes:
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /data/docker/containers
        - name: varlogpods
          hostPath:
            path: /var/log/pods
        - name: config-volume
          configMap:
            name: fluentd-config
```

Create the Fluentd resources:

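```bash
kubectl create -f fluentd-configmap.yaml
kubectl create -f fluentd-ds.yaml
```

Label the nodes whose logs should be collected (the DaemonSet's nodeSelector only schedules Fluentd onto nodes labeled fluentd=true):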

```bash
kubectl label nodes k8s-master01 fluentd=true
kubectl label nodes k8s-master02 fluentd=true
kubectl label nodes k8s-master03 fluentd=true
kubectl label nodes k8s-node01 fluentd=true
kubectl label nodes k8s-node02 fluentd=true
```

Check the status of the Fluentd Pods:

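```
# kubectl get pods -n logging
NAME            READY   STATUS    RESTARTS   AGE
es-cluster-0    1/1     Running   0          13m
es-cluster-1    1/1     Running   0          12m
es-cluster-2    1/1     Running   0          12m
fluentd-dh28v   1/1     Running   0          52s
fluentd-ffwrr   1/1     Running   0          52s
fluentd-hz9nl   1/1     Running   0          52s
fluentd-kvzlf   1/1     Running   0          52s
fluentd-x26rd   1/1     Running   0          52s
```

Once the Fluentd Pods are running, add the label logging=true to the Pod templates of the running workloads whose logs should be collected; the grep filter in the Fluentd configuration only keeps records from Pods with this label. Changing the Pod template triggers a rolling update, so the Pods are recreated with the label. The example below patches every Deployment in the vbaas-cli-sit and vbaas-ops-sit namespaces: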

```bash
kubectl patch deployments.apps -n vbaas-cli-sit $(kubectl get deploy -n vbaas-cli-sit |grep -v NAME |awk '{print $1}') -p '{"spec": {"template": {"metadata": {"labels": {"logging": "true"}}}}}'

kubectl patch deployments.apps -n vbaas-ops-sit $(kubectl get deploy -n vbaas-ops-sit |grep -v NAME |awk '{print $1}') -p '{"spec": {"template": {"metadata": {"labels": {"logging": "true"}}}}}'
```
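The commands above list Deployments by filtering the table output with `grep -v NAME | awk '{print $1}'`. `kubectl get deploy -o name` prints `deployment.apps/<name>` without a header line, which makes the same operation a little more robust. The function below is a hypothetical sketch of that variant; like the original commands, it changes the Pod template and therefore restarts every Deployment in the namespace.

```bash
# Hypothetical helper: add logging=true to the Pod template of every Deployment in a namespace.
# Strategic merge patch that only adds one label to the Pod template
LOGGING_PATCH='{"spec":{"template":{"metadata":{"labels":{"logging":"true"}}}}}'

# enable_logging_for_namespace NAMESPACE
enable_logging_for_namespace() {
  _ns=$1
  # -o name prints "deployment.apps/<name>", so there is no header line to filter out
  for _d in $(kubectl get deploy -n "$_ns" -o name); do
    kubectl patch -n "$_ns" "$_d" -p "$LOGGING_PATCH"
  done
}
```

Usage: `enable_logging_for_namespace vbaas-cli-sit`.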

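Note: if the Fluentd logs report that it cannot connect to Elasticsearch, try changing the password of the elastic user on the Stack Management → Users page in Kibana; you can set it to the same password as before.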
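With `logstash_format true` and `logstash_prefix k8s`, fluent-plugin-elasticsearch writes to daily indices named `k8s-YYYY.MM.DD`, dated from the event time in UTC by default (its `utc_index` option). One way to confirm that logs are arriving is to list those indices, for example through the port-forward used earlier. The sketch below assumes that port-forward and this article's credentials; both function names and the `ES_URL`/`ES_AUTH` variables are hypothetical.

```bash
# Hypothetical helpers; ES_URL and ES_AUTH are assumed variables defaulting to this article's values.

# Prints the name of today's index (UTC date), in the form k8s-YYYY.MM.DD
todays_index() {
  printf 'k8s-%s\n' "$(date -u +%Y.%m.%d)"
}

# Lists all k8s-* indices (health, document count, size) via the _cat API
list_k8s_indices() {
  curl -s --user "${ES_AUTH:-elastic:abc123456}" \
    "${ES_URL:-http://localhost:9200}/_cat/indices/k8s-*?v"
}
```

Usage: `list_k8s_indices | grep "$(todays_index)"`. In Kibana, an index pattern such as `k8s-*` then makes these indices searchable.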