Official Logstash documentation: https://www.elastic.co/

Logstash

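Logstash is an open-source data collection engine with real-time pipelining capabilities. It can dynamically unify data from disparate sources and normalize that data into the destinations of your choice, cleansing and democratizing all of your data for a variety of advanced downstream analytics and visualization use cases.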

Deploying Logstash

Multiple Logstash instances can be run, but this example does not need them. What follows is therefore a Deployment with a single instance.

Create the resource manifest for the Logstash configuration file ``

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: logstash-configmap
  namespace: logging
data:
  logstash.yml: |-
    http.host: "0.0.0.0"
    path.config: /usr/share/logstash/pipeline
    config.reload.automatic: "true"
    config.reload.interval: "3s"
  logstash.conf: |-
    input {
      syslog {
        port => 3514
        type => "system-syslog"
      }
    }
    filter {
      ruby {
        # Set a custom field 'logtime' (the name is arbitrary) to the timestamp generated by Logstash minus 8 hours
        code => "event.set('logtime', event.get('@timestamp').time.localtime - 8*3600)"
      }
      ruby {
        # Assign the value of the custom time field back to @timestamp
        code => "event.set('@timestamp',event.get('logtime'))"
      }
      mutate {
        # Remove fields that are not needed
        remove_field => ["logtime", "@version"]
      }
    }
    output {
      elasticsearch {
        action => "index"
        hosts => [ "${ES_HOSTS}" ]
        user => "${ES_USER}"
        password => "${ES_PASSWORD}"
        index => "syslog-%{+YYYY.MM.dd}"
      }
      #stdout { codec => rubydebug }
    }
```

Note: the server and the container are both set to the China time zone (UTC+8), so the @timestamp that Logstash generates already reads as local time. Kibana treats @timestamp as UTC and converts it to the browser's time zone, so Discover shows times 8 hours later than the actual China time. The filter block therefore reassigns @timestamp, subtracting 8 hours.
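To make the 8-hour correction concrete, here is a minimal shell sketch of the same arithmetic the ruby filter performs. It assumes GNU `date` (for the `-d` option). The sample timestamp and the `shift_minus_8h` helper name are made up for illustration and are not part of the Logstash configuration.

```shell
#!/usr/bin/env bash
# Illustration only: mirrors the "timestamp minus 8*3600 seconds" step of the ruby filter.
# Assumes GNU date; shift_minus_8h is a hypothetical helper name.
shift_minus_8h() {
  local epoch
  epoch=$(date -u -d "$1" +%s) || return 1   # parse an ISO 8601 timestamp into epoch seconds
  date -u -d "@$((epoch - 8 * 3600))" +%Y-%m-%dT%H:%M:%SZ
}

# A local wall-clock time of 16:30 that was stored as if it were UTC
shift_minus_8h "2024-01-01T16:30:00Z"   # prints 2024-01-01T08:30:00Z
```

After the shift, Kibana's conversion back to UTC+8 would display 16:30 again, which is the wall-clock time the log line was written at.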

Create the manifest for the Logstash Deployment and its Service. The Service uses the NodePort type to expose the port outside the cluster, so that external machines can send their rsyslog traffic to it.

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: logstash
  name: logstash
  namespace: logging
spec:
  ports:
  - name: "tcp-3514"
    port: 3514
    targetPort: 3514
    nodePort: 32514
  selector:
    app: logstash
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash-deployment
  namespace: logging
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
            - matchExpressions:
              - key: kubernetes.io/hostname
                operator: In
                values:
                - k8s-prod-chain-node01
              - key: node-role.kubernetes.io/edge
                operator: DoesNotExist
              - key: node-role.kubernetes.io/agent
                operator: DoesNotExist
      containers:
      - name: logstash
        env:
        - name: ES_USER
          valueFrom:
            secretKeyRef:
              name: elastic-auth
              key: username
        - name: ES_PASSWORD
          valueFrom:
            secretKeyRef:
              name: elastic-auth
              key: password
        - name: ES_HOSTS
          value: "elasticsearch.logging.svc.cluster.local:9200"
        image: logstash:7.17.5
        imagePullPolicy: IfNotPresent
        securityContext:
          privileged: true
        ports:
        - containerPort: 3514
          name: tcp-3514
          protocol: TCP
        volumeMounts:
        - name: config-volume
          mountPath: /usr/share/logstash/config/logstash.yml
          subPath: logstash.yml
        - name: logstash-pipeline-volume
          mountPath: /usr/share/logstash/pipeline
        - name: time-config
          mountPath: /etc/localtime
        resources:
          limits:
            memory: "2Gi"
            cpu: "1000m"
          requests:
            memory: "2Gi"
            cpu: "1000m"
      volumes:
      - name: config-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.yml
            path: logstash.yml
      - name: logstash-pipeline-volume
        configMap:
          name: logstash-configmap
          items:
          - key: logstash.conf
            path: logstash.conf
      - name: time-config
        hostPath:
          path: /etc/localtime
```

Create the Logstash resource objects
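The Deployment reads ES_USER and ES_PASSWORD from a Secret named elastic-auth, with the keys username and password, so that Secret has to exist in the logging namespace before the pod can start. The original does not show how it was created. The following is a minimal sketch that renders such a manifest. The `render_elastic_auth_secret` helper and the credentials passed to it are placeholders, not values from this setup.

```shell
#!/usr/bin/env bash
# Sketch: render a Secret manifest with the name and keys the Deployment references.
# render_elastic_auth_secret is a hypothetical helper; the credentials are placeholders.
render_elastic_auth_secret() {
  local user_b64 pass_b64
  # Values under "data" must be base64-encoded; strip newlines base64 may add when wrapping
  user_b64=$(printf '%s' "$1" | base64 | tr -d '\n')
  pass_b64=$(printf '%s' "$2" | base64 | tr -d '\n')
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: elastic-auth
  namespace: logging
type: Opaque
data:
  username: ${user_b64}
  password: ${pass_b64}
EOF
}

# Print the manifest for review (placeholder credentials)
render_elastic_auth_secret "elastic" "changeme"
```

Piping the output to `kubectl apply -f -` would create the Secret. Substitute the real Elasticsearch user and password for the placeholders first.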

```shell
kubectl create -f logstash-configmap.yaml
kubectl create -f logstash-deployment.yaml
```

Configure the rsyslog service on each server whose logs are to be collected by adding the following line to `/etc/rsyslog.conf`:

```
*.* @@172.26.112.14:32514
```

Note: 172.26.112.14 is the IP address of the node on which the Logstash pod runs. In a highly available cluster it can be replaced with the VIP address.
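In the rsyslog rule, the `@@` prefix selects forwarding over TCP, while a single `@` would select UDP. The sketch below builds the rule from its parts to make that mapping explicit. `forward_rule` is a hypothetical helper for illustration, not an rsyslog command.

```shell
#!/usr/bin/env bash
# Sketch: build an rsyslog forwarding rule. In rsyslog's selector/action syntax,
# "@@host:port" forwards over TCP and "@host:port" forwards over UDP.
# forward_rule is a hypothetical helper, not part of rsyslog.
forward_rule() {
  local host=$1 port=$2 proto=${3:-tcp} prefix
  case "$proto" in
    tcp) prefix='@@' ;;
    udp) prefix='@' ;;
    *) echo "unsupported protocol: $proto" >&2; return 1 ;;
  esac
  printf '*.* %s%s:%s\n' "$prefix" "$host" "$port"
}

forward_rule 172.26.112.14 32514 tcp   # prints *.* @@172.26.112.14:32514
```

The Service above declares its port without a protocol, which Kubernetes treats as TCP, so only the `@@` form reaches Logstash through NodePort 32514 as configured. rsyslog has to be restarted after the file is changed, for example with `systemctl restart rsyslog` on systemd hosts.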

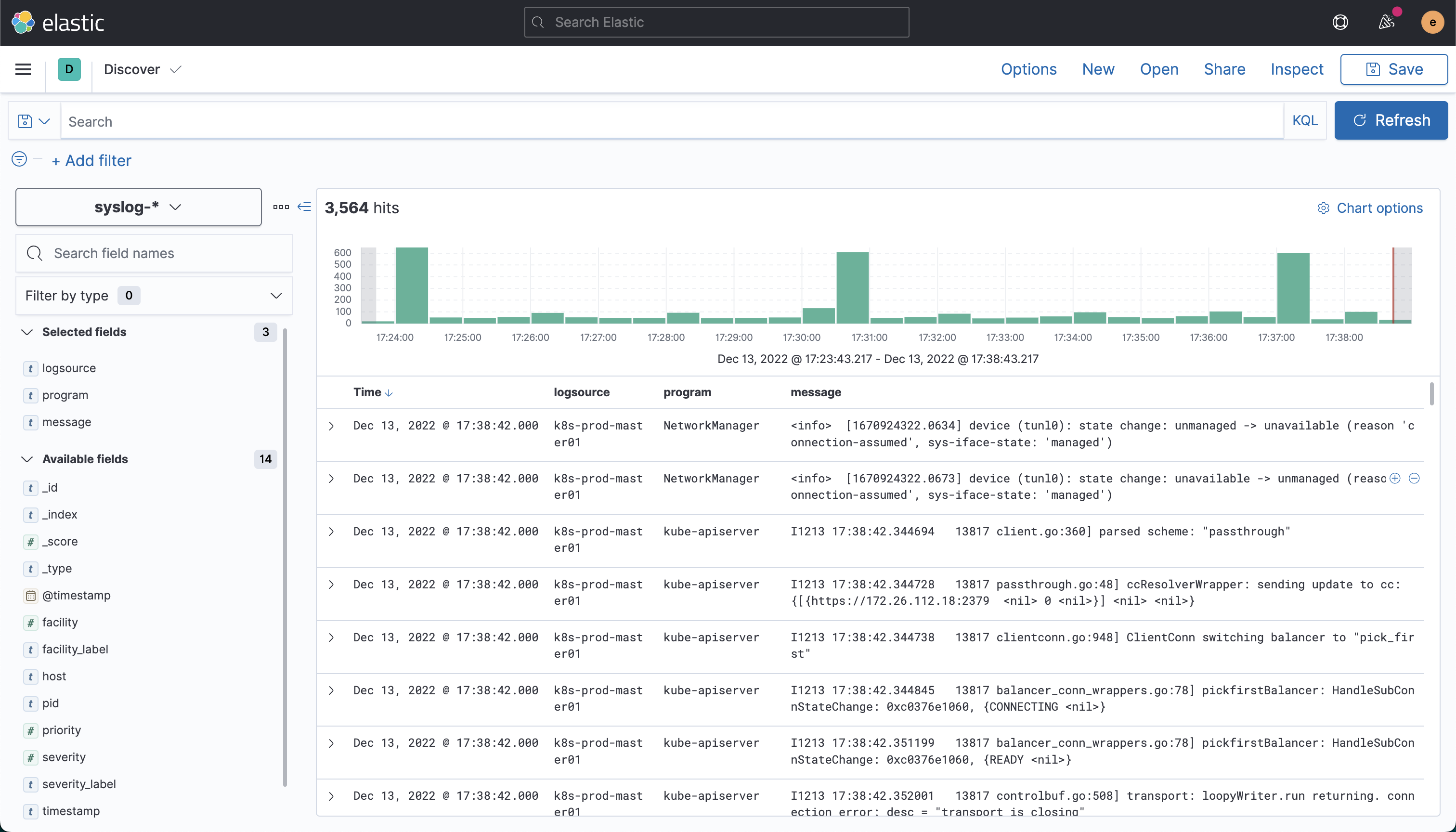

Log in to Kibana and open the menu Stack Management -> Kibana -> Index Patterns -> Create index pattern to create a new index pattern.
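Because the output block writes to `syslog-%{+YYYY.MM.dd}`, Logstash creates one index per day, with the date taken from @timestamp in UTC. An index pattern such as `syslog-*` would match all of them. That pattern is an assumption, since the original does not name the pattern it used. The sketch below shows the naming with GNU `date`. `index_for_epoch` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: compute the daily index name that "syslog-%{+YYYY.MM.dd}" yields for a given
# event time (epoch seconds, interpreted in UTC). Assumes GNU date.
# index_for_epoch is a hypothetical helper name.
index_for_epoch() {
  date -u -d "@$1" +syslog-%Y.%m.%d
}

index=$(index_for_epoch 1704069000)   # 2024-01-01T00:30:00Z
echo "$index"                          # prints syslog-2024.01.01

# A glob-style pattern "syslog-*" (assumed here) matches every daily index
case "$index" in
  syslog-*) echo "matched by syslog-*" ;;
esac
```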