Architecture and environment

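This article shows how to deploy a highly available Kubernetes (k8s) cluster from binaries. The Kubernetes version used is 1.25.x. The deployment uses 5 virtual machines running CentOS 7.9: 3 Masters and 2 worker Nodes. The cluster configuration is as follows.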

Cluster network ranges

- k8s host network: 10.1.40.0/24

- k8s Service network: 10.96.0.0/16

- k8s Pod network: 192.16.0.0/16
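The three ranges must not overlap. Below is a minimal bash sketch for checking this before you deploy. It handles IPv4 only, and the helpers `ip2int`, `cidr_bounds` and `cidrs_overlap` are hypothetical names for illustration, not part of any tool:

```bash
#!/usr/bin/env bash
# Sketch: check that IPv4 CIDR ranges do not overlap (pure bash arithmetic).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Print the first and last address of a CIDR range as integers.
cidr_bounds() {
  local ip=${1%/*} len=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  local start=$(( $(ip2int "$ip") & mask ))
  echo "$start $(( start | (~mask & 0xFFFFFFFF) ))"
}

# Succeed (exit status 0) if the two ranges share at least one address.
cidrs_overlap() {
  local a_start a_end b_start b_end
  read -r a_start a_end <<< "$(cidr_bounds "$1")"
  read -r b_start b_end <<< "$(cidr_bounds "$2")"
  (( a_start <= b_end && b_start <= a_end ))
}

# Host, Service and Pod ranges from the plan above.
HOST_CIDR=10.1.40.0/24 SVC_CIDR=10.96.0.0/16 POD_CIDR=192.16.0.0/16
for pair in "$HOST_CIDR $SVC_CIDR" "$HOST_CIDR $POD_CIDR" "$SVC_CIDR $POD_CIDR"; do
  read -r x y <<< "$pair"
  if cidrs_overlap "$x" "$y"; then echo "OVERLAP: $x $y"; else echo "ok: $x $y"; fi
done
```

With the ranges above, all three pairs print `ok`.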

IP address information

High-availability address (VIP): 10.1.40.60

| Hostname | IP Address | OS Version | Role |
|---|---|---|---|
| k8s-master01 | 10.1.40.61 | CentOS 7.9 | master, etcd |
| k8s-master02 | 10.1.40.62 | CentOS 7.9 | master, etcd |
| k8s-master03 | 10.1.40.63 | CentOS 7.9 | master, etcd |
| k8s-node01 | 10.1.40.64 | CentOS 7.9 | worker |
| k8s-node02 | 10.1.40.65 | CentOS 7.9 | worker |

Server initialization

System configuration

Configure /etc/hosts on all nodes:

cat > /etc/hosts <<EOF

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

10.1.40.61 k8s-master01

10.1.40.62 k8s-master02

10.1.40.63 k8s-master03

10.1.40.64 k8s-node01

10.1.40.65 k8s-node02

EOF

Configure the YUM repositories (Aliyun mirrors) on all nodes:

cd /etc/yum.repos.d && mkdir bak && mv *.repo bak && cd ~/

curl -o /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

yum clean all && yum makecache

On all nodes, disable firewalld, SELinux, dnsmasq and swap, and configure NetworkManager:

# Stop and disable firewalld and dnsmasq

systemctl disable --now firewalld

systemctl disable --now dnsmasq

# Configure NetworkManager to leave the Calico interfaces (cali*, tunl*) unmanaged

cat > /etc/NetworkManager/conf.d/calico.conf << EOF

[keyfile]

unmanaged-devices=interface-name:cali*;interface-name:tunl*

EOF

systemctl restart NetworkManager

# Disable SELinux for the current boot

setenforce 0

# Disable SELinux permanently (takes effect after a reboot)

sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

# Turn off swap and comment out the swap mount entries in /etc/fstab

swapoff -a && sysctl -w vm.swappiness=0

sed -ri 's/.*swap.*/#&/' /etc/fstab

Configure resource limits on all nodes:

# Apply to the current shell session

ulimit -SHn 65535

# Persist the settings

sed -i '/^# End/i\* soft nofile 655350' /etc/security/limits.conf

sed -i '/^# End/i\* hard nofile 655350' /etc/security/limits.conf

sed -i '/^# End/i\* soft nproc 655350' /etc/security/limits.conf

sed -i '/^# End/i\* hard nproc 655350' /etc/security/limits.conf

sed -i '/^# End/i\* soft memlock unlimited' /etc/security/limits.conf

sed -i '/^# End/i\* hard memlock unlimited' /etc/security/limits.conf

Configure the kernel parameters required by the k8s cluster on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

net.ipv4.conf.all.route_localnet = 1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.ip_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system

Install the basic packages on all nodes:

yum install -y ntpdate \

jq \

psmisc \

wget \

vim \

net-tools \

telnet

Configure time synchronization on all nodes:

# Set the time zone

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

# Add a cron job (every 5 minutes) and also run ntpdate at boot

echo '*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com >/dev/null' >> /var/spool/cron/root

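echo '/usr/sbin/ntpdate time2.aliyun.com' >> /etc/rc.local

# On CentOS 7, rc.local only runs at boot if it is executable

chmod +x /etc/rc.d/rc.local

On k8s-master01, configure passwordless SSH login to the other nodes: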

# Generate an SSH key pair for passwordless login

ssh-keygen -t rsa

# Copy the public key to all nodes

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02;do

ssh-copy-id -i .ssh/id_rsa.pub root@$i;

done

Upgrade the system packages on all nodes (excluding the kernel), then reboot:

yum update -y --exclude=kernel* && reboot

Configure IPVS

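Install ipvsadm and related packages on all nodes. kube-proxy supports an ipvs proxy mode, which generally performs better than iptables mode when there are many Services (the default mode on Linux is still iptables, so ipvs has to be selected in kube-proxy's configuration). If IPVS is not available on the server, kube-proxy cannot use ipvs mode (historically it fell back to iptables mode).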

yum install -y ipvsadm ipset sysstat conntrack libseccomp
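Installing the packages and loading the modules only makes ipvs mode possible. kube-proxy still has to be told to use it. The sketch below prints a KubeProxyConfiguration that selects ipvs. The function name, the kubeconfig path and the `rr` scheduler are assumptions for illustration, not values taken from this guide's kube-proxy setup:

```bash
# Hypothetical helper: print a KubeProxyConfiguration that selects ipvs mode.
# $1 = Pod CIDR (clusterCIDR), $2 = path to kube-proxy's kubeconfig (assumed path).
kube_proxy_config() {
  local cluster_cidr=$1 kubeconfig=$2
  cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
clientConnection:
  kubeconfig: ${kubeconfig}
clusterCIDR: ${cluster_cidr}
mode: "ipvs"
ipvs:
  scheduler: "rr"
EOF
}

kube_proxy_config 192.16.0.0/16 /usr/local/kubernetes/cfg/kube-proxy.kubeconfig
```

Pass such a file to kube-proxy with its `--config` flag. Once kube-proxy is running, `ipvsadm -Ln` should list one virtual server per Service port.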

Configure the ipvs kernel modules on all nodes (as of kernel 4.19, nf_conntrack_ipv4 has been replaced by nf_conntrack):

cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

modprobe -- ip_vs

modprobe -- ip_vs_lc

modprobe -- ip_vs_wlc

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_lblc

modprobe -- ip_vs_lblcr

modprobe -- ip_vs_dh

modprobe -- ip_vs_sh

modprobe -- ip_vs_fo

modprobe -- ip_vs_nq

modprobe -- ip_vs_sed

modprobe -- ip_vs_ftp

modprobe -- nf_conntrack

modprobe -- ip_tables

modprobe -- ip_set

modprobe -- xt_set

modprobe -- ipt_set

modprobe -- ipt_rpfilter

modprobe -- ipt_REJECT

modprobe -- ipip

EOF

chmod 755 /etc/sysconfig/modules/ipvs.modules

systemctl enable --now systemd-modules-load.service

bash /etc/sysconfig/modules/ipvs.modules && lsmod |grep -e ip_vs -e nf_conntrack
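`lsmod | grep` shows which modules are loaded, but it does not point out the ones that are missing. The sketch below reads `lsmod` output and names every required module that is absent. The function name `missing_modules` is hypothetical. Modules built directly into the kernel never appear in `lsmod`, so treat a "missing" line as a prompt to investigate, not as proof of a problem:

```bash
# Sketch: list required kernel modules that are absent from `lsmod` output.
# Reads lsmod-formatted text on stdin; module names are passed as arguments.
# Returns 1 if anything is missing.
missing_modules() {
  local loaded m rc=0
  # Column 1 of lsmod is the module name; line 1 is the header.
  loaded=$(awk 'NR > 1 { print $1 }')
  for m in "$@"; do
    if ! grep -qxF "$m" <<< "$loaded"; then
      echo "missing: $m"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on a node, after running ipvs.modules:
# lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```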

Upgrade the kernel

CentOS 7 ships with a 3.10 kernel, which must be upgraded to 4.18 or later. This guide upgrades to 4.19.

Download the kernel packages on k8s-master01, then copy them to the other nodes:

# Download the kernel packages

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

# Copy them to the other nodes

for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02;do

scp kernel-ml-*.rpm root@$i:~/;

done

Install the kernel on all nodes:

cd /root && yum localinstall -y kernel-ml*

# On all nodes, make the new kernel the default boot entry

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

Reboot, then check that the running kernel is 4.19:

uname -r
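To script this check instead of reading `uname -r` by eye, here is a sketch based on GNU `sort -V` (version sort). The function name `kernel_at_least` is hypothetical, and the 4.18 threshold comes from the requirement stated above:

```bash
# Sketch: succeed if the running (or given) kernel version is at least $1.
# Relies on GNU coreutils `sort -V` for version ordering.
kernel_at_least() {
  local min=$1 current=${2:-$(uname -r)}
  # Strip the release suffix, e.g. "4.19.12-1.el7.elrepo.x86_64" -> "4.19.12".
  current=${current%%-*}
  # If min sorts first (or equal), current >= min.
  [ "$(printf '%s\n%s\n' "$min" "$current" | sort -V | head -n1)" = "$min" ]
}

if kernel_at_least 4.18; then echo "kernel OK: $(uname -r)"; else echo "kernel too old: $(uname -r)"; fi
```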

Install the base components

This part installs the components used by the cluster, such as Docker-ce and the Kubernetes components.

Install Containerd as the container runtime

Install Docker-ce 20.10 and Containerd on all nodes:

# Install dependencies

yum install -y yum-utils device-mapper-persistent-data lvm2

# Add the Docker yum repository (Aliyun mirror)

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

# Install the pinned Docker version

yum install -y docker-ce-20.10.* docker-ce-cli-20.10.* containerd

Configure the kernel modules required by Containerd on all nodes:

cat << EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

modprobe -- overlay

modprobe -- br_netfilter

Configure the kernel parameters required by Containerd on all nodes:

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

sysctl --system

Create the Containerd configuration file on all nodes:

# Create the configuration directory

mkdir -p /etc/containerd

# Generate the default configuration file

containerd config default | tee /etc/containerd/config.toml

# Use the systemd cgroup driver (SystemdCgroup = true)

sed -i "s#SystemdCgroup\ \=\ false#SystemdCgroup\ \=\ true#g" /etc/containerd/config.toml

cat /etc/containerd/config.toml | grep SystemdCgroup

# Pull the pause image from the Aliyun mirror instead of registry.k8s.io

sed -i "s#registry.k8s.io#registry.cn-hangzhou.aliyuncs.com/google_containers#g" /etc/containerd/config.toml

cat /etc/containerd/config.toml | grep sandbox_image

Start Containerd on all nodes and enable it at boot:

systemctl daemon-reload

systemctl enable --now containerd

Install and configure the crictl client on all nodes:

# Download crictl

wget https://github.com/kubernetes-sigs/cri-tools/releases/download/v1.24.2/crictl-v1.24.2-linux-amd64.tar.gz

# Install crictl

tar xf crictl-v*-linux-amd64.tar.gz -C /usr/bin/

# Generate the crictl configuration file

cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

# Test crictl

crictl info
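The two `sed` edits to config.toml silently do nothing if their pattern is not found, for example when the default configuration layout differs between containerd versions. The sketch below re-checks both settings. The function name is hypothetical, and it assumes the `SystemdCgroup` and `sandbox_image` keys as written by `containerd config default` in containerd 1.x:

```bash
# Sketch: verify the two containerd settings edited above.
# $1 = path to config.toml (default /etc/containerd/config.toml).
check_containerd_config() {
  local cfg=${1:-/etc/containerd/config.toml} rc=0
  # The runc cgroup driver must be systemd.
  if ! grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "$cfg"; then
    echo "SystemdCgroup is not true in $cfg"; rc=1
  fi
  # The pause image should no longer come from registry.k8s.io.
  if grep -Eq '^[[:space:]]*sandbox_image[[:space:]]*=.*registry\.k8s\.io' "$cfg"; then
    echo "sandbox_image still points at registry.k8s.io in $cfg"; rc=1
  fi
  return "$rc"
}

# Usage: check_containerd_config || echo "fix config.toml, then: systemctl restart containerd"
```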

Install HAProxy and Keepalived

High availability is provided by HAProxy + Keepalived, both running as daemons on every Master node.

Install HAProxy and Keepalived on all Master nodes:

yum install -y haproxy keepalived

Configure HAProxy on all Master nodes. The configuration is identical on every Master node; see the HAProxy documentation for details.

mv /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy_ori.cfg

cat > /etc/haproxy/haproxy.cfg <<EOF

global

log 127.0.0.1 local0 err

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

ulimit-n 16384

user haproxy

group haproxy

stats timeout 30s

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

timeout http-request 15s

timeout queue 1m

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-keep-alive 15s

timeout check 15s

maxconn 3000

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

listen stats

bind *:8006

mode http

stats enable

stats hide-version

stats uri /stats

stats refresh 30s

stats realm Haproxy\ Statistics

stats auth admin:admin

frontend k8s-master

bind 0.0.0.0:8443

bind 127.0.0.1:8443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend K8S-master

backend static

balance roundrobin

server static 127.0.0.1:4331 check

backend K8S-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.1.40.61:6443 check

server k8s-master02 10.1.40.62:6443 check

server k8s-master03 10.1.40.63:6443 check

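EOF

Configure Keepalived on all Master nodes. Pay attention to interface (the service NIC), priority (each node just needs a different value) and mcast_src_ip (the node's IP address). The commands below detect interface and mcast_src_ip automatically. See the Keepalived documentation for details.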

mv /etc/keepalived/keepalived.conf /etc/keepalived/keepalived_ori.conf

export INTERFACE=$(ip route show |grep default |cut -d ' ' -f5)

export IPADDR=$(ifconfig |grep -A1 $INTERFACE |grep inet |awk '{print $2}')

cat > /etc/keepalived/keepalived.conf <<EOF

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 2

weight -5

fall 3

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ${INTERFACE}

mcast_src_ip ${IPADDR}

virtual_router_id 60

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.1.40.60

}

track_script {

chk_apiserver

}

}

EOF

# On the other master nodes, adjust priority and state in the generated file:

# k8s-master02: set priority to 99 and state to BACKUP

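# k8s-master03: set priority to 98 and state to BACKUP

If more than one k8s cluster shares the same LAN, each cluster needs a unique virtual_router_id. A convenient choice is the last octet of the VIP, which is 60 here.

Create the Keepalived health-check script on all Master nodes. The script checks that the haproxy process is running and stops keepalived if it is not, so the VIP moves to another Master: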

cat > /etc/keepalived/check_apiserver.sh <<EOF

#!/bin/bash

err=0

for k in \$(seq 1 3)

do

check_code=\$(pgrep haproxy)

if [[ \$check_code == "" ]]; then

err=\$(expr \$err + 1)

sleep 1

continue

else

err=0

break

fi

done

if [[ \$err != "0" ]]; then

echo "systemctl stop keepalived"

/usr/bin/systemctl stop keepalived

exit 1

else

exit 0

fi

EOF

chmod +x /etc/keepalived/check_apiserver.sh

Start HAProxy and Keepalived on all Master nodes:

systemctl enable --now haproxy.service keepalived.service
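To see which Master currently owns the VIP, the sketch below parses `ip -o -4 addr show`. The function name is hypothetical. At any given time the VIP should be present on exactly one Master:

```bash
# Sketch: succeed if this node currently holds the given VIP.
# Reads `ip -o -4 addr show` output on stdin; $1 = VIP.
holds_vip() {
  local vip=$1
  # Field 4 is "ADDRESS/PREFIX", e.g. "10.1.40.60/32".
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}

# Usage on each master node:
# ip -o -4 addr show | holds_vip 10.1.40.60 && echo "$(hostname) holds the VIP"
```

HAProxy's own health endpoint, defined by the monitor-in frontend above, can also be probed through the VIP. For example, `curl http://10.1.40.60:33305/monitor` should return HTTP 200 while HAProxy is up.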

Deploy the Etcd cluster

Note: unless stated otherwise, the following steps are performed on k8s-master01.

Download the etcd binary package:

wget https://github.com/etcd-io/etcd/releases/download/v3.5.5/etcd-v3.5.5-linux-amd64.tar.gz

Extract the etcd binary package:

tar zxvf etcd-v3.5.5-linux-amd64.tar.gz

Install the etcd binaries:

mkdir -p /usr/local/etcd/{bin,cfg,ssl}

cp etcd-v3.5.5-linux-amd64/{etcd,etcdctl} /usr/local/etcd/bin/

Download and install the cfssl tools:

# Download the tools

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64

# Make them executable

chmod +x cfssljson_1.5.0_linux_amd64 cfssl_1.5.0_linux_amd64

# Move them onto the PATH and rename them

mv cfssl_1.5.0_linux_amd64 /usr/local/bin/cfssl

mv cfssljson_1.5.0_linux_amd64 /usr/local/bin/cfssljson

Create the etcd certificates:

mkdir -p ~/pki && cd ~/pki

# Create the CA signing configuration

cat > ca-config.json<<EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

# Create the CSR files

cat > etcd-ca-csr.json <<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

cat > etcd-csr.json <<EOF

{

"CN": "etcd",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "etcd",

"OU": "Etcd Security"

}

]

}

EOF

# Generate the etcd CA certificate and key

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare /usr/local/etcd/ssl/etcd-ca

# Generate the etcd certificate

cfssl gencert \

-ca=/usr/local/etcd/ssl/etcd-ca.pem \

-ca-key=/usr/local/etcd/ssl/etcd-ca-key.pem \

-config=ca-config.json \

-hostname=127.0.0.1,\

10.1.40.61,\

10.1.40.62,\

10.1.40.63 \

-profile=kubernetes \

etcd-csr.json | cfssljson -bare /usr/local/etcd/ssl/etcd

Note: -hostname must include the addresses of all etcd nodes. You can also add a few spare addresses to make it easier to scale out the etcd cluster later.
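To confirm that the generated etcd.pem actually carries every address passed via -hostname, here is a sketch that uses the `openssl` CLI. The function name is hypothetical:

```bash
# Sketch: succeed if certificate $1 lists IP $2 in its Subject Alternative Names.
# Requires the openssl CLI.
cert_has_ip() {
  local cert=$1 ip=$2
  # The SAN values are printed on the line after the
  # "X509v3 Subject Alternative Name:" heading, comma separated.
  openssl x509 -in "$cert" -noout -text \
    | grep -A1 'Subject Alternative Name' \
    | tail -n1 \
    | tr ',' '\n' \
    | sed 's/^[[:space:]]*//' \
    | grep -Fxq "IP Address:$ip"
}

# Usage on k8s-master01:
# for ip in 127.0.0.1 10.1.40.61 10.1.40.62 10.1.40.63; do
#   cert_has_ip /usr/local/etcd/ssl/etcd.pem "$ip" || echo "missing SAN: $ip"
# done
```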

Create the etcd configuration file:

cat > /usr/local/etcd/cfg/etcd.config.yml << EOF

name: 'k8s-master01'

data-dir: /var/lib/etcd

wal-dir: /var/lib/etcd/wal

snapshot-count: 5000

heartbeat-interval: 100

election-timeout: 1000

quota-backend-bytes: 0

listen-peer-urls: 'https://10.1.40.61:2380'

listen-client-urls: 'https://10.1.40.61:2379,http://127.0.0.1:2379'

max-snapshots: 3

max-wals: 5

cors:

initial-advertise-peer-urls: 'https://10.1.40.61:2380'

advertise-client-urls: 'https://10.1.40.61:2379'

discovery:

discovery-fallback: 'proxy'

discovery-proxy:

discovery-srv:

initial-cluster: 'k8s-master01=https://10.1.40.61:2380,k8s-master02=https://10.1.40.62:2380,k8s-master03=https://10.1.40.63:2380'

initial-cluster-token: 'etcd-k8s-cluster'

initial-cluster-state: 'new'

strict-reconfig-check: false

enable-v2: true

enable-pprof: true

proxy: 'off'

proxy-failure-wait: 5000

proxy-refresh-interval: 30000

proxy-dial-timeout: 1000

proxy-write-timeout: 5000

proxy-read-timeout: 0

client-transport-security:

cert-file: '/usr/local/etcd/ssl/etcd.pem'

key-file: '/usr/local/etcd/ssl/etcd-key.pem'

client-cert-auth: true

trusted-ca-file: '/usr/local/etcd/ssl/etcd-ca.pem'

auto-tls: true

peer-transport-security:

cert-file: '/usr/local/etcd/ssl/etcd.pem'

key-file: '/usr/local/etcd/ssl/etcd-key.pem'

peer-client-cert-auth: true

trusted-ca-file: '/usr/local/etcd/ssl/etcd-ca.pem'

auto-tls: true

debug: false

log-package-levels:

log-outputs: [default]

force-new-cluster: false

EOF

Create the etcd systemd service file (identical on all nodes):

cat > /usr/lib/systemd/system/etcd.service << EOF

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

ExecStart=/usr/local/etcd/bin/etcd --config-file=/usr/local/etcd/cfg/etcd.config.yml

Restart=on-failure

RestartSec=10

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Alias=etcd3.service

EOF

Copy the etcd files to the other etcd nodes:

for i in k8s-master02 k8s-master03;do

scp -r /usr/local/etcd root@$i:/usr/local/etcd;

scp /usr/lib/systemd/system/etcd.service root@$i:/usr/lib/systemd/system/etcd.service;

done

On the other nodes, edit the configuration file and change the following fields:

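- name: the node name; it must be unique in the cluster and match this node's entry in initial-cluster

- listen-peer-urls: change to the current node's IP address

- listen-client-urls: change to the current node's IP address

- initial-advertise-peer-urls: change to the current node's IP address

- advertise-client-urls: change to the current node's IP address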
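The edits in the list above can be scripted with GNU `sed`. The sketch below rewrites only the per-node keys and leaves `initial-cluster` untouched. The function name and argument order are hypothetical, and the IPs come from the host table:

```bash
# Sketch: adapt a copied etcd.config.yml for another member (GNU sed).
# $1 = config file, $2 = new member name, $3 = IP in the copied file, $4 = this node's IP.
# Dots in $3 act as regex wildcards, which is harmless on these lines.
adapt_etcd_config() {
  local cfg=$1 name=$2 old_ip=$3 new_ip=$4
  sed -i -E \
    -e "s/^name: .*/name: '${name}'/" \
    -e "/^(listen-peer-urls|listen-client-urls|initial-advertise-peer-urls|advertise-client-urls):/ s/${old_ip}/${new_ip}/g" \
    "$cfg"
}

# Usage on k8s-master02:
# adapt_etcd_config /usr/local/etcd/cfg/etcd.config.yml k8s-master02 10.1.40.61 10.1.40.62
```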

Start etcd on all nodes and enable it at boot:

systemctl enable --now etcd

Check the etcd cluster status:

export ETCDCTL_API=3

/usr/local/etcd/bin/etcdctl \

--cacert=/usr/local/etcd/ssl/etcd-ca.pem \

--cert=/usr/local/etcd/ssl/etcd.pem \

--key=/usr/local/etcd/ssl/etcd-key.pem \

--endpoints="https://10.1.40.61:2379,\

https://10.1.40.62:2379,\

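https://10.1.40.63:2379" endpoint status --write-out=table

Note: if an etcd node fails to start, clear its startup data and then start it again. See the article "etcd集群节点挂掉后恢复步骤" (steps to recover a failed etcd cluster node).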

Specifically, run this on the failed node:

rm -rf /var/lib/etcd/*
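After a member has been reinitialized, check that every endpoint reports healthy. Instead of the multi-line quoted `--endpoints` string used in the status check, the value can be built from a list of IPs. Here is a sketch; the helper name is hypothetical, and port 2379 comes from advertise-client-urls:

```bash
# Sketch: build an etcdctl --endpoints value from a list of etcd IPs.
etcd_endpoints() {
  local ip out=""
  for ip in "$@"; do
    # Prepend a comma for every entry except the first.
    out+="${out:+,}https://${ip}:2379"
  done
  echo "$out"
}

# Usage on k8s-master01:
# ETCDCTL_API=3 /usr/local/etcd/bin/etcdctl \
#   --cacert=/usr/local/etcd/ssl/etcd-ca.pem \
#   --cert=/usr/local/etcd/ssl/etcd.pem \
#   --key=/usr/local/etcd/ssl/etcd-key.pem \
#   --endpoints="$(etcd_endpoints 10.1.40.61 10.1.40.62 10.1.40.63)" endpoint health
```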

Deploy the k8s cluster

Deploy the Master components

Note: unless stated otherwise, the following steps are performed on k8s-master01.

Download the k8s binary package:

wget https://dl.k8s.io/v1.25.6/kubernetes-server-linux-amd64.tar.gz

Extract the kubernetes package:

tar zxvf kubernetes-server-linux-amd64.tar.gz

Install the kubernetes binaries:

mkdir -p /usr/local/kubernetes/{bin,cfg,ssl,manifests}

cp kubernetes/server/bin/kube{-apiserver,-controller-manager,-scheduler,-proxy,let} /usr/local/kubernetes/bin/

cp kubernetes/server/bin/kubectl /usr/local/bin/

Create the Master component certificates

Note: unless stated otherwise, the following steps are performed on k8s-master01.

Create the CA certificate:

cd ~/pki

# Create the CA signing configuration

cat > ca-config.json<<EOF

{

"signing": {

"default": {

"expiry": "876000h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "876000h"

}

}

}

}

EOF

# Create ca-csr.json

cat > ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

],

"ca": {

"expiry": "876000h"

}

}

EOF

# Generate the CA certificate and key

cfssl gencert -initca ca-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/ca

Create the APIServer certificate:

# Load balancer (CLB) internal address and port as environment variables, used directly by the following steps (here: the Keepalived VIP and HAProxy port 8443)

export CLB_ADDRESS="10.1.40.60"

export CLB_PORT="8443"

# Create apiserver-csr.json

cat > apiserver-csr.json <<EOF

{

"CN": "kube-apiserver",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "Kubernetes",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the apiserver certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-hostname=10.96.0.1,\

${CLB_ADDRESS},\

127.0.0.1,\

10.1.40.61,\

10.1.40.62,\

10.1.40.63,\

kubernetes,\

kubernetes.default,\

kubernetes.default.svc,\

kubernetes.default.svc.cluster,\

kubernetes.default.svc.cluster.local \

-profile=kubernetes \

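apiserver-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/apiserver

Generate the apiserver aggregation-layer (front-proxy) certificates. The apiserver uses them through its --requestheader-client-ca-file, --requestheader-allowed-names and --proxy-client-* flags: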

# Create front-proxy-ca-csr.json

cat > front-proxy-ca-csr.json <<EOF

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

# Create front-proxy-client-csr.json

cat > front-proxy-client-csr.json <<EOF

{

"CN": "front-proxy-client",

"key": {

"algo": "rsa",

"size": 2048

}

}

EOF

# Create the apiserver aggregation (front-proxy) CA

cfssl gencert -initca front-proxy-ca-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/front-proxy-ca

# Create the apiserver aggregation (front-proxy client) certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/front-proxy-ca.pem \

-ca-key=/usr/local/kubernetes/ssl/front-proxy-ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

front-proxy-client-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/front-proxy-client

Generate the controller-manager certificate:

# Create manager-csr.json

cat > manager-csr.json <<EOF

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the controller-manager certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

manager-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/controller-manager

# Create the controller-manager kubeconfig

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://${CLB_ADDRESS}:${CLB_PORT} \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config set-credentials system:kube-controller-manager \

--client-certificate=/usr/local/kubernetes/ssl/controller-manager.pem \

--client-key=/usr/local/kubernetes/ssl/controller-manager-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config set-context system:kube-controller-manager@kubernetes \

--cluster=kubernetes \

--user=system:kube-controller-manager \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig && \

kubectl config use-context system:kube-controller-manager@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig

Generate the scheduler certificate:

# Create scheduler-csr.json

cat > scheduler-csr.json <<EOF

{

"CN": "system:kube-scheduler",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the scheduler certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

scheduler-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/scheduler

# Create the scheduler kubeconfig

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://${CLB_ADDRESS}:${CLB_PORT} \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config set-credentials system:kube-scheduler \

--client-certificate=/usr/local/kubernetes/ssl/scheduler.pem \

--client-key=/usr/local/kubernetes/ssl/scheduler-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config set-context system:kube-scheduler@kubernetes \

--cluster=kubernetes \

--user=system:kube-scheduler \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig && \

kubectl config use-context system:kube-scheduler@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig

Generate the certificate for the cluster administrator (admin):

# Create admin-csr.json

cat > admin-csr.json <<EOF

{

"CN": "admin",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "Kubernetes-manual"

}

]

}

EOF

# Generate the admin certificate

cfssl gencert \

-ca=/usr/local/kubernetes/ssl/ca.pem \

-ca-key=/usr/local/kubernetes/ssl/ca-key.pem \

-config=ca-config.json \

-profile=kubernetes \

admin-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/admin

# Create the kubeconfig for the admin user

kubectl config set-cluster kubernetes \

--certificate-authority=/usr/local/kubernetes/ssl/ca.pem \

--embed-certs=true \

--server=https://${CLB_ADDRESS}:${CLB_PORT} \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config set-credentials kubernetes-admin \

--client-certificate=/usr/local/kubernetes/ssl/admin.pem \

--client-key=/usr/local/kubernetes/ssl/admin-key.pem \

--embed-certs=true \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config set-context kubernetes-admin@kubernetes \

--cluster=kubernetes \

--user=kubernetes-admin \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig && \

kubectl config use-context kubernetes-admin@kubernetes \

--kubeconfig=/usr/local/kubernetes/cfg/admin.kubeconfig

Generate the ServiceAccount key pair:

openssl genrsa -out /usr/local/kubernetes/ssl/sa.key 2048

openssl rsa -in /usr/local/kubernetes/ssl/sa.key -pubout -out /usr/local/kubernetes/ssl/sa.pub
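sa.key signs ServiceAccount tokens and sa.pub verifies them; the apiserver receives them later through --service-account-signing-key-file and --service-account-key-file. The two files therefore have to belong together, and the same check is useful for any certificate and its key. The sketch below compares the public keys that `openssl` derives from each file. The function names are hypothetical:

```bash
# Sketch: check that key material belongs together by comparing the
# public keys openssl derives from each file (PEM SubjectPublicKeyInfo).

# Public key derived from a private key file (PKCS#1 or PKCS#8 PEM).
pub_from_key() { openssl pkey -in "$1" -pubout; }

# Succeed if private key $1 matches public key file $2.
key_matches_pub() {
  local a b
  a=$(pub_from_key "$1") || return 1
  b=$(openssl pkey -pubin -in "$2" -pubout) || return 1
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Succeed if private key $1 matches certificate $2.
key_matches_cert() {
  local a b
  a=$(pub_from_key "$1") || return 1
  b=$(openssl x509 -in "$2" -noout -pubkey) || return 1
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Usage on k8s-master01:
# key_matches_pub  /usr/local/kubernetes/ssl/sa.key /usr/local/kubernetes/ssl/sa.pub && echo "sa key pair OK"
# key_matches_cert /usr/local/kubernetes/ssl/apiserver-key.pem /usr/local/kubernetes/ssl/apiserver.pem && echo "apiserver OK"
```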

Configure the Master components

Note: unless stated otherwise, the following steps are performed on k8s-master01.

Create the kube-apiserver service file:

cat > /usr/lib/systemd/system/kube-apiserver.service <<EOF

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-apiserver \\

--v=2 \\

--logtostderr=true \\

--allow-privileged=true \\

--bind-address=0.0.0.0 \\

--secure-port=6443 \\

--advertise-address=${CLB_ADDRESS} \\

--service-cluster-ip-range=10.96.0.0/16 \\

--service-node-port-range=30000-32767 \\

--etcd-servers=https://10.1.40.61:2379,https://10.1.40.62:2379,https://10.1.40.63:2379 \\

--etcd-cafile=/usr/local/etcd/ssl/etcd-ca.pem \\

--etcd-certfile=/usr/local/etcd/ssl/etcd.pem \\

--etcd-keyfile=/usr/local/etcd/ssl/etcd-key.pem \\

--client-ca-file=/usr/local/kubernetes/ssl/ca.pem \\

--tls-cert-file=/usr/local/kubernetes/ssl/apiserver.pem \\

--tls-private-key-file=/usr/local/kubernetes/ssl/apiserver-key.pem \\

--kubelet-client-certificate=/usr/local/kubernetes/ssl/apiserver.pem \\

--kubelet-client-key=/usr/local/kubernetes/ssl/apiserver-key.pem \\

--service-account-key-file=/usr/local/kubernetes/ssl/sa.pub \\

--service-account-signing-key-file=/usr/local/kubernetes/ssl/sa.key \\

--service-account-issuer=https://kubernetes.default.svc.cluster.local \\

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \\

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,NodeRestriction,ResourceQuota \\

--authorization-mode=Node,RBAC \\

--enable-bootstrap-token-auth=true \\

--requestheader-client-ca-file=/usr/local/kubernetes/ssl/front-proxy-ca.pem \\

--proxy-client-cert-file=/usr/local/kubernetes/ssl/front-proxy-client.pem \\

--proxy-client-key-file=/usr/local/kubernetes/ssl/front-proxy-client-key.pem \\

--requestheader-allowed-names=front-proxy-client \\

--requestheader-group-headers=X-Remote-Group \\

--requestheader-extra-headers-prefix=X-Remote-Extra- \\

--requestheader-username-headers=X-Remote-User \\

--enable-aggregator-routing=true \\

--feature-gates=EphemeralContainers=true

Restart=on-failure

RestartSec=10s

LimitNOFILE=65535

[Install]

WantedBy=multi-user.target

EOF

Create the kube-controller-manager service file:

cat >/usr/lib/systemd/system/kube-controller-manager.service <<EOF

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-controller-manager \\

--v=2 \\

--logtostderr=true \\

--bind-address=0.0.0.0 \\

--root-ca-file=/usr/local/kubernetes/ssl/ca.pem \\

--cluster-signing-cert-file=/usr/local/kubernetes/ssl/ca.pem \\

--cluster-signing-key-file=/usr/local/kubernetes/ssl/ca-key.pem \\

--service-account-private-key-file=/usr/local/kubernetes/ssl/sa.key \\

--authentication-kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig \\

--authorization-kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig \\

--kubeconfig=/usr/local/kubernetes/cfg/controller-manager.kubeconfig \\

--leader-elect=true \\

--use-service-account-credentials=true \\

--node-monitor-grace-period=40s \\

--node-monitor-period=5s \\

--pod-eviction-timeout=2m0s \\

--controllers=*,bootstrapsigner,tokencleaner \\

--allocate-node-cidrs=true \\

--cluster-cidr=192.16.0.0/16 \\

--requestheader-client-ca-file=/usr/local/kubernetes/ssl/front-proxy-ca.pem \\

--node-cidr-mask-size=24

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Create the kube-scheduler service file:

cat >/usr/lib/systemd/system/kube-scheduler.service <<EOF

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

ExecStart=/usr/local/kubernetes/bin/kube-scheduler \\

--v=2 \\

--logtostderr=true \\

--bind-address=0.0.0.0 \\

--leader-elect=true \\

--authentication-kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig \\

--authorization-kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig \\

--kubeconfig=/usr/local/kubernetes/cfg/scheduler.kubeconfig

Restart=always

RestartSec=10s

[Install]

WantedBy=multi-user.target

EOF

Copy the files to the other Master nodes:

for i in k8s-master02 k8s-master03;do

scp /usr/lib/systemd/system/kube-{apiserver,controller-manager,scheduler}.service root@$i:/usr/lib/systemd/system/;

scp -r /usr/local/kubernetes/ root@$i:/usr/local/kubernetes;

done

Start the following services on all Master nodes:

systemctl daemon-reload

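systemctl enable --now kube-apiserver kube-controller-manager kube-scheduler

Configure the kubectl client tool. Run all of the following steps only on nodes where kubectl is installed. (kubectl does not have to be inside the k8s cluster; it can be installed on any server that can reach the cluster.)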

# Create the kubectl configuration file

mkdir ~/.kube

cp /usr/local/kubernetes/cfg/admin.kubeconfig ~/.kube/config

# Enable kubectl command completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

kubectl completion bash > ~/.kube/completion.bash.inc

echo "source ~/.kube/completion.bash.inc" >> ~/.bash_profile

source $HOME/.bash_profile

Test kubectl:

# kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true"}

etcd-2 Healthy {"health":"true"}

etcd-1 Healthy {"health":"true"}

# kubectl cluster-info

Kubernetes master is running at https://10.1.40.60:8443

To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.

# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

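kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 11h

Note: 10.96.0.1 is the first usable IP address in the 10.96.0.0/16 range. It is assigned to the default kubernetes Service, which is why it appears in the apiserver certificate's -hostname list.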
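If you use a different Service range, the address that goes into the apiserver certificate changes with it. Here is a bash sketch that derives that address from the CIDR (IPv4 only; the function name is hypothetical):

```bash
# Sketch: print the first usable address of a Service CIDR (IPv4 only).
first_service_ip() {
  local ip=${1%/*} len=${1#*/} o1 o2 o3 o4
  IFS=. read -r o1 o2 o3 o4 <<< "$ip"
  local n=$(( (o1 << 24) | (o2 << 16) | (o3 << 8) | o4 ))
  local mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  # Network address plus one.
  n=$(( (n & mask) + 1 ))
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}

first_service_ip 10.96.0.0/16   # prints 10.96.0.1
```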

Deploy the Worker components

Configure TLS Bootstrapping

Note: unless stated otherwise, the following steps are performed on k8s-master01.

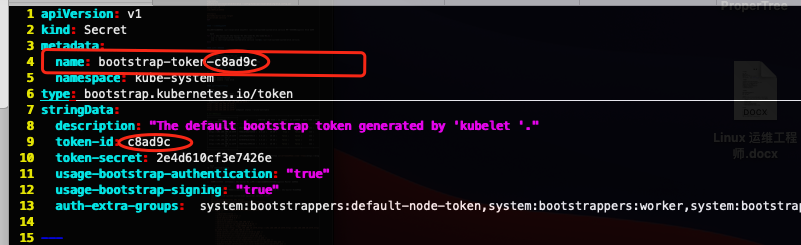

Create the bootstrap.secret.yaml file:

cat > bootstrap.secret.yaml <<EOF

apiVersion: v1

kind: Secret

metadata:

name: bootstrap-token-c8ad9c

namespace: kube-system

type: bootstrap.kubernetes.io/token

stringData:

description: "The default bootstrap token generated by 'kubelet '."

token-id: c8ad9c

token-secret: 2e4d610cf3e7426e

usage-bootstrap-authentication: "true"

usage-bootstrap-signing: "true"

auth-extra-groups: system:bootstrappers:default-node-token,system:bootstrappers:worker,system:bootstrappers:ingress

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: kubelet-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:node-bootstrapper

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-autoapprove-bootstrap

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:nodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:bootstrappers:default-node-token

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: node-autoapprove-certificate-rotation

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:certificates.k8s.io:certificatesigningrequests:selfnodeclient

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: Group

name: system:nodes

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

annotations:

rbac.authorization.kubernetes.io/autoupdate: "true"

labels:

kubernetes.io/bootstrapping: rbac-defaults

name: system:kube-apiserver-to-kubelet

rules:

- apiGroups:

- ""

resources:

- nodes/proxy

- nodes/stats

- nodes/log

- nodes/spec

- nodes/metrics

verbs:

- "*"

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: system:kube-apiserver

namespace: ""

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: system:kube-apiserver-to-kubelet

subjects:

- apiGroup: rbac.authorization.k8s.io

kind: User

name: kube-apiserver

EOF创建 bootstrap RBAC 策略

kubectl create -f bootstrap.secret.yaml

Deploy the kubelet component

Note: unless otherwise stated, the following steps are performed on k8s-master01.

Create the bootstrap-kubelet.kubeconfig file

kubectl config set-cluster kubernetes \
  --certificate-authority=/usr/local/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://${CLB_ADDRESS}:${CLB_PORT} \
  --kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \
kubectl config set-credentials tls-bootstrap-token-user \
  --token=c8ad9c.2e4d610cf3e7426e \
  --kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \
kubectl config set-context tls-bootstrap-token-user@kubernetes \
  --cluster=kubernetes \
  --user=tls-bootstrap-token-user \
  --kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig && \
kubectl config use-context tls-bootstrap-token-user@kubernetes \
  --kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig

Note: if you change token-id or token-secret in bootstrap.secret.yaml, keep every occurrence consistent and keep the lengths unchanged. The Secret name must be bootstrap-token-<token-id>. token-id must be 6 characters and token-secret 16 characters, using only lowercase letters and digits. The --token value in the command above (c8ad9c.2e4d610cf3e7426e) must then be updated to match, in the form <token-id>.<token-secret>.
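Instead of editing the token by hand, you can generate a fresh one. Below is a minimal sketch that builds a token in the documented bootstrap-token format: a 6-character token-id and a 16-character token-secret, both from [a-z0-9], joined by a dot. It also derives the matching Secret name. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: generate and validate a bootstrap token <token-id>.<token-secret>.
# token-id: 6 chars, token-secret: 16 chars, both [a-z0-9];
# the Secret that carries it must be named bootstrap-token-<token-id>.

# random_lower_alnum N -> N random characters from [a-z0-9]
random_lower_alnum() {
  LC_ALL=C tr -dc 'a-z0-9' < /dev/urandom | head -c "$1"
}

# is_valid_bootstrap_token TOKEN -> exit 0 if TOKEN has the expected format
is_valid_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

# bootstrap_secret_name TOKEN -> bootstrap-token-<token-id>
bootstrap_secret_name() {
  echo "bootstrap-token-${1%%.*}"
}

TOKEN_ID=$(random_lower_alnum 6)
TOKEN_SECRET=$(random_lower_alnum 16)
TOKEN="${TOKEN_ID}.${TOKEN_SECRET}"
echo "token:       ${TOKEN}"
echo "secret name: $(bootstrap_secret_name "$TOKEN")"
```

Copy TOKEN_ID and TOKEN_SECRET into bootstrap.secret.yaml (metadata.name, token-id, token-secret), and copy the full TOKEN into the --token flag above. If kubeadm happens to be installed, kubeadm token generate prints a token in the same format.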

Create the application directories and copy the binaries (the commands run on each Worker node over ssh)

for i in k8s-node01 k8s-node02; do
  ssh root@$i "mkdir -p /usr/local/kubernetes/{bin,cfg,ssl,manifests}"
  scp ~/kubernetes/server/bin/{kubelet,kube-proxy} root@$i:/usr/local/kubernetes/bin/
done

Copy the certificates and the bootstrap kubeconfig to the other master nodes and all Worker nodes

for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do
  scp /usr/local/kubernetes/ssl/{ca.pem,ca-key.pem,front-proxy-ca.pem} root@$i:/usr/local/kubernetes/ssl/
  scp /usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig root@$i:/usr/local/kubernetes/cfg/
done

Run the following on all Worker nodes to create the kubelet systemd unit file

cat > /usr/lib/systemd/system/kubelet.service <<EOF
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
# kubelet uses containerd as its runtime (see --container-runtime-endpoint in 10-kubelet.conf)
After=containerd.service
Requires=containerd.service

[Service]
ExecStart=/usr/local/kubernetes/bin/kubelet
Restart=always
StartLimitInterval=0
RestartSec=10

[Install]
WantedBy=multi-user.target
EOF

Run the following on all Worker nodes to create the kubelet service drop-in configuration file

mkdir -p /etc/systemd/system/kubelet.service.d
cat > /etc/systemd/system/kubelet.service.d/10-kubelet.conf <<EOF
[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/usr/local/kubernetes/cfg/bootstrap-kubelet.kubeconfig --kubeconfig=/usr/local/kubernetes/cfg/kubelet.kubeconfig"
Environment="KUBELET_SYSTEM_ARGS=--container-runtime-endpoint=unix:///run/containerd/containerd.sock"
Environment="KUBELET_CONFIG_ARGS=--config=/usr/local/kubernetes/cfg/kubelet-conf.yml"
Environment="KUBELET_EXTRA_ARGS=--node-labels=node.kubernetes.io/node="
ExecStart=
ExecStart=/usr/local/kubernetes/bin/kubelet \$KUBELET_KUBECONFIG_ARGS \$KUBELET_CONFIG_ARGS \$KUBELET_SYSTEM_ARGS \$KUBELET_EXTRA_ARGS
EOF

Note the --node-labels flag above. Do not change it to node-role.kubernetes.io/master='' on master nodes. Since Kubernetes v1.16 the kubelet refuses to start when --node-labels contains a kubernetes.io or k8s.io label outside its allowed set. node-role.kubernetes.io/* is not in that set, whereas node.kubernetes.io/* (used here) is. Keep the same flag on every node and assign roles with kubectl label after the nodes have registered.

Run the following on all Worker nodes to create the kubelet configuration file

cat > /usr/local/kubernetes/cfg/kubelet-conf.yml <<EOF
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
address: 0.0.0.0
port: 10250
readOnlyPort: 10255
authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /usr/local/kubernetes/ssl/ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
cgroupDriver: systemd
cgroupsPerQOS: true
clusterDNS:
- 10.96.0.10
clusterDomain: cluster.local
containerLogMaxFiles: 5
containerLogMaxSize: 10Mi
contentType: application/vnd.kubernetes.protobuf
cpuCFSQuota: true
cpuManagerPolicy: none
cpuManagerReconcilePeriod: 10s
enableControllerAttachDetach: true
enableDebuggingHandlers: true
enforceNodeAllocatable:
- pods
eventBurst: 10
eventRecordQPS: 5
evictionHard:
  imagefs.available: 15%
  memory.available: 100Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionPressureTransitionPeriod: 5m0s
failSwapOn: true
fileCheckFrequency: 20s
hairpinMode: promiscuous-bridge
healthzBindAddress: 127.0.0.1
healthzPort: 10248
httpCheckFrequency: 20s
imageGCHighThresholdPercent: 85
imageGCLowThresholdPercent: 80
imageMinimumGCAge: 2m0s
iptablesDropBit: 15
iptablesMasqueradeBit: 14
kubeAPIBurst: 10
kubeAPIQPS: 5
makeIPTablesUtilChains: true
maxOpenFiles: 1000000
maxPods: 110
nodeStatusUpdateFrequency: 10s
oomScoreAdj: -999
podPidsLimit: -1
registryBurst: 10
registryPullQPS: 5
resolvConf: /etc/resolv.conf
rotateCertificates: true
runtimeRequestTimeout: 2m0s
serializeImagePulls: true
staticPodPath: /usr/local/kubernetes/manifests
streamingConnectionIdleTimeout: 4h0m0s
syncFrequency: 1m0s
volumeStatsAggPeriod: 1m0s
EOF

Run the following on all Worker nodes to start kubelet

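systemctl daemon-reload && systemctl enable --now kubelet

Run the following on all Worker nodes to check the kubelet service status

systemctl status kubelet.service

Note: if the only error reported is "Unable to update cni config: no networks found in /etc/cni/net.d", kubelet is working normally. The message disappears once the CNI network plugin (Calico) is installed.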

On k8s-master01, run the following to check the cluster node status

kubectl get nodes -owide
NAME           STATUS     ROLES    AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
k8s-master01   NotReady   <none>   18m   v1.25.6   10.1.40.61    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-master02   NotReady   <none>   16m   v1.25.6   10.1.40.62    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-master03   NotReady   <none>   16m   v1.25.6   10.1.40.63    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-node01     NotReady   <none>   18m   v1.25.6   10.1.40.64    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-node02     NotReady   <none>   18m   v1.25.6   10.1.40.65    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16

The nodes stay NotReady until a CNI network plugin (Calico, installed later in this guide) is running.
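The ROLES column shows <none> because the kubelet cannot assign node-role.kubernetes.io labels to itself. Roles have to be added afterwards with kubectl label. Below is a sketch that derives a role from this guide's hostname convention. The convention (k8s-master* is a master, everything else a worker) and the helper names are assumptions. The loop only prints the commands; run them on k8s-master01.

```shell
#!/usr/bin/env bash
# Sketch: map hostnames to node roles (assumed naming convention) and print
# the kubectl commands that would apply node-role.kubernetes.io/<role> labels.

node_role_for() {
  case "$1" in
    k8s-master*) echo master ;;
    *)           echo worker ;;
  esac
}

# role_label_args NODE -> arguments for "kubectl label node"
role_label_args() {
  echo "$1 node-role.kubernetes.io/$(node_role_for "$1")= --overwrite"
}

for n in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02; do
  # Printed only; drop "echo" to actually apply the label.
  echo kubectl label node $(role_label_args "$n")
done
```

After the labels are applied, kubectl get nodes shows master or worker in the ROLES column instead of <none>.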

Deploy kube-proxy

Note: unless otherwise stated, the following steps are performed on k8s-master01.

Create the kube-proxy certificate and kubeconfig file

cd ~/pki
# Create the kube-proxy-csr.json file
cat > kube-proxy-csr.json <<EOF
{
  "CN": "system:kube-proxy",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "ST": "Beijing",
      "L": "Beijing",
      "O": "system:kube-proxy",
      "OU": "Kubernetes-manual"
    }
  ]
}
EOF
# Generate the kube-proxy certificate
cfssl gencert \
  -ca=/usr/local/kubernetes/ssl/ca.pem \
  -ca-key=/usr/local/kubernetes/ssl/ca-key.pem \
  -config=ca-config.json \
  -profile=kubernetes \
  kube-proxy-csr.json | cfssljson -bare /usr/local/kubernetes/ssl/kube-proxy
# Create the kubeconfig file for kube-proxy
kubectl config set-cluster kubernetes \
  --certificate-authority=/usr/local/kubernetes/ssl/ca.pem \
  --embed-certs=true \
  --server=https://${CLB_ADDRESS}:${CLB_PORT} \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config set-credentials kube-proxy \
  --client-certificate=/usr/local/kubernetes/ssl/kube-proxy.pem \
  --client-key=/usr/local/kubernetes/ssl/kube-proxy-key.pem \
  --embed-certs=true \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config set-context kube-proxy@kubernetes \
  --cluster=kubernetes \
  --user=kube-proxy \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig && \
kubectl config use-context kube-proxy@kubernetes \
  --kubeconfig=/usr/local/kubernetes/cfg/kube-proxy.kubeconfig

Create the kube-proxy systemd unit file

cat > /usr/lib/systemd/system/kube-proxy.service <<EOF
[Unit]
Description=Kubernetes Kube Proxy
Documentation=https://github.com/kubernetes/kubernetes
After=network.target

[Service]
ExecStart=/usr/local/kubernetes/bin/kube-proxy \\
  --config=/usr/local/kubernetes/cfg/kube-proxy.conf \\
  --v=2
Restart=always
RestartSec=10s

[Install]
WantedBy=multi-user.target
EOF

Create the kube-proxy configuration file. If you use a different Pod CIDR, change the clusterCIDR: 192.16.0.0/16 setting to match.

cat > /usr/local/kubernetes/cfg/kube-proxy.conf <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 0.0.0.0
clientConnection:
  acceptContentTypes: ""
  burst: 10
  contentType: application/vnd.kubernetes.protobuf
  kubeconfig: /usr/local/kubernetes/cfg/kube-proxy.kubeconfig
  qps: 5
clusterCIDR: 192.16.0.0/16
configSyncPeriod: 15m0s
conntrack:
  max: null
  maxPerCore: 32768
  min: 131072
  tcpCloseWaitTimeout: 1h0m0s
  tcpEstablishedTimeout: 24h0m0s
enableProfiling: false
healthzBindAddress: 0.0.0.0:10256
hostnameOverride: ""
iptables:
  masqueradeAll: false
  masqueradeBit: 14
  minSyncPeriod: 0s
  syncPeriod: 30s
ipvs:
  masqueradeAll: true
  minSyncPeriod: 5s
  scheduler: "rr"
  syncPeriod: 30s
kind: KubeProxyConfiguration
metricsBindAddress: 127.0.0.1:10249
mode: "ipvs"
nodePortAddresses: null
oomScoreAdj: -999
portRange: ""
udpIdleTimeout: 250ms
EOF

Copy the kube-proxy files to the other nodes

for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02; do
  scp /usr/local/kubernetes/cfg/kube-proxy.{conf,kubeconfig} root@$i:/usr/local/kubernetes/cfg/
  scp /usr/lib/systemd/system/kube-proxy.service root@$i:/usr/lib/systemd/system/kube-proxy.service
done

Start kube-proxy on all nodes


systemctl daemon-reload && systemctl enable --now kube-proxy.service
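kube-proxy.conf sets mode: "ipvs" with the rr scheduler, so the IPVS kernel modules need to be available on every node. Below is a small sketch that reports required modules missing from /proc/modules. The module list (ip_vs, ip_vs_rr, nf_conntrack) is an assumption matching this config on a 4.19 kernel. Modules compiled into the kernel never appear in /proc/modules, so treat any report as a hint to check, not a verdict.

```shell
#!/usr/bin/env bash
# Sketch: print IPVS-related modules that do not appear in /proc/modules.
# The required list is an assumption for mode "ipvs" + scheduler "rr".

REQUIRED_MODULES="ip_vs ip_vs_rr nf_conntrack"

# missing_modules [MODULES_FILE] -> one line per required module not listed
missing_modules() {
  local file=${1:-/proc/modules} m
  for m in $REQUIRED_MODULES; do
    # The first field of each /proc/modules line is the module name.
    awk -v m="$m" '$1 == m { found = 1 } END { exit !found }' "$file" || echo "$m"
  done
}

missing=$(missing_modules)
if [ -n "$missing" ]; then
  echo "not listed in /proc/modules: $missing (try modprobe, or check if built in)"
else
  echo "all IPVS modules loaded"
fi
```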

Once kube-proxy is running, every node should be able to reach port 443 on 10.96.0.1 (the kubernetes Service).
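Minimal CentOS installs often lack telnet and nc. One way to test this from each node is bash's built-in /dev/tcp redirection, sketched below. It assumes bash (not sh) and the coreutils timeout command; port_open is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: TCP reachability check via bash's /dev/tcp pseudo-device.

# port_open HOST PORT [SECONDS] -> exit 0 if a TCP connection succeeds in time
port_open() {
  timeout "${3:-3}" bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

if port_open 10.96.0.1 443; then
  echo "10.96.0.1:443 reachable"
else
  echo "10.96.0.1:443 NOT reachable"
fi
```

The same helper covers the kube-dns check in the cluster verification section, for example port_open 10.96.0.10 53.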

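Deploy the other k8s components

Note: unless otherwise stated, the following steps are performed on k8s-master01.

Install Calico

Download the Calico v3.25 manifest

curl https://docs.projectcalico.org/archive/v3.25/manifests/calico-etcd.yaml -O

For the Calico versions that support a given Kubernetes release, see the Kubernetes Requirements page in the Calico documentation.

Edit calico-etcd.yaml to add the etcd endpoints and certificates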

sed -i 's#etcd_endpoints: "http://<ETCD_IP>:<ETCD_PORT>"#etcd_endpoints: "https://10.1.40.61:2379,https://10.1.40.62:2379,https://10.1.40.63:2379"#g' calico-etcd.yaml
ETCD_CA=`cat /usr/local/etcd/ssl/etcd-ca.pem | base64 | tr -d '\n'`
ETCD_CERT=`cat /usr/local/etcd/ssl/etcd.pem | base64 | tr -d '\n'`
ETCD_KEY=`cat /usr/local/etcd/ssl/etcd-key.pem | base64 | tr -d '\n'`
sed -i "s@# etcd-key: null@etcd-key: ${ETCD_KEY}@g; s@# etcd-cert: null@etcd-cert: ${ETCD_CERT}@g; s@# etcd-ca: null@etcd-ca: ${ETCD_CA}@g" calico-etcd.yaml
sed -i 's#etcd_ca: ""#etcd_ca: "/calico-secrets/etcd-ca"#g; s#etcd_cert: ""#etcd_cert: "/calico-secrets/etcd-cert"#g; s#etcd_key: "" #etcd_key: "/calico-secrets/etcd-key" #g' calico-etcd.yaml

Change the Calico IPv4 pool CIDR to the Pod network, 192.16.0.0/16

sed -i "s@# - name: CALICO_IPV4POOL_CIDR@- name: CALICO_IPV4POOL_CIDR@g; s@#   value: \"192.168.0.0/16\"@  value: \"192.16.0.0/16\"@g" calico-etcd.yaml
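sed -i prints nothing when a pattern does not match, so a silently skipped substitution is easy to miss. Below is a rough sketch that checks calico-etcd.yaml after the edits above. It uses plain grep patterns rather than a YAML parser. The expected Pod CIDR (192.16.0.0/16) is this guide's value, and check_calico_manifest is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: detect leftover placeholders or still-commented settings in calico-etcd.yaml.

check_calico_manifest() {
  local f=$1 pod_cidr=$2 rc=0
  if grep -q '<ETCD_IP>' "$f"; then
    echo "etcd_endpoints still contains the <ETCD_IP> placeholder"; rc=1
  fi
  if grep -qE '^[[:space:]]*# etcd-(key|cert|ca): null' "$f"; then
    echo "etcd TLS Secret data is still commented out"; rc=1
  fi
  if ! grep -qE '^[[:space:]]*- name: CALICO_IPV4POOL_CIDR' "$f"; then
    echo "CALICO_IPV4POOL_CIDR is still commented out"; rc=1
  fi
  # Look for the exact value on a line that is not a comment.
  if ! grep -F "value: \"${pod_cidr}\"" "$f" | grep -qv '^[[:space:]]*#'; then
    echo "no uncommented value: \"${pod_cidr}\" line found"; rc=1
  fi
  return $rc
}

if check_calico_manifest calico-etcd.yaml 192.16.0.0/16; then
  echo "calico-etcd.yaml looks consistent"
else
  echo "fix the items above before applying the manifest"
fi
```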

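Point the image addresses at the internal image registry

sed -i 's#docker.io#registry-changsha.vonebaas.com/kubernetes#g' calico-etcd.yaml

Add node affinity so that Calico is not scheduled onto edge nodes (optional; skip this step if the cluster is not an edge cluster)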

...
  template:
    metadata:
      labels:
        k8s-app: calico-node
    spec:
      nodeSelector:
        kubernetes.io/os: linux
      hostNetwork: true
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: node-role.kubernetes.io/edge
                    operator: DoesNotExist
                  - key: node-role.kubernetes.io/agent
                    operator: DoesNotExist
      tolerations:
        # Make sure calico-node gets scheduled on all nodes.
        - effect: NoSchedule
          operator: Exists
        # Mark the pod as a critical add-on for rescheduling.
        - key: CriticalAddonsOnly
          operator: Exists
        - effect: NoExecute
          operator: Exists
...

On k8s-master01, run the following to install Calico

kubectl create -f calico-etcd.yaml

Check the status of the Calico Pods; the system Pods all run in the kube-system namespace

1

2

3

4

5

6

7

8

9kubectl get pods -n kube-system

# 输出如下

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-97769f7c7-42snz 1/1 Running 0 2m47s

calico-node-b8hqx 1/1 Running 0 2m47s

calico-node-m5x7n 1/1 Running 0 2m47s

calico-node-pz5qn 1/1 Running 0 2m47s

calico-node-vp6wm 1/1 Running 0 2m47s

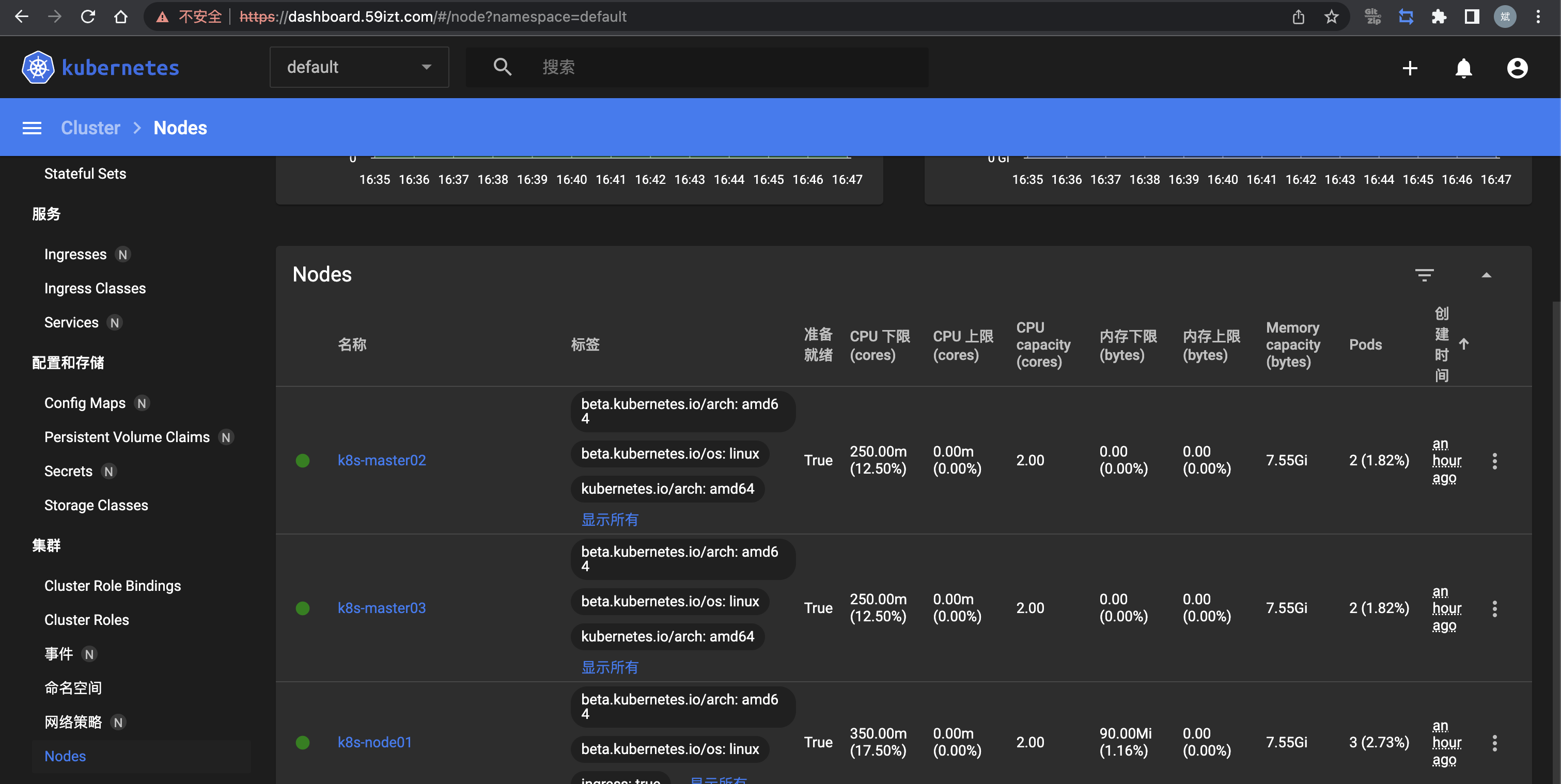

calico-node-wqgtn 1/1 Running 0 2m47s再次查看集群 node 状态

kubectl get nodes -owide
NAME           STATUS   ROLES    AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION                CONTAINER-RUNTIME
k8s-master01   Ready    <none>   76m   v1.25.6   10.1.40.61    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-master02   Ready    <none>   76m   v1.25.6   10.1.40.62    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-master03   Ready    <none>   76m   v1.25.6   10.1.40.63    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-node01     Ready    <none>   72m   v1.25.6   10.1.40.64    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16
k8s-node02     Ready    <none>   73m   v1.25.6   10.1.40.65    <none>        CentOS Linux 7 (Core)   4.19.12-1.el7.elrepo.x86_64   containerd://1.6.16

If a container is in an abnormal state, use kubectl describe or kubectl logs to inspect it.
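In larger clusters, reading the STATUS column by eye gets tedious. Below is a small sketch that filters kubectl get nodes output down to the nodes that are not plainly Ready; states such as Ready,SchedulingDisabled are reported too. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: print the names of nodes whose STATUS column is not exactly "Ready".
# Reads "kubectl get nodes" table output on stdin and skips the header line.

not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Usage: kubectl get nodes | not_ready_nodes. If you only need to block until every node is Ready, kubectl wait --for=condition=Ready node --all --timeout=300s does the same job without parsing.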

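Install CoreDNS

Install the latest CoreDNS using the scripts in the coredns/deployment repository. The -i argument of deploy.sh sets the IP address of the CoreDNS Service. It must match clusterDNS in kubelet-conf.yml (10.96.0.10).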
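To keep those two values from drifting apart, the DNS IP can be read from the kubelet configuration rather than typed twice. Below is a simple awk-based sketch, not a real YAML parser. It assumes the list item sits on the line after clusterDNS:, as in this guide, and does not handle the inline [a, b] form. kubelet_cluster_dns is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: print the first clusterDNS address from a KubeletConfiguration file.

kubelet_cluster_dns() {
  awk '
    /^clusterDNS:/ { in_list = 1; next }
    in_list && /^[[:space:]]*-/ { sub(/^[[:space:]]*-[[:space:]]*/, ""); print; exit }
    in_list { exit }
  ' "${1:-/usr/local/kubernetes/cfg/kubelet-conf.yml}"
}
```

Usage, from the deployment/kubernetes directory: DNS_IP=$(kubelet_cluster_dns) && ./deploy.sh -s -i "$DNS_IP" | kubectl apply -f -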

# Clone the deployment repository
git clone https://github.com/coredns/deployment.git
# Deploy directly
cd deployment/kubernetes && ./deploy.sh -s -i 10.96.0.10 | kubectl apply -f -
# Alternatively, only generate the coredns.yaml file
./deploy.sh -s -i 10.96.0.10 > coredns.yaml

Check the status

kubectl get pods -n kube-system -l k8s-app=kube-dns
NAME                       READY   STATUS    RESTARTS   AGE
coredns-54b8c69d54-cwq79   1/1     Running   0          86s

Install Metrics Server

Download the metrics-server manifest

wget https://github.com/kubernetes-sigs/metrics-server/releases/download/metrics-server-helm-chart-3.8.2/components.yaml

Edit components.yaml; the main changes are the following

spec:
  # 0. Add node affinity so that metrics-server runs only on non-edge nodes
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: node-role.kubernetes.io/edge
            operator: DoesNotExist
          - key: node-role.kubernetes.io/agent
            operator: DoesNotExist
  containers:
  - args:
    - --cert-dir=/tmp
    - --secure-port=4443    # 1. change the secure port to 4443
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --kubelet-use-node-status-port
    - --metric-resolution=15s
    - --kubelet-insecure-tls    # 2. add this flag and the ones below
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.pem # change to front-proxy-ca.crt for kubeadm
    - --requestheader-username-headers=X-Remote-User
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    image: registry.cn-hangzhou.aliyuncs.com/59izt/metrics-server-kubeedge:latest # 3. use your own image mirrored to Alibaba Cloud
    ...
    ports:
    - containerPort: 4443    # 4. change the exposed container port to 4443
      name: https
      protocol: TCP
    ...
    volumeMounts:
    - mountPath: /tmp
      name: tmp-dir
    - mountPath: /etc/kubernetes/pki    # 5. mount the certificate volume into the container
      name: ca-ssl
  nodeSelector:
    kubernetes.io/os: linux
    kubernetes.io/hostname: k8s-master01    # 6. pin metrics-server to the k8s-master01 node
  ...
  volumes:
  - emptyDir: {}
    name: tmp-dir
  - name: ca-ssl    # 7. back the volume with the host certificate directory
    hostPath:
      path: /usr/local/kubernetes/ssl

Install Metrics Server with kubectl

kubectl create -f components.yaml

Check the Pod status

kubectl get pods -n kube-system -l k8s-app=metrics-server
NAME                              READY   STATUS    RESTARTS   AGE
metrics-server-69c977b6ff-6ncw4   1/1     Running   0          4m7s

View the cluster resource metrics

kubectl top pods -n kube-system
NAME                                      CPU(cores)   MEMORY(bytes)
calico-kube-controllers-97769f7c7-kv9d9   2m           12Mi
calico-node-ff66c                         19m          45Mi
calico-node-hf46z                         20m          45Mi
calico-node-x6nzb                         22m          42Mi
calico-node-xff8p                         19m          39Mi
calico-node-z9wdl                         22m          42Mi
coredns-7bf4bd64bd-fn8wc                  4m           13Mi
metrics-server-69c977b6ff-6ncw4           2m           16Mi

Install Dashboard

Official Dashboard GitHub repository: https://github.com/kubernetes/dashboard. Find the latest installation manifest there and run the following command

kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

Check the Dashboard Pod status

kubectl get pods -n kubernetes-dashboard

Change the Dashboard Service from type: ClusterIP to type: NodePort (skip this step if it is already NodePort)

kubectl patch svc kubernetes-dashboard -n kubernetes-dashboard \
  -p '{"spec":{"type":"NodePort","ports":[{"port":443,"targetPort":8443,"nodePort":30010}]}}'

(Optional) The Dashboard uses a self-signed certificate, so the browser may refuse to open it. For Google Chrome, add the following flags to its launch command to work around this

--test-type --ignore-certificate-errors

Access the Dashboard using the IP of any host running kube-proxy, or the VIP, together with the NodePort. Check the port with

kubectl get svc -n kubernetes-dashboard kubernetes-dashboard

Create an admin user and its RBAC binding

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard
EOF

Get a token for logging in to the Dashboard

kubectl -n kubernetes-dashboard create token admin-user
# The command prints a token similar to the following
eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXY1N253Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIwMzAzMjQzYy00MDQwLTRhNTgtOGE0Ny04NDllZTliYTc5YzEiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZXJuZXRlcy1kYXNoYm9hcmQ6YWRtaW4tdXNlciJ9.Z2JrQlitASVwWbc-s6deLRFVk5DWD3P_vjUFXsqVSY10pbjFLG4njoZwh8p3tLxnX_VBsr7_6bwxhWSYChp9hwxznemD5x5HLtjb16kI9Z7yFWLtohzkTwuFbqmQaMoget_nYcQBUC5fDmBHRfFvNKePh_vSSb2h_aYXa8GV5AcfPQpY7r461itme1EXHQJqv-SN-zUnguDguCTjD80pFZ_CmnSE1z9QdMHPB8hoB4V68gtswR1VLa6mSYdgPwCHauuOobojALSaMc3RH7MmFUumAgguhqAkX3Omqd3rJbYOMRuMjhANqd08piDC3aIabINX6gP5-Tuuw2svnV6NYQ

Open the Dashboard, choose Token as the sign-in method, paste the token obtained above and click Sign in. The result looks like this

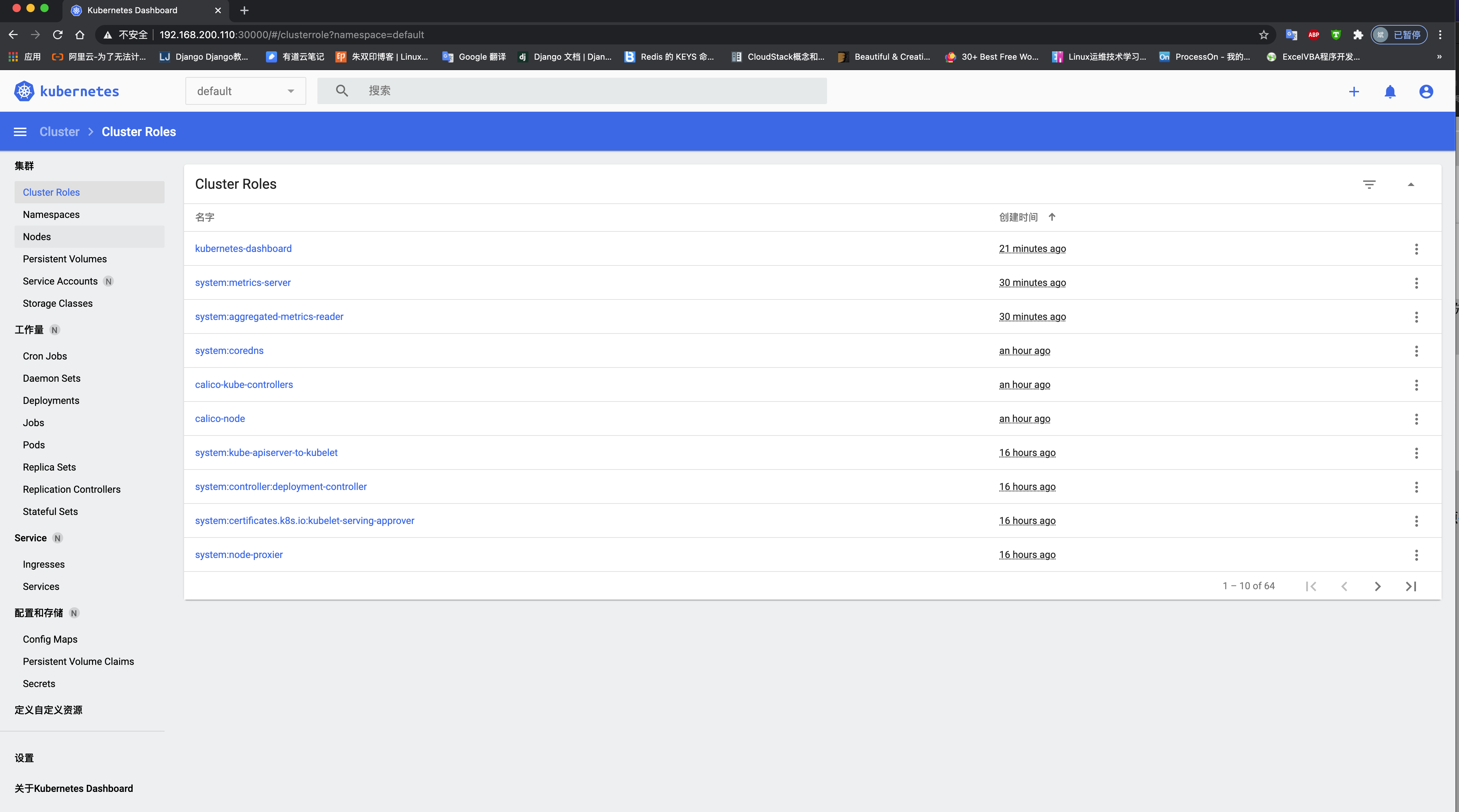
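The token is a JWT, and when you run into an unexpected logout it can help to look at its claims, for example exp (expiry, in Unix seconds). Below is a minimal sketch that decodes the middle (payload) segment. It does not verify the signature. jwt_payload is a hypothetical helper, and it assumes GNU base64.

```shell
#!/usr/bin/env bash
# Sketch: print the decoded JSON payload of a JWT.
# JWT segments are base64url without padding: map "-_" back to "+/" and re-pad.

jwt_payload() {
  local seg
  seg=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  case $(( ${#seg} % 4 )) in
    2) seg="${seg}==" ;;
    3) seg="${seg}=" ;;
  esac
  printf '%s' "$seg" | base64 -d
}
```

Usage: jwt_payload "$(kubectl -n kubernetes-dashboard create token admin-user)". kubectl create token also accepts --duration (for example --duration=24h) to request a longer-lived token. The API server may cap the duration according to its --service-account-max-token-expiration setting.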

Install Ingress-Nginx

Install Helm

# Download the Helm binary package
wget https://get.helm.sh/helm-v3.10.2-linux-amd64.tar.gz
# Install helm
tar zxvf helm-v3.10.2-linux-amd64.tar.gz
mv linux-amd64/helm /usr/local/bin/
# Enable bash completion for the helm command
helm completion bash > /etc/bash_completion.d/helm

Add the ingress-nginx Helm repository

helm repo add ingress-nginx https://kubernetes.github.io/ingress-nginx

Update the ingress-nginx repository

helm repo update ingress-nginx

List all ingress-nginx versions available in the repository

helm search repo ingress-nginx --versions

Following the Supported Versions table in the ingress-nginx README, download a chart version that supports this Kubernetes release

helm pull ingress-nginx/ingress-nginx --version 4.4.2

Unpack the downloaded chart and edit part of its configuration

tar xf ingress-nginx-4.4.2.tgz
cd ingress-nginx
vim values.yaml

The settings to change are:

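- The image addresses of the controller and admissionWebhooks: mirror the public images to the company's internal registry and point these fields at it;

- Set hostNetwork to true;

- Set dnsPolicy to ClusterFirstWithHostNet;

- Add ingress: "true" to nodeSelector so the controller runs only on the labeled nodes;

- Change the resource kind to kind: DaemonSet;

- Change the Service type from type: LoadBalancer to type: ClusterIP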
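As an alternative to editing values.yaml in place, the same changes can live in a small override file passed with helm install -f. That keeps them visible when you later upgrade the chart. Below is a sketch that assumes the ingress-nginx chart's controller.kind, controller.hostNetwork, controller.dnsPolicy, controller.nodeSelector and controller.service.type keys. The image overrides are left out because they depend on your internal registry. write_ingress_overrides is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: write the controller overrides listed above to a separate values file.

write_ingress_overrides() {
  cat > "${1:-ingress-overrides.yaml}" <<'EOF'
controller:
  kind: DaemonSet
  hostNetwork: true
  dnsPolicy: ClusterFirstWithHostNet
  nodeSelector:
    kubernetes.io/os: linux
    ingress: "true"
  service:
    type: ClusterIP
EOF
}

write_ingress_overrides ingress-overrides.yaml
```

The install step would then become helm install ingress-nginx -n ingress-nginx . -f ingress-overrides.yaml. Values given with -f take precedence over the chart's own values.yaml.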

Deploy ingress-nginx, first labeling the nodes that should run the controller

# Label the nodes
kubectl label nodes k8s-node01 ingress=true
kubectl label nodes k8s-node02 ingress=true
# Create the ingress-nginx namespace
kubectl create ns ingress-nginx
# Install ingress-nginx
helm install ingress-nginx -n ingress-nginx .
# The output is as follows
NAME: ingress-nginx
LAST DEPLOYED: Mon Feb 13 16:25:50 2023
NAMESPACE: ingress-nginx
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
The ingress-nginx controller has been installed.
Get the application URL by running these commands:
  export POD_NAME=$(kubectl --namespace ingress-nginx get pods -o jsonpath="{.items[0].metadata.name}" -l "app=ingress-nginx,component=controller,release=ingress-nginx")
  kubectl --namespace ingress-nginx port-forward $POD_NAME 8080:80
  echo "Visit http://127.0.0.1:8080 to access your application."

An example Ingress that makes use of the controller:
  apiVersion: networking.k8s.io/v1
  kind: Ingress
  metadata:
    name: example
    namespace: foo
  spec:
    ingressClassName: nginx
    rules:
      - host: www.example.com
        http:
          paths:
            - pathType: Prefix
              backend:
                service:
                  name: exampleService
                  port:
                    number: 80
              path: /
    # This section is only required if TLS is to be enabled for the Ingress
    tls:
      - hosts:
        - www.example.com
        secretName: example-tls

If TLS is enabled for the Ingress, a Secret containing the certificate and key must also be provided:

  apiVersion: v1
  kind: Secret
  metadata:
    name: example-tls
    namespace: foo
  data:
    tls.crt: <base64 encoded cert>
    tls.key: <base64 encoded key>
  type: kubernetes.io/tls

Check the ingress-nginx installation status

kubectl get pods -n ingress-nginx -owide
NAME                             READY   STATUS    RESTARTS   AGE     IP           NODE         NOMINATED NODE   READINESS GATES
ingress-nginx-controller-5gxth   1/1     Running   0          2m43s   10.1.40.65   k8s-node02   <none>           <none>
ingress-nginx-controller-zcq55   1/1     Running   0          2m43s   10.1.40.64   k8s-node01   <none>           <none>
# kubectl get svc -n ingress-nginx
NAME                                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
ingress-nginx-controller             ClusterIP   10.96.143.177   <none>        80/TCP,443/TCP   3m1s
ingress-nginx-controller-admission   ClusterIP   10.96.66.234    <none>        443/TCP          3m1s

Note: at this point k8s-node01 and k8s-node02 should be listening on ports 80 and 443.
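To confirm this on k8s-node01 and k8s-node02, you can scan the listening sockets reported by ss. Below is a sketch that assumes the usual ss -ltn layout, where column 4 is Local Address:Port and IPv6 entries look like [::]:443. listening_on is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: check whether a TCP port appears among the local listening sockets.

# listening_on PORT < "ss -ltn" output -> exit 0 if PORT is listed as a local port
listening_on() {
  awk -v p="$1" 'NR > 1 { n = split($4, a, ":"); if (a[n] == p) found = 1 } END { exit !found }'
}

for port in 80 443; do
  if ss -ltn 2>/dev/null | listening_on "$port"; then
    echo "port $port: listening"
  else
    echo "port $port: not listening"
  fi
done
```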

Expose the kubernetes-dashboard service through ingress-nginx by creating an Ingress resource

cat <<EOF | kubectl apply -f -
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  annotations:
    # Enable use-regex so that path supports regular-expression matching
    nginx.ingress.kubernetes.io/use-regex: "true"
    nginx.ingress.kubernetes.io/rewrite-target: /
    # Defaults to true: when TLS is enabled, HTTP requests get a 308 redirect to HTTPS
    nginx.ingress.kubernetes.io/ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    nginx.ingress.kubernetes.io/proxy-body-size: 20m
spec:
  ingressClassName: nginx
  rules:
  - host: dashboard.59izt.com
    http:
      paths:
      - backend:
          service:
            name: kubernetes-dashboard
            port:
              number: 443
        path: /
        pathType: ImplementationSpecific
EOF

Configure DNS so that dashboard.59izt.com resolves to the public IP address of the CLB, then open the Dashboard. Choose Token as the sign-in method, paste the token obtained above and sign in.

Verify the cluster

Install a test Pod

Run the following command to create a test Pod

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: dig-test
  namespace: default
spec:
  containers:
  - name: dig
    image: docker.io/azukiapp/dig
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
  restartPolicy: Always
EOF

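Verify DNS resolution

A working cluster must satisfy the following conditions:

Pods must be able to resolve Services

# kubectl exec dig-test -- nslookup kubernetes

If this returns error: unable to upgrade connection: Unauthorized, check that the authentication: section of kubelet-conf.yml is indented correctly.

Pods must be able to resolve Services in other namespaces

# kubectl exec dig-test -- nslookup kube-dns.kube-system

Every node must be able to reach port 443 of the kubernetes Service and port 53 of the kube-dns Service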

# The kubernetes Service address is 10.96.0.1
$ telnet 10.96.0.1 443
# The kube-dns Service address is 10.96.0.10
$ telnet 10.96.0.10 53

Pods must be able to communicate with each other:

- Pods in the same namespace can communicate

- Pods in different namespaces can communicate

- Pods on different hosts can communicate
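Testing the Pod network requires targets that actually use it. Pods with hostNetwork: true (such as calico-node or the ingress-nginx controller here) report the node IP as their Pod IP. Below is a sketch that keeps only Pods whose IP falls inside the Pod CIDR (192.16.0.0/16 in this guide). It reads kubectl get pods -A -owide output and locates the IP by pattern, since the RESTARTS column can contain extra words such as "1 (5m ago)". All helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list "namespace/name ip" for Pods whose IP lies inside a given CIDR.

ip_to_int() {
  local IFS=.
  local a b c d
  read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# ip_in_cidr IP CIDR -> exit 0 if IP is inside CIDR
ip_in_cidr() {
  local net=${2%/*} bits=${2#*/}
  local mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  (( ($(ip_to_int "$1") & mask) == ($(ip_to_int "$net") & mask) ))
}

# pod_network_ips CIDR < "kubectl get pods -A -owide" output
pod_network_ips() {
  local ns name rest ip
  while read -r ns name rest; do
    # First dotted quad in the remaining columns is the Pod IP (header has none).
    ip=$(grep -oE '([0-9]{1,3}\.){3}[0-9]{1,3}' <<< "$rest" | head -n1)
    if [ -n "$ip" ] && ip_in_cidr "$ip" "$1"; then
      echo "$ns/$name $ip"
    fi
  done
}
```

Usage: kubectl get pods -A -owide | pod_network_ips 192.16.0.0/16, then ping one of the listed IPs that lives on another node, for example kubectl exec dig-test -- ping -c 3 <pod-ip>.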

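# List the current Pods
# kubectl get pods -n kube-system -owide
# Exec into a Pod's container and ping the IP of a Pod on a different node
# kubectl exec -n kube-system calico-node-5bcd6 -- ping 10.1.40.62
PING 10.1.40.62 (10.1.40.62) 56(84) bytes of data.
64 bytes from 10.1.40.62: icmp_seq=1 ttl=64 time=0.385 ms
64 bytes from 10.1.40.62: icmp_seq=2 ttl=64 time=0.231 ms
# Cross-namespace communication: from the dig-test Pod in the default namespace, ping a Pod IP in another namespace
# kubectl exec -n default dig-test -- ping 10.1.40.65
PING 10.1.40.65 (10.1.40.65): 56 data bytes
64 bytes from 10.1.40.65: seq=0 ttl=64 time=0.190 ms
64 bytes from 10.1.40.65: seq=1 ttl=64 time=0.136 ms

Note: calico-node and the ingress-nginx controller run with hostNetwork: true, so their Pod IPs are node addresses (10.1.40.x), as are the ping targets above. These commands therefore confirm Pod-to-node reachability. To exercise the Pod network itself, ping Pod IPs from the 192.16.0.0/16 range shown in the IP column of kubectl get pods -owide.

Create an nginx Deployment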

# Create a Deployment with 3 replicas
kubectl create deployment nginx --image=nginx --replicas=3
# Expose the nginx Deployment as a NodePort Service
kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort
# Check the Deployment status
# kubectl get deployments.apps
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
nginx   3/3     3            3           11m
# Check the Pod and Service status
# kubectl get pods,svc -l app=nginx
NAME                         READY   STATUS    RESTARTS   AGE
pod/nginx-6799fc88d8-26c22   1/1     Running   0          12m
pod/nginx-6799fc88d8-m7sbb   1/1     Running   0          12m
pod/nginx-6799fc88d8-t87xg   1/1     Running   0          12m

NAME            TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
service/nginx   NodePort   192.162.175.90   <none>        80:30714/TCP   118s
# Test access through the NodePort
# curl http://10.1.40.100:30714 -I
HTTP/1.1 200 OK
Server: nginx/1.21.5
Date: Tue, 30 Aug 2022 09:09:07 GMT
Content-Type: text/html
Content-Length: 615
Last-Modified: Tue, 28 Dec 2021 15:28:38 GMT
Connection: keep-alive
ETag: "61cb2d26-267"
Accept-Ranges: bytes
# Clean up the test Pod, Deployment and Service
kubectl delete deploy nginx
kubectl delete pod dig-test
kubectl delete svc nginx