
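Background

Backing up a Kubernetes cluster is a hard problem. You can back up the cluster through etcd, but it is difficult to restore a single Namespace from such a backup.

Velero makes it easy to back up and restore Kubernetes cluster data and to copy the current cluster's data to other clusters. For example, you can clone applications and namespaces between two clusters to create a temporary development environment.

Velero Overview

What Is Velero

Velero is an open-source, cloud-native disaster recovery and migration tool written in Go. It can safely back up, restore and migrate Kubernetes cluster resources and persistent volumes.

Velero works with standard Kubernetes clusters on both private and public clouds. Besides disaster recovery, it can also migrate resources, moving containerized applications from one cluster to another.

With Velero you can back up and restore a cluster and reduce the impact of a cluster disaster. The basic principle is simple: Velero backs up cluster data to object storage, and on restore it pulls the data back from object storage. The official documentation lists the supported object stores; for on-premises storage you can use MinIO.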

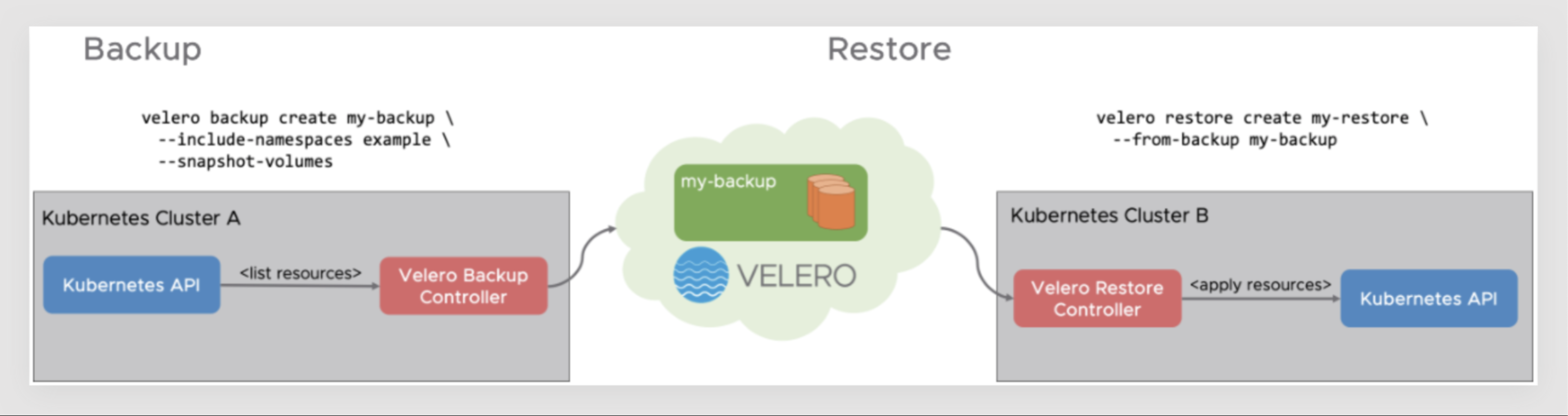

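Velero Backup Flow

A backup proceeds as follows:

- The local Velero client sends a backup command.

- A Backup object is created in the Kubernetes cluster.

- The BackupController watches for Backup objects and starts the backup.

- The BackupController queries the API server for the relevant data.

- The BackupController uploads the collected data to the remote object storage.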
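The flow above can be observed from the command line. The following is a minimal sketch, assuming `velero` and `kubectl` are configured for the same cluster; `backup_and_show_phase` is a hypothetical helper, and the `VELERO`/`KUBECTL` variables exist only so the commands can be swapped for `echo` in a dry run.

```shell
#!/usr/bin/env bash
# Sketch: submit a backup, then read back the Backup object that the
# BackupController processed. The helper name is hypothetical.
# VELERO / KUBECTL can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"
KUBECTL="${KUBECTL:-kubectl}"

backup_and_show_phase() {
  local name="$1" ns="$2"
  # Client sends the request; a Backup object appears in the velero namespace.
  # --wait blocks until the controller has finished the backup.
  "$VELERO" backup create "$name" --include-namespaces "$ns" --wait || return
  # The controller records the outcome in .status.phase (e.g. Completed).
  "$KUBECTL" get backups.velero.io "$name" -n velero -o jsonpath='{.status.phase}'
}
```

Usage: `backup_and_show_phase vonebfs-backup vonebfs`.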

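Velero Features

Velero currently offers the following features:

- Backup and restore of Kubernetes cluster data

- Copying resources from the current Kubernetes cluster to other Kubernetes clusters

- Copying a production environment to development and test environments

Velero Components

Velero consists of two parts, a server and a client:

- Server: runs in your Kubernetes cluster

- Client: a command-line tool that runs locally, on a machine where kubectl and the cluster's kubeconfig are already configured

Supported Backup Storage

- AWS S3 and S3-compatible storage, such as MinIO

- Azure Blob Storage

- Google Cloud Storage

- Aliyun OSS

Use Cases

- Disaster recovery: back up and restore a Kubernetes cluster

- Migration: copy cluster resources to other clusters (replicating cluster configuration between development, test and production to simplify environment setup)

Comparison with etcd

An etcd backup captures every resource in the cluster at once, whereas Velero works at the level of individual Kubernetes objects. Besides backing up the whole cluster, Velero can back up or restore selectively by resource type, namespace, label and so on.

Note: objects created while a backup is running are not included in that backup.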
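For example, the following minimal sketch shows two object-level backups that an etcd snapshot cannot express directly. The helper functions are hypothetical, and the backup names, namespace and the label `app=nginx` in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: selective backups by resource type and by label.
# VELERO can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"

# Only Deployments and ConfigMaps from a single namespace.
backup_by_type() {
  "$VELERO" backup create "$1" --include-namespaces "$2" \
    --include-resources deployments.apps,configmaps
}

# Every object carrying the given label, across all namespaces.
backup_by_label() {
  "$VELERO" backup create "$1" --selector "$2"
}
```

Usage: `backup_by_type web-config web` or `backup_by_label nginx-only app=nginx`.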

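How Backups Work

Velero creates a number of CRDs and their controllers in the cluster; backup, restore and similar operations are essentially operations on these CRDs.

Object Storage as the Single Source of Truth

The data in object storage is the single source of truth. The controllers in the cluster check the remote object storage: when they find a backup there, they create the corresponding CRD in the cluster, and when a CRD in the cluster has no associated data in the remote storage, they delete that CRD.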
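The reconciliation rule can be modelled in a few lines of shell. This is a simplified illustration written for this article, not Velero's actual sync controller: `reconcile_backups` is hypothetical, takes two newline-separated lists of backup names, and prints which Backup objects would be created or deleted in the cluster.

```shell
#!/usr/bin/env bash
# Simplified model (not Velero's code): object storage is the source of truth.
# $1 = backup names found in object storage, $2 = Backup objects in the cluster.
reconcile_backups() {
  local tmp
  tmp="$(mktemp -d)" || return 1
  # Normalise both inputs: drop blank lines, sort, de-duplicate.
  printf '%s\n' "$1" | sed '/^$/d' | LC_ALL=C sort -u > "$tmp/storage"
  printf '%s\n' "$2" | sed '/^$/d' | LC_ALL=C sort -u > "$tmp/cluster"
  # Present in storage only: the controller creates the Backup object.
  LC_ALL=C comm -23 "$tmp/storage" "$tmp/cluster" | sed 's/^/create /'
  # Present in the cluster only: the controller deletes the Backup object.
  LC_ALL=C comm -13 "$tmp/storage" "$tmp/cluster" | sed 's/^/delete /'
  rm -rf "$tmp"
}
```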

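Supported Storage Backends

Velero defines two CRDs for storage backends: BackupStorageLocation and VolumeSnapshotLocation.

BackupStorageLocation

A BackupStorageLocation defines where the Kubernetes cluster's resource data is stored, i.e. the cluster object data, not the data inside PVCs. The main supported backends are S3-compatible stores such as MinIO and Aliyun OSS.

MinIO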

```yaml
apiVersion: velero.io/v1
kind: BackupStorageLocation
metadata:
  name: default
  namespace: velero
spec:
  # Provider: aws, gcp or azure (S3-compatible stores such as MinIO use aws)
  provider: aws
  # Main object storage settings
  objectStorage:
    # Bucket name
    bucket: myBucket
    # Prefix (directory) inside the bucket
    prefix: backup
  # Provider-specific settings; they differ from provider to provider
  config:
    # Bucket region
    region: us-west-2
    # Profile to use from the S3 credentials file
    profile: "default"
    # Set this to "true" when using MinIO (the default is false).
    # S3 supports two bucket URL styles:
    # 1. Path-style URL: http://s3endpoint/BUCKET
    # 2. Virtual-hosted-style URL: http://BUCKET.s3endpoint (the bucket name goes into the Host header)
    # Note: Aliyun OSS only supports virtual-hosted style; setting this to "true" makes OSS return 403
    s3ForcePathStyle: "true"
    # S3 endpoint address, e.g. http://minio:9000
    s3Url: http://minio:9000
```

Aliyun OSS

```yaml
apiVersion: velero.io/v1
kind: BackupStorageLocation
metadata:
  labels:
    component: velero
  name: default
  namespace: velero
spec:
  config:
    region: oss-cn-beijing
    s3Url: http://oss-cn-beijing.aliyuncs.com
    s3ForcePathStyle: "false"
  objectStorage:
    bucket: build-jenkins
    prefix: ""
  provider: aws
```
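The difference between the two URL styles selected by `s3ForcePathStyle` can be shown with a small helper. `s3_object_url` is hypothetical and only builds strings; the object key in the usage example is illustrative.

```shell
#!/usr/bin/env bash
# Sketch: build the URL of an object for either S3 addressing style.
# Usage: s3_object_url ENDPOINT BUCKET KEY FORCE_PATH_STYLE(true|false)
s3_object_url() {
  local endpoint="${1%/}" bucket="$2" key="$3" force_path="$4"
  local scheme="${endpoint%%://*}" host="${endpoint#*://}"
  if [ "$force_path" = "true" ]; then
    # Path style: the bucket is the first path segment (MinIO's default).
    printf '%s://%s/%s/%s\n' "$scheme" "$host" "$bucket" "$key"
  else
    # Virtual-hosted style: the bucket is prepended to the host name (Aliyun OSS).
    printf '%s://%s.%s/%s\n' "$scheme" "$bucket" "$host" "$key"
  fi
}
```

Usage: `s3_object_url http://oss-cn-beijing.aliyuncs.com build-jenkins backups/demo.tar.gz false` yields a URL whose host is `build-jenkins.oss-cn-beijing.aliyuncs.com`, which is why OSS rejects path-style requests.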

VolumeSnapshotLocation

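A VolumeSnapshotLocation is used to take snapshots of PVs and needs a plugin from the cloud provider. Aliyun provides such a plugin, which relies on a storage mechanism such as CSI. Alternatively, you can use the dedicated backup tool Restic to back up PV data to Aliyun OSS (this requires custom options at install time).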


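Restic is a data backup tool written in Go that encrypts local data and transfers it to a designated repository. Supported repositories include local storage, SFTP, AWS S3, MinIO, OpenStack Swift, Backblaze B2, Azure Blob Storage, Google Cloud Storage and REST Server.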
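A sketch of an install that enables file-system (Restic-based) volume backup instead of cloud snapshots. It assumes Velero v1.10, where the Restic integration was renamed and the flags are `--use-node-agent` and `--default-volumes-to-fs-backup` (older releases used `--use-restic` and `--default-volumes-to-restic`). The plugin tag `v1.6.0` is an assumption about the version matching Velero v1.10, so check the plugin's compatibility table before use; `install_with_fs_backup` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: install Velero with file-system backup of pod volumes (Velero v1.10 flags).
# VELERO can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"

install_with_fs_backup() {
  local s3_url="$1"
  "$VELERO" install \
    --provider aws \
    --plugins velero/velero-plugin-for-aws:v1.6.0 \
    --bucket velero \
    --secret-file "$HOME/.credentials-velero" \
    --use-volume-snapshots=false \
    --use-node-agent \
    --default-volumes-to-fs-backup \
    --backup-location-config "region=minio,s3ForcePathStyle=true,s3Url=${s3_url}"
}
```

Usage: `install_with_fs_backup http://minio.velero.svc:9000`. The node agent runs as a DaemonSet and copies volume data into the same bucket, so no CSI or cloud snapshot support is required.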

Hands-on: Backing Up to MinIO with Velero

Installing MinIO Storage

Create a MinIO manifest file with the following content:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: velero
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      component: minio
  template:
    metadata:
      labels:
        component: minio
    spec:
      volumes:
      - name: storage
        emptyDir: {}
      - name: config
        emptyDir: {}
      containers:
      - name: minio
        image: minio/minio:latest
        imagePullPolicy: IfNotPresent
        args:
        - server
        - /storage
        - --console-address
        - ":9001"
        - --config-dir=/config
        env:
        - name: MINIO_ACCESS_KEY
          value: "minio"
        - name: MINIO_SECRET_KEY
          value: "minio123"
        ports:
        - containerPort: 9000
        - containerPort: 9001
        volumeMounts:
        - name: storage
          mountPath: "/storage"
        - name: config
          mountPath: "/config"
---
apiVersion: v1
kind: Service
metadata:
  namespace: velero
  name: minio
  labels:
    component: minio
spec:
  type: NodePort
  ports:
  - port: 9000
    targetPort: 9000
    protocol: TCP
    name: api-port
    nodePort: 32010
  - port: 9001
    targetPort: 9001
    protocol: TCP
    name: console-port
    nodePort: 32011
  selector:
    component: minio
---
apiVersion: batch/v1
kind: Job
metadata:
  namespace: velero
  name: minio-setup
  labels:
    component: minio
spec:
  template:
    metadata:
      name: minio-setup
    spec:
      restartPolicy: OnFailure
      volumes:
      - name: config
        emptyDir: {}
      containers:
      - name: mc
        image: minio/mc:latest
        imagePullPolicy: IfNotPresent
        command:
        - /bin/sh
        - -c
        - "mc --config-dir=/config config host add velero http://minio:9000 minio minio123 && mc --config-dir=/config mb -p velero/velero"
        volumeMounts:
        - name: config
          mountPath: "/config"
```

From the manifest above we can see that MinIO is installed with:

- MINIO_ACCESS_KEY: minio

- MINIO_SECRET_KEY: minio123

- Service address: http://minio:9000 inside the cluster, and http://${KUBE_APISERVER_VIP}:32010 from outside

Finally, a Job runs `mc mb` to create the bucket `velero` (addressed in mc as `velero/velero`, i.e. alias/bucket). Note that both MinIO volumes are emptyDir, so the stored backups are lost if the MinIO pod is deleted or rescheduled; this manifest is only suitable for testing.

Install MinIO

```shell
# kubectl apply -f 00-minio-deployment.yaml
namespace/velero created
deployment.apps/minio created
service/minio created
job.batch/minio-setup created
```

Check whether the MinIO services are ready:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20# kubectl get all -n velero

NAME READY STATUS RESTARTS AGE

pod/minio-5b84955bdd-vmptr 1/1 Running 0 2m18s

pod/minio-setup-nk8tf 0/1 Completed 3 2m18s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio ClusterIP 10.96.138.136 <none> 9000/TCP 2m18s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/minio 1/1 1 1 2m18s

NAME DESIRED CURRENT READY AGE

replicaset.apps/minio-5b84955bdd 1 1 1 2m18s

NAME COMPLETIONS DURATION AGE

job.batch/minio-setup 1/1 112s 2m18s

# kubectl logs -f -n velero minio-setup-nk8tf

Added `velero` successfully.

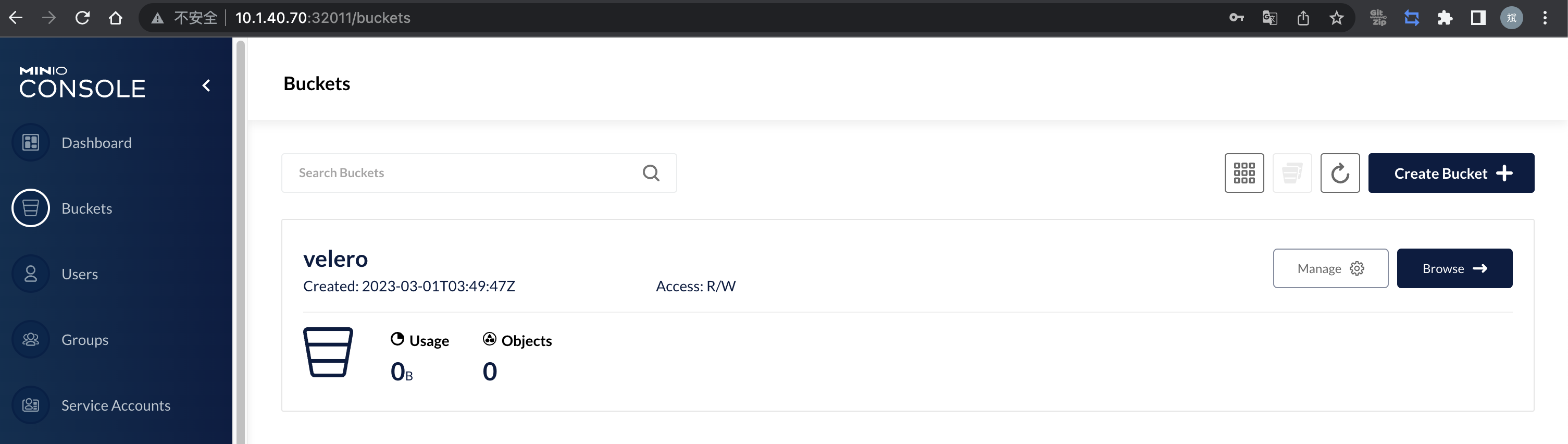

Bucket created successfully `velero/velero`.待服务都已经启动完毕,可以登录 minio 查看

velero/velero的 bucket 是否创建成功。
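Instead of checking by eye, these readiness checks can be scripted. A minimal sketch, assuming the manifest above (Job `minio-setup`, NodePort 32010) and MinIO's `/minio/health/live` liveness endpoint; `wait_for_minio` is a hypothetical helper and the node IP is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: wait for the bucket-creation Job, then probe MinIO through the NodePort.
# KUBECTL / CURL can be overridden (e.g. with "echo") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"
CURL="${CURL:-curl}"

wait_for_minio() {
  local vip="$1" node_port="${2:-32010}"
  # Block until the Job running "mc mb" has completed (or time out).
  "$KUBECTL" wait --for=condition=complete job/minio-setup -n velero --timeout=300s || return 1
  # Liveness probe; --fail turns an HTTP error status into a non-zero exit code.
  "$CURL" --fail --silent --show-error "http://${vip}:${node_port}/minio/health/live"
}
```

Usage: `wait_for_minio 10.1.40.70 && echo "MinIO is ready"`.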

Installing the Velero Client

Download Velero

```shell
wget https://github.com/vmware-tanzu/velero/releases/download/v1.10.1/velero-v1.10.1-linux-amd64.tar.gz
```

Install Velero

```shell
tar xf velero-v1.10.1-linux-amd64.tar.gz
mv velero-v1.10.1-linux-amd64/velero /usr/local/bin/
```

Enable Velero shell completion

```shell
velero completion bash > /etc/bash_completion.d/velero
source /etc/bash_completion.d/velero
```
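If you install the client on several machines, the download URL can be derived from the version number. A minimal sketch that follows the release naming in the `wget` command above; `velero_release_url` and `install_velero_client` are hypothetical helpers, and the install step assumes linux/amd64 and write access to /usr/local/bin.

```shell
#!/usr/bin/env bash
# Sketch: derive the release tarball URL and install the client binary.
velero_release_url() {
  local version="$1" os="${2:-linux}" arch="${3:-amd64}"
  printf 'https://github.com/vmware-tanzu/velero/releases/download/%s/velero-%s-%s-%s.tar.gz\n' \
    "$version" "$version" "$os" "$arch"
}

# Download, unpack and install for linux/amd64, then print the client version.
install_velero_client() {
  local version="$1"
  local dir="velero-${version}-linux-amd64"
  wget -q "$(velero_release_url "$version")" \
    && tar xf "${dir}.tar.gz" \
    && install -m 0755 "${dir}/velero" /usr/local/bin/velero \
    && velero version --client-only
}
```

Usage: `install_velero_client v1.10.1`.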

Installing the Velero Server

Create a credentials file that Velero uses to log in to MinIO:

```shell
cat > ~/.credentials-velero <<EOF
[default]
aws_access_key_id = minio
aws_secret_access_key = minio123
EOF
```

Run the following command to install Velero into the Kubernetes cluster:

```shell
velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.0.0 \
  --bucket velero \
  --secret-file ~/.credentials-velero \
  --use-volume-snapshots=false \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://minio.velero.svc:9000

# Output:
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource
CustomResourceDefinition/backuprepositories.velero.io: attempting to create resource client
CustomResourceDefinition/backuprepositories.velero.io: created
CustomResourceDefinition/backups.velero.io: attempting to create resource
CustomResourceDefinition/backups.velero.io: attempting to create resource client
CustomResourceDefinition/backups.velero.io: created
CustomResourceDefinition/backupstoragelocations.velero.io: attempting to create resource
CustomResourceDefinition/backupstoragelocations.velero.io: attempting to create resource client
CustomResourceDefinition/backupstoragelocations.velero.io: created
CustomResourceDefinition/deletebackuprequests.velero.io: attempting to create resource
CustomResourceDefinition/deletebackuprequests.velero.io: attempting to create resource client
CustomResourceDefinition/deletebackuprequests.velero.io: created
CustomResourceDefinition/downloadrequests.velero.io: attempting to create resource
CustomResourceDefinition/downloadrequests.velero.io: attempting to create resource client
CustomResourceDefinition/downloadrequests.velero.io: created
CustomResourceDefinition/podvolumebackups.velero.io: attempting to create resource
CustomResourceDefinition/podvolumebackups.velero.io: attempting to create resource client
CustomResourceDefinition/podvolumebackups.velero.io: created
CustomResourceDefinition/podvolumerestores.velero.io: attempting to create resource
CustomResourceDefinition/podvolumerestores.velero.io: attempting to create resource client
CustomResourceDefinition/podvolumerestores.velero.io: created
CustomResourceDefinition/restores.velero.io: attempting to create resource
CustomResourceDefinition/restores.velero.io: attempting to create resource client
CustomResourceDefinition/restores.velero.io: created
CustomResourceDefinition/schedules.velero.io: attempting to create resource
CustomResourceDefinition/schedules.velero.io: attempting to create resource client
CustomResourceDefinition/schedules.velero.io: created
CustomResourceDefinition/serverstatusrequests.velero.io: attempting to create resource
CustomResourceDefinition/serverstatusrequests.velero.io: attempting to create resource client
CustomResourceDefinition/serverstatusrequests.velero.io: created
CustomResourceDefinition/volumesnapshotlocations.velero.io: attempting to create resource
CustomResourceDefinition/volumesnapshotlocations.velero.io: attempting to create resource client
CustomResourceDefinition/volumesnapshotlocations.velero.io: created
Waiting for resources to be ready in cluster...
Namespace/velero: attempting to create resource
Namespace/velero: attempting to create resource client
Namespace/velero: already exists, proceeding
Namespace/velero: created
ClusterRoleBinding/velero: attempting to create resource
ClusterRoleBinding/velero: attempting to create resource client
ClusterRoleBinding/velero: created
ServiceAccount/velero: attempting to create resource
ServiceAccount/velero: attempting to create resource client
ServiceAccount/velero: created
Secret/cloud-credentials: attempting to create resource
Secret/cloud-credentials: attempting to create resource client
Secret/cloud-credentials: created
BackupStorageLocation/default: attempting to create resource
BackupStorageLocation/default: attempting to create resource client
BackupStorageLocation/default: created
Deployment/velero: attempting to create resource
Deployment/velero: attempting to create resource client
Deployment/velero: created
Velero is installed! ⛵ Use 'kubectl logs deployment/velero -n velero' to view the status.
```

The install above worked in this environment, but the velero-plugin-for-aws compatibility table pairs Velero v1.10.x with plugin v1.6.x, so a matching plugin version is the safer choice than v1.0.0.

Check the Velero API group:

```shell
# kubectl api-versions | grep velero
velero.io/v1
```

Check the Velero installation:

```shell
# kubectl get pod -n velero -l component=velero
NAME READY STATUS RESTARTS AGE
velero-5fcbc7b464-njn92 1/1 Running 0 3m6s
```

At this point Velero is fully deployed.
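Before creating backups it is worth confirming that the server can actually reach MinIO. `velero backup-location get` shows this interactively; the sketch below does the same in a script by polling the `status.phase` field of the BackupStorageLocation until it reports `Available`. `wait_bsl_available` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: poll a BackupStorageLocation until its phase is "Available".
# KUBECTL can be overridden (e.g. with a stub function) for testing.
KUBECTL="${KUBECTL:-kubectl}"

wait_bsl_available() {
  local name="${1:-default}" tries="${2:-30}" phase=""
  for _ in $(seq "$tries"); do
    phase="$("$KUBECTL" get backupstoragelocations.velero.io "$name" -n velero -o jsonpath='{.status.phase}')"
    [ "$phase" = "Available" ] && return 0
    sleep 2
  done
  echo "BackupStorageLocation $name is ${phase:-unknown}" >&2
  return 1
}
```

Usage: `wait_bsl_available default 30`; an `Unavailable` phase usually points to a wrong `s3Url`, bucket name or credentials.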

Velero Commands

Common options for creating a backup:

```shell
$ velero create backup NAME [flags]

# Exclude namespaces
--exclude-namespaces stringArray namespaces to exclude from the backup

# Exclude resource types
--exclude-resources stringArray resources to exclude from the backup, formatted as resource.group, such as storageclasses.storage.k8s.io

# Include cluster-scoped resources
--include-cluster-resources optionalBool[=true] include cluster-scoped resources in the backup

# Include namespaces
--include-namespaces stringArray namespaces to include in the backup (use '*' for all namespaces) (default *)

# Include resource types
--include-resources stringArray resources to include in the backup, formatted as resource.group, such as storageclasses.storage.k8s.io (use '*' for all resources)

# Labels to put on the Backup object itself
--labels mapStringString labels to apply to the backup

# Output format (prints the object instead of submitting it)
-o, --output string Output display format. For create commands, display the object but do not send it to the server. Valid formats are 'table', 'json', and 'yaml'. 'table' is not valid for the install command.

# Only back up resources matching the label selector
-l, --selector labelSelector only back up resources matching this label selector (default <none>)

# Take snapshots of PVs
--snapshot-volumes optionalBool[=true] take snapshots of PersistentVolumes as part of the backup

# Backup storage location to use
--storage-location string location in which to store the backup

# How long to keep the backup before it is deleted
--ttl duration how long before the backup can be garbage collected (default 720h0m0s)

# Volume snapshot locations, i.e. which cloud provider's driver to use
--volume-snapshot-locations strings list of locations (at most one per provider) where volume snapshots should be stored
```

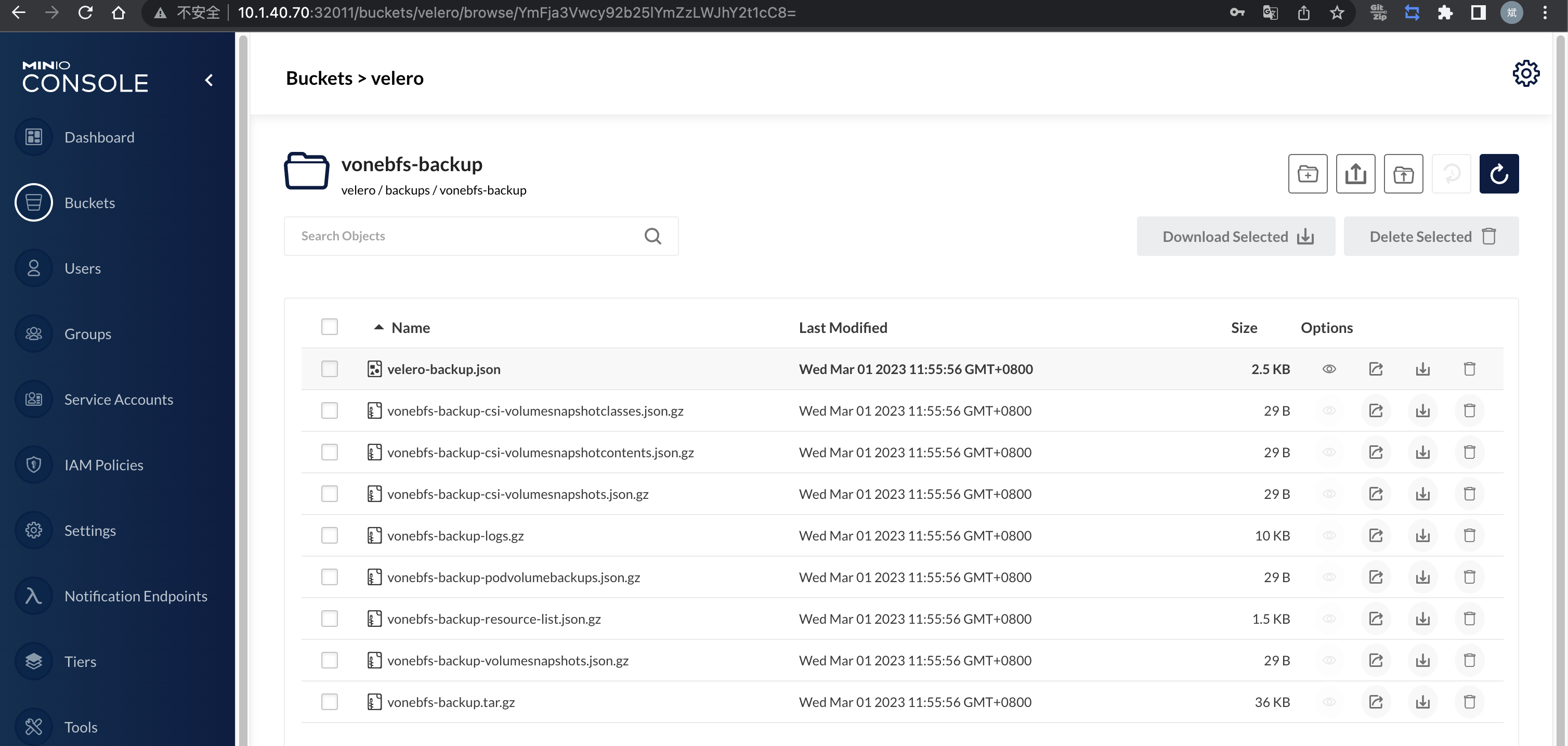
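Several of these flags are often combined. A minimal sketch that previews the resulting Backup object with `-o yaml`, which prints the object without submitting it; drop `-o yaml` to actually run the backup. `preview_backup`, the backup name and the namespace are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: preview a backup that skips Secrets and Events and expires after 7 days.
# VELERO can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"

preview_backup() {
  local name="$1" ns="$2"
  "$VELERO" backup create "$name" \
    --include-namespaces "$ns" \
    --exclude-resources secrets,events \
    --ttl 168h0m0s \
    -o yaml
}
```

Excluding Secrets keeps credentials out of the bucket, at the cost of having to recreate them before a restore.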

Running a Backup

Back up the vonebfs namespace of the current cluster:

```shell
# velero backup create vonebfs-backup --include-namespaces vonebfs
Backup request "vonebfs-backup" submitted successfully.
Run `velero backup describe vonebfs-backup` or `velero backup logs vonebfs-backup` for more details.
```

View the backup details:

```shell
# velero backup describe vonebfs-backup
Name:         vonebfs-backup
Namespace:    velero
Labels:       velero.io/storage-location=default
Annotations:  velero.io/source-cluster-k8s-gitversion=v1.20.11
              velero.io/source-cluster-k8s-major-version=1
              velero.io/source-cluster-k8s-minor-version=20

Phase:  Completed

Errors:    0
Warnings:  0

Namespaces:
  Included:  vonebfs
  Excluded:  <none>

Resources:
  Included:        *
  Excluded:        <none>
  Cluster-scoped:  auto

Label selector:  <none>

Storage Location:  default

Velero-Native Snapshot PVs:  auto

TTL:  720h0m0s

CSISnapshotTimeout:  10m0s

Hooks:  <none>

Backup Format Version:  1.1.0

Started:    2023-03-01 11:23:23 +0800 CST
Completed:  2023-03-01 11:23:52 +0800 CST

Expiration:  2023-03-31 11:23:23 +0800 CST

Total items to be backed up:  159
Items backed up:              159

Velero-Native Snapshots: <none included>
```
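For regular disaster-recovery backups, the one-off backup can be turned into a schedule. A minimal sketch using `velero schedule create`, which takes a cron expression; `schedule_daily_backup`, the schedule name, the 02:00 time and the 7-day TTL are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: create a daily scheduled backup of one namespace.
# VELERO can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"

schedule_daily_backup() {
  local ns="$1" cron="${2:-0 2 * * *}"
  "$VELERO" schedule create "${ns}-daily" \
    --schedule "$cron" \
    --include-namespaces "$ns" \
    --ttl 168h0m0s
}
```

Usage: `schedule_daily_backup vonebfs`; each run produces a Backup named after the schedule plus a timestamp, which `velero backup get` lists.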

Running a Restore

Install Velero on the test cluster:

```shell
cat > ~/.credentials-velero <<EOF
[default]
aws_access_key_id = minio
aws_secret_access_key = minio123
EOF

velero install \
  --provider aws \
  --plugins velero/velero-plugin-for-aws:v1.0.0 \
  --bucket velero \
  --secret-file ~/.credentials-velero \
  --use-volume-snapshots=false \
  --backup-location-config region=minio,s3ForcePathStyle="true",s3Url=http://10.1.40.70:32010
```

Because Velero is being installed in a new Kubernetes cluster and has to reach the MinIO service running in the old cluster, s3Url is set to MinIO's NodePort address.
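Because both clusters now point at the same bucket, it can be safer to make the storage location on the target cluster read-only, so that the target cluster cannot write new backups to, or delete backups from, the shared bucket. A sketch using the `accessMode` field of BackupStorageLocation; `make_bsl_readonly` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: switch a BackupStorageLocation to ReadOnly on the restore cluster.
# KUBECTL can be overridden (e.g. with "echo") for a dry run.
KUBECTL="${KUBECTL:-kubectl}"

make_bsl_readonly() {
  "$KUBECTL" patch backupstoragelocation "${1:-default}" -n velero \
    --type merge --patch '{"spec":{"accessMode":"ReadOnly"}}'
}
```

Restores still work against a ReadOnly location; patch `accessMode` back to `ReadWrite` if the cluster should later take its own backups there.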

The new Kubernetes cluster does not run vonebfs yet. Restoring the vonebfs backup we just created onto the new cluster migrates the service between clusters:

```shell
# velero restore create --from-backup vonebfs-backup --wait
Restore request "vonebfs-backup-20230301120726" submitted successfully.
Waiting for restore to complete. You may safely press ctrl-c to stop waiting - your restore will continue in the background.
..............
Restore completed with status: Completed. You may check for more information using the commands `velero restore describe vonebfs-backup-20230301120726` and `velero restore logs vonebfs-backup-20230301120726`.
```

Check the restored services:

```shell
# kubectl get all -n vonebfs
NAME READY STATUS RESTARTS AGE
pod/ipfs-cluster-0 2/2 Running 0 3m4s
pod/ipfs-cluster-1 2/2 Running 0 3m4s
pod/ipfs-cluster-2 2/2 Running 0 3m4s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/glusterfs-dynamic-1bcc87c3-7e1c-4835-8022-0fdc88ace649 ClusterIP 10.96.77.55 <none> 1/TCP 3m4s
service/glusterfs-dynamic-1cd64a6e-dae3-466a-8c15-c9a2877cc631 ClusterIP 10.96.46.197 <none> 1/TCP 3m4s
service/glusterfs-dynamic-35499208-c1f3-4019-990e-bf729643626d ClusterIP 10.96.80.244 <none> 1/TCP 3m4s
service/glusterfs-dynamic-3bd82327-d812-43d0-901b-f1d092303ea1 ClusterIP 10.96.119.147 <none> 1/TCP 2m58s
service/glusterfs-dynamic-44cdc584-39fc-4e68-bab9-cc0e9ac76c3e ClusterIP 10.96.226.62 <none> 1/TCP 2m58s
service/glusterfs-dynamic-4b3eb377-012f-4739-8a07-b104fa5e9f1d ClusterIP 10.96.71.47 <none> 1/TCP 2m58s
service/glusterfs-dynamic-56944b29-852f-4618-b814-09a53d473b43 ClusterIP 10.96.153.245 <none> 1/TCP 3m4s
service/glusterfs-dynamic-6f3a339d-dfd7-413a-b69f-58b782edb48c ClusterIP 10.96.201.215 <none> 1/TCP 2m58s
service/glusterfs-dynamic-84363aa4-d2d3-445f-90e2-ad311089625a ClusterIP 10.96.22.156 <none> 1/TCP 2m58s
service/glusterfs-dynamic-ada44184-ab87-4d0e-9f09-eff479433a77 ClusterIP 10.96.232.18 <none> 1/TCP 3m4s
service/glusterfs-dynamic-d189e346-626b-4b87-85d6-f3b090cb9405 ClusterIP 10.96.219.89 <none> 1/TCP 2m58s
service/glusterfs-dynamic-d681163d-a7d5-4d48-ba49-6387f65b434c ClusterIP 10.96.123.211 <none> 1/TCP 3m4s
service/ipfs-cluster ClusterIP 10.96.204.232 <none> 4001/TCP,4002/TCP,5001/TCP,8081/TCP,8080/TCP,9094/TCP,9095/TCP,9096/TCP 2m58s
service/ipfs-cluster-test ClusterIP 10.96.169.242 <none> 5001/TCP,9094/TCP 2m58s

NAME READY AGE
statefulset.apps/ipfs-cluster 3/3 2m58s
```
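To create a temporary test copy alongside the original (one of the use cases mentioned at the start), the same backup can be restored into a differently named namespace with `--namespace-mappings`. The restore and namespace names below are placeholders, and `restore_as` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: restore a backup into a renamed namespace and wait for completion.
# VELERO can be overridden (e.g. with "echo") for a dry run.
VELERO="${VELERO:-velero}"

restore_as() {
  local backup="$1" src_ns="$2" dst_ns="$3"
  "$VELERO" restore create "${backup}-to-${dst_ns}" \
    --from-backup "$backup" \
    --namespace-mappings "${src_ns}:${dst_ns}" \
    --wait
}
```

Usage: `restore_as vonebfs-backup vonebfs vonebfs-test`. Cluster-scoped objects that the namespace depends on, such as PersistentVolumes, are not renamed, so check for conflicts when restoring into the same cluster as the original.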